Home Lab 3

Now that I've got all the hardware up and running with Proxmox and pfSense, it's time to start deploying some workloads! Because I plan to do some security research on Kubernetes, that's the platform I'm going to use to deploy my apps. So, step one is to create an atomic deployment method for a cluster on Proxmox. While working on this little project, I've found some gaps in the open source tooling for this. I may contribute fixes for those gaps to the projects in the future; if I do, I'll be sure to document my process here! Because I'm in love with Terraform, I tend to overuse it... I'll be the first to admit that. But until something comes along that seems like a perfect replacement, I enjoy having all my infrastructure defined from a single point of execution far too much to give it up. Again, I use a combination of Terraform and Packer to accomplish my goal:

Packer

First, we need a base OS image with cloud-init enabled. We can then clone it and run scripts on each clone to install Kubernetes and configure it as either a master node or a worker node.

ubuntu-base.pkr.hcl

source "proxmox-iso" "ubuntu-base" {

# ID

template_name = var.TEMPLATE_NAME

template_description = "Ubuntu 20.04 Server base install template, cloud init enabled"

# Proxmox Access configuration

proxmox_url = var.PM_URL

username = var.PM_USER

password = var.PM_PASS

node = var.PM_NODE

insecure_skip_tls_verify = true

# Base ISO File configuration

iso_file = var.UBUNTU_IMG_PATH

iso_storage_pool = "local-lvm"

iso_checksum = var.UBUNTU_IMG_CHECKSUM

# Cloud init enable

cloud_init = true

cloud_init_storage_pool = "local-lvm"

# SSH Config

ssh_password = var.SUDO_PASSWORD

ssh_username = var.SUDO_USERNAME

ssh_timeout = "10000s"

ssh_handshake_attempts = "20"

# System

memory = 4096

cores = 2

sockets = 1

cpu_type = "host"

os = "l26"

# Storage

disks {

type="scsi"

disk_size="8G"

storage_pool="local-lvm"

storage_pool_type="lvm"

}

# Add LAN network DHCP from pfSense @ 10.0.0.0/22

network_adapters {

model = "virtio"

bridge = "vmbr0"

vlan_tag = var.vmbr0_vlan_tag

}

# Remove installation media

unmount_iso = true

# Start this on system boot

onboot = false

# Boot commands

boot_wait = "5s"

http_directory = "http"

boot_command = [

"<enter><wait><enter><wait><f6><esc>",

"autoinstall ds=nocloud-net;s=http://{{ .HTTPIP }}:{{ .HTTPPort }}/",

"<wait2s><enter><wait2s><enter>"

]

# We don't need to do anything further in packer for now

# If we did, we would have to install qemu utils to discover IP & configure ssh communicator

communicator = "ssh"

qemu_agent = true

}

build {

// Load iso configuration

name = "ubuntu-base"

sources = ["source.proxmox-iso.ubuntu-base"]

}
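
Packer picks up any environment variable named `PKR_VAR_<name>` as the value of the input variable `<name>`, which is how the Terraform wrapper later in this post feeds the build. Here is a minimal sketch of a pre-flight check for that, assuming none of the variables declare a default. The function name `missing_pkr_vars` is hypothetical; the variable list is taken from the source block above.

```bash
#!/bin/bash
# Hypothetical helper: print the name of every PKR_VAR_* variable that the
# source block above reads but that is empty or unset in the environment.
missing_pkr_vars() {
  for name in TEMPLATE_NAME PM_URL PM_USER PM_PASS PM_NODE \
              UBUNTU_IMG_PATH UBUNTU_IMG_CHECKSUM \
              SUDO_USERNAME SUDO_PASSWORD vmbr0_vlan_tag; do
    # Indirect lookup of PKR_VAR_<name>. eval is acceptable here because
    # the names come from the fixed list above, not from user input.
    eval "value=\${PKR_VAR_${name}:-}"
    [ -n "$value" ] || echo "PKR_VAR_${name}"
  done
}
```

Calling `missing_pkr_vars` right before `packer build` and aborting when it prints anything should surface a forgotten variable before the build ever touches Proxmox.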

Cloud init data is fed from the directory ./http, using a templated file from Terraform. Here's the Template:

#cloud-config
autoinstall:
  version: 1
  identity:
    hostname: ${HOSTNAME}
    password: ${SUDO_PASSWORD_HASH}
    username: ${SUDO_USERNAME}
  network:
    version: 2
    ethernets:
      ens18:
        dhcp4: true
        dhcp-identifier: mac
  ssh:
    install-server: true
    authorized-keys: ["${SSH_PUBLIC_KEY}","${SSH_PUBLIC_KEY_BOT}"]
  packages:
    - qemu-guest-agent
  late-commands:
    - 'echo "${SUDO_USERNAME} ALL=(ALL) NOPASSWD: ALL" > /target/etc/sudoers.d/${SUDO_USERNAME}'
    - chmod 440 /target/etc/sudoers.d/${SUDO_USERNAME}
    - apt-get -y install linux-headers-$(uname -r)
  locale: en_US
  keyboard:
    layout: us
    variant: ''
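
The `password` field under `identity` expects a crypted hash rather than the plaintext password, which is why the template takes `SUDO_PASSWORD_HASH`. Below is a minimal sketch of a sanity check for that value, assuming a SHA-512 crypt hash (the `$6$` prefix). The function name `is_sha512_crypt` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if the argument has the shape of a
# SHA-512 crypt hash, i.e. $6$<salt>$<hash> (an optional rounds= field
# before the salt also passes).
# One way to produce such a hash, assuming OpenSSL 1.1.1 or newer:
#   printf '%s\n' 'my-password' | openssl passwd -6 -stdin
is_sha512_crypt() {
  case "$1" in
    '$6$'?*'$'?*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Because the hash is full of `$` characters, it is safest to wrap it in single quotes whenever it passes through a shell on its way into the Terraform variable.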

The following terraform resources use the template to create our cloud-init file:

// Create cloud-init subiquity file from template

data "template_file" "cloud-init-user-data" {

template = "${file("${path.module}/packer/http/user-data.tpl")}"

vars = {

HOSTNAME = "ubuntu2004"

SUDO_PASSWORD_HASH = "${var.PACKER_SUDO_HASH}"

SUDO_USERNAME = "${var.PACKER_SUDO_USER}"

SSH_PUBLIC_KEY = "${var.PACKER_SSH_PUB_KEY}"

SSH_PUBLIC_KEY_BOT = "${tls_private_key.ubuntu_base_key.public_key_openssh}"

}

}

// Write template result to http mount directory

resource "local_file" "user-data" {

content = "${data.template_file.cloud-init-user-data.rendered}"

filename = "${path.module}/packer/http/user-data"

}

Terraform

With our Packer file declared and all the data we need to feed it ready, we just need Terraform to download the Ubuntu ISO onto Proxmox and run the Packer build that turns it into a template for deployment:

packer.tf

// Upload ubuntu base image to Proxmox servers that will host k8s vm's

resource "null_resource" "ubuntu-base-image" {

provisioner "remote-exec" {

inline = [

"cd /var/lib/vz/template/iso",

"wget '${var.UBUNTU_IMG_URL}'"

]

connection {

type = "ssh"

user = "root"

password = "${var.PM_PASS}"

host = "192.168.2.25"

}

}

}

resource "null_resource" "ubuntu-base-image-0" {

provisioner "remote-exec" {

inline = [

"cd /var/lib/vz/template/iso",

"wget '${var.UBUNTU_IMG_URL}'"

]

connection {

type = "ssh"

user = "root"

password = "${var.PM_PASS}"

host = "pveworker0.salmon.sec"

}

}

}

// Generate ssh key for terraform to use

resource "tls_private_key" "ubuntu_base_key" {

algorithm = "RSA"

rsa_bits = 4096

}

resource "local_file" "ubuntu_base_private_key" {

directory_permission = "0700"

file_permission = "0600"

sensitive_content = "${tls_private_key.ubuntu_base_key.private_key_pem}"

filename = "${path.module}/.ssh/key"

}

// Create cloud-init subiquity file from template

data "template_file" "cloud-init-user-data" {

template = "${file("${path.module}/packer/http/user-data.tpl")}"

vars = {

HOSTNAME = "ubuntu2004"

SUDO_PASSWORD_HASH = "${var.PACKER_SUDO_HASH}"

SUDO_USERNAME = "${var.PACKER_SUDO_USER}"

SSH_PUBLIC_KEY = "${var.PACKER_SSH_PUB_KEY}"

SSH_PUBLIC_KEY_BOT = "${tls_private_key.ubuntu_base_key.public_key_openssh}"

}

}

// Write template result to http mount directory

resource "local_file" "user-data" {

content = "${data.template_file.cloud-init-user-data.rendered}"

filename = "${path.module}/packer/http/user-data"

}

// Execute Packer to build our Ubuntu base image on the Proxmox server

resource "null_resource" "packer-ubuntu-base-build" {

provisioner "local-exec" {

environment = {

PKR_VAR_TEMPLATE_NAME="ubuntu-base"

PKR_VAR_PM_URL="${var.PM_API_URL}"

PKR_VAR_PM_USER="${var.PM_USER}@pam"

PKR_VAR_PM_PASS="${var.PM_PASS}"

PKR_VAR_PM_NODE="${var.PM_NODE}"

PKR_VAR_UBUNTU_IMG_PATH="local:iso/ubuntu-20.04.2-live-server-amd64.iso"

PKR_VAR_UBUNTU_IMG_CHECKSUM="${var.UBUNTU_IMG_SUM}"

PKR_VAR_HOSTNAME="ubuntu2004"

PKR_VAR_SUDO_USERNAME="${var.PACKER_SUDO_USER}"

PKR_VAR_SUDO_PASSWORD="${var.PACKER_SUDO_PASS}"

PACKER_LOG=1

PKR_VAR_vmbr0_vlan_tag="4"

}

command = "cd ${path.module}/packer && packer build -only=ubuntu-base.proxmox-iso.ubuntu-base ."

}

depends_on = [

null_resource.ubuntu-base-image,

local_file.user-data

]

}

// Execute Packer to build our Ubuntu base image on pveworker0

resource "null_resource" "packer-ubuntu-base-build-0" {

provisioner "local-exec" {

environment = {

PKR_VAR_TEMPLATE_NAME="ubuntu-base-0"

PKR_VAR_PM_URL="${var.PM_API_URL}"

PKR_VAR_PM_USER="${var.PM_USER}@pam"

PKR_VAR_PM_PASS="${var.PM_PASS}"

PKR_VAR_PM_NODE="pveworker0"

PKR_VAR_UBUNTU_IMG_PATH="local:iso/ubuntu-20.04.2-live-server-amd64.iso"

PKR_VAR_UBUNTU_IMG_CHECKSUM="${var.UBUNTU_IMG_SUM}"

PKR_VAR_HOSTNAME="ubuntu2004"

PKR_VAR_SUDO_USERNAME="${var.PACKER_SUDO_USER}"

PKR_VAR_SUDO_PASSWORD="${var.PACKER_SUDO_PASS}"

PKR_VAR_vmbr0_vlan_tag="4"

PACKER_LOG=1

}

command = "cd ${path.module}/packer && packer build -only=ubuntu-base.proxmox-iso.ubuntu-base ."

}

depends_on = [

local_file.user-data,

null_resource.packer-ubuntu-base-build

]

}

packer_worker0.tf

// Upload ubuntu base image to Proxmox server

resource "null_resource" "ubuntu-base-image-0" {

provisioner "remote-exec" {

inline = [

"cd /var/lib/vz/template/iso",

"wget '${var.UBUNTU_IMG_URL}'"

]

connection {

type = "ssh"

user = "root"

password = "${var.PM_PASS}"

host = "pveworker0.salmon.sec"

}

}

}

// Execute Packer to build our Ubuntu base image on pveworker0

resource "null_resource" "packer-ubuntu-base-build-0" {

provisioner "local-exec" {

environment = {

PKR_VAR_PM_URL="${var.PM_API_URL}"

PKR_VAR_PM_USER="${var.PM_USER}@pam"

PKR_VAR_PM_PASS="${var.PM_PASS}"

PKR_VAR_PM_NODE="pveworker0"

PKR_VAR_UBUNTU_IMG_PATH="local:iso/ubuntu-20.04.2-live-server-amd64.iso"

PKR_VAR_UBUNTU_IMG_CHECKSUM="${var.UBUNTU_IMG_SUM}"

PKR_VAR_HOSTNAME="ubuntu2004"

PKR_VAR_SUDO_USERNAME="${var.PACKER_SUDO_USER}"

PKR_VAR_SUDO_PASSWORD="${var.PACKER_SUDO_PASS}"

PACKER_LOG=1

}

command = "cd ${path.module}/packer && packer build -only=ubuntu-base.proxmox-iso.ubuntu-base ."

}

depends_on = [

local_file.user-data

]

}

Now, we can create the machines on Proxmox from our base image, and leverage cloud-init to ssh in and execute scripts to install everything.

Scripts and Script Templates

1_update_host.tpl

#!/bin/bash

echo "${SUDO_PASSWORD}" | sudo -S apt update

sudo apt upgrade -y

# The disk has likely been grown beyond the 8 GB size set in the Packer image,

# so resize the partition and volumes to use the new space

# Rescan SCSI bus

sudo bash -c "echo '1' > /sys/class/block/sda/device/rescan"

# Expand partition

sudo sgdisk -e /dev/sda

sudo sgdisk -d 3 /dev/sda

sudo sgdisk -N 3 /dev/sda

sudo partprobe /dev/sda

# Expand pv

sudo pvresize /dev/sda3

# Expand LV

sudo lvextend -r -l +100%FREE /dev/ubuntu-vg/ubuntu-lv

2_install_packages.sh

#!/bin/bash

############################################

### Install kubelet, kubeadm and kubectl ###

############################################

sudo apt update

sudo apt -y install curl apt-transport-https

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update

sudo apt -y install vim git curl wget kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl
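
Since this script was written, the `apt.kubernetes.io` repository has been retired in favour of the community-owned `pkgs.k8s.io`, and `apt-key` is deprecated. Here is a minimal sketch of building the replacement source line. The function name `k8s_apt_source_line` is hypothetical, and the keyring path follows the convention in the upstream install docs rather than anything in this post. As far as I know, the new repository only carries v1.24 and later.

```bash
#!/bin/bash
# Hypothetical helper: print the apt source line for one Kubernetes minor
# version (for example v1.30) hosted on pkgs.k8s.io.
k8s_apt_source_line() {
  case "$1" in
    v[0-9]*.[0-9]*) ;;
    *) echo "expected a minor version such as v1.30" >&2; return 1 ;;
  esac
  echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

# Intended use on the node (not run here):
#   curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key \
#     | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
#   k8s_apt_source_line v1.30 | sudo tee /etc/apt/sources.list.d/kubernetes.list
```

Each minor version lives in its own repository, so upgrading the cluster later means rewriting this line with the new version.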

3_disable_swap.sh

#!/bin/bash

### Disable Swap ###

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

sudo swapoff -a
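
The `sed` pattern above only matches when `swap` is surrounded by literal spaces, so it would miss a tab-separated fstab entry. Here is a slightly more defensive sketch, assuming GNU `sed`. The function name `comment_out_swap` is hypothetical. It matches any whitespace around `swap` and skips lines that are already commented, so it is safe to run twice.

```bash
#!/bin/bash
# Hypothetical helper: comment out every active swap entry in an fstab file.
# $1 is the file to edit and defaults to /etc/fstab.
comment_out_swap() {
  # ^[^#]* only matches lines with no '#' before the swap field, so lines
  # that are already commented out are left untouched.
  sed -i '/^[^#]*[[:space:]]swap[[:space:]]/ s/^/#/' "${1:-/etc/fstab}"
}
```

Editing `/etc/fstab` itself needs root, so on a real node the function would be called from a script run under `sudo`, followed by `swapoff -a` as before.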

4_modprobe.sh

#!/bin/bash

###############################################

### Ensure Iptables can see bridged traffic ###

###############################################

sudo modprobe overlay

sudo modprobe br_netfilter

sudo tee /etc/sysctl.d/kubernetes.conf<<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system
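
After `sysctl --system` runs, it can be worth confirming that the three settings actually took effect, since the bridge keys only exist once `br_netfilter` is loaded. Here is a minimal sketch that reads the live values straight from `/proc/sys`. The function name `unset_k8s_sysctls` and the optional root argument are hypothetical; the argument is only there to make the check easy to try against a scratch directory.

```bash
#!/bin/bash
# Hypothetical helper: print each required sysctl key whose live value is
# not 1. $1 is the sysctl tree root and defaults to /proc/sys.
unset_k8s_sysctls() {
  root="${1:-/proc/sys}"
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Key names map to paths by swapping dots for slashes.
    path="$root/$(echo "$key" | tr . /)"
    # A missing file usually means br_netfilter is not loaded.
    [ "$(cat "$path" 2>/dev/null)" = "1" ] || echo "$key"
  done
}
```

An empty result means all three settings are active; anything printed points at the key that still needs attention.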

5_install_container_runtime.sh

#!/bin/bash

#########################

### Container Runtime ###

#########################

# Configure persistent loading of modules

sudo tee /etc/modules-load.d/containerd.conf <<EOF

overlay

br_netfilter

EOF

# Reload configs

sudo sysctl --system

# Install required packages

sudo apt install -y curl gnupg2 software-properties-common apt-transport-https ca-certificates

# Add Docker repo

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

# Install containerd

sudo apt update

sudo apt install -y containerd.io

# Configure containerd and start service

#sudo mkdir -p /etc/containerd

#sudo containerd config default /etc/containerd/config.toml

# restart containerd

sudo systemctl restart containerd

sudo systemctl enable containerd

sudo rm /etc/containerd/config.toml

sudo systemctl restart containerd

6_init_kubeadm_images.sh

#!/bin/bash

# Add static ref to k8s.gcr.io

#IP=$(dig +short k8s.gcr.io | awk 'FNR==2{ print $0 }')

#sudo sh -c 'echo "$IP k8s.gcr.io" >> /etc/hosts'

#IP=$(dig +short dl.k8s.io | awk 'FNR==2{ print $0 }')

#sudo sh -c "echo \"$IP dl.k8s.io\" >> /etc/hosts"

# Enable kubelet and pull config images (manually because kubeadm fails...)

sudo systemctl enable kubelet

while read url; do sudo ctr image pull $url; done << EOF

$(sudo kubeadm config images list $1)

EOF
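
The pull loop above keeps going silently if one image fails. Here is a minimal sketch of the same idea with quoting and error reporting added. The function name `pull_images` is hypothetical, and the pull command is passed in as arguments so the loop itself stays independent of `ctr`.

```bash
#!/bin/bash
# Hypothetical helper: read image references on stdin, one per line, and
# run the given pull command on each. Returns non-zero if any pull failed.
pull_images() {
  failed=0
  while IFS= read -r image; do
    [ -n "$image" ] || continue   # skip blank lines
    # Redirect stdin so the pull command cannot swallow the image list.
    "$@" "$image" < /dev/null || {
      echo "failed to pull $image" >&2
      failed=1
    }
  done
  return "$failed"
}
```

On a node this would look something like `sudo kubeadm config images list | pull_images sudo ctr -n k8s.io images pull`. The `-n k8s.io` part is an assumption on my side: as far as I know, kubelet looks for images in containerd's `k8s.io` namespace, so images pulled into the default namespace may not be reused.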

7_kubeadm_master.tpl

#!/bin/bash

#########################

### Master Node Init ###

#########################

# Create control plane

sudo kubeadm init \

--pod-network-cidr=${POD_NETWORK_CIDR} \

--control-plane-endpoint=${CONTROL_PLANE_ENDPOINT}

8_configure_kubectl.sh

#!/bin/bash

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

9_install_cni_plugin.sh

#!/bin/bash

### Install a Container Network Interface Plugin ###

# Install Calico CNI

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Lovely! With all these scripts defined for installing and configuring Kubernetes on top of Ubuntu, we can actually deploy it all with Terraform:

master_node.tf

# Render any script templates required

data "template_file" "update_and_sudo" {

template = "${file("${path.module}/scripts/1_update_host.tpl")}"

vars = {

SUDO_PASSWORD = "${var.PACKER_SUDO_PASS}"

}

}

data "template_file" "kubeadm_master_init" {

template = "${file("${path.module}/scripts/7_kubeadm_master.tpl")}"

vars = {

POD_NETWORK_CIDR = "${var.POD_NETWORK_CIDR}"

CONTROL_PLANE_ENDPOINT = "${var.CONTROL_PLANE_ENDPOINT}"

}

}

# Use ae:d2:c0:6f:91:f0 for NIC on vmbr3 to get static assigned IP 10.0.64.4

# Hostname is k8master

# Domain is salmon.sec

# Gateway @ 10.0.64.1

resource "proxmox_vm_qemu" "k8master" {

# Clone and metadata config

name = "k8master"

target_node = var.PM_NODE

desc = "Kubernetes master node"

clone = "ubuntu-base"

# No need to full clone

full_clone = false

# PXE boot makes the chain fail, make sure we boot from disk!!

boot = "dcn"

bootdisk = "scsi0"

# Enable QEMU Guest Agent to help us get guest IP to ssh

# The template has installed this and agent is running on boot

agent = 1

# Start when server reboots

onboot = true

# System

memory = 8192

cores = 2

sockets = 2

cpu = "host"

qemu_os = "l26"

disk {

type = "scsi"

storage = "local-lvm"

size = "32G"

}

# WAN

network {

model = "virtio"

bridge = "vmbr3"

# I setup a static DHCP lease on my router for this MAC

# Master node @ 10.0.64.4

# k8master.salmon.sec

macaddr = "ae:d2:c0:6f:91:f0"

tag = 64

}

network {

model = "virtio"

bridge = "vmbr3"

tag = 120

}

// VLAN 130 With Ceph Private

network {

model = "virtio"

bridge = "vmbr2"

tag = 4

}

network {

model = "virtio"

bridge = "vmbr2"

tag = 130

}

// Attempt remote-exec

os_type = "cloud-init"

ipconfig0 = "ip=dhcp"

connection {

type = "ssh"

host = self.ssh_host

user = var.PACKER_SUDO_USER

private_key = tls_private_key.ubuntu_base_key.private_key_pem

port = "22"

}

// Set Hostname

provisioner "remote-exec" {

inline = [

# Set the hostname to match the VM name

"sudo hostnamectl set-hostname ${self.name}"

]

}

// Upload scripts with variables subbed

provisioner "file" {

content = data.template_file.update_and_sudo.rendered

destination = "/tmp/update_host.sh"

}

provisioner "file" {

content = data.template_file.kubeadm_master_init.rendered

destination = "/tmp/kubeadm_master.sh"

}

// Execute initial host update & cache sudo password

provisioner "remote-exec" {

inline = [

# Run the host update script

"chmod +x /tmp/update_host.sh",

"/tmp/update_host.sh",

]

}

// Install kubelet/kubeadm/kubectl, disable swap

// resolve modprobe iptables, install containerd

provisioner "remote-exec" {

scripts = [

"${path.module}/scripts/2_install_packages.sh",

"${path.module}/scripts/3_disable_swap.sh",

"${path.module}/scripts/4_modprobe.sh",

"${path.module}/scripts/5_install_container_runtime.sh",

"${path.module}/scripts/6_init_kubeadm_images.sh",

]

}

// Execute kubeadm master init

provisioner "remote-exec" {

inline = [

# Execute kubeadm master init

"chmod +x /tmp/kubeadm_master.sh",

"/tmp/kubeadm_master.sh",

]

}

// Configure kubectl

provisioner "remote-exec" {

scripts = [

"${path.module}/scripts/8_configure_kubectl.sh",

"${path.module}/scripts/9_install_cni_plugin.sh",

]

}

depends_on = [

null_resource.packer-ubuntu-base-build,

]

lifecycle {

ignore_changes = [

# After a reboot, this swaps as it begins owning its own disk on top of the template

full_clone,

define_connection_info,

disk,

]

}

}

// Download config file to use kubectl from localhost

resource "null_resource" "download_kube_config" {

provisioner "local-exec" {

command =<<EOT

echo "Creating .kube directory to store config file"

mkdir -p ${path.module}/.kube

echo "Downloading config file"

scp -i ${path.module}/.ssh/key -o 'StrictHostKeyChecking no' ${var.PACKER_SUDO_USER}@${var.CONTROL_PLANE_ENDPOINT}:/home/${var.PACKER_SUDO_USER}/.kube/config ${path.module}/.kube/config

EOT

interpreter = ["bash", "-c"]

}

depends_on = [

proxmox_vm_qemu.k8master,

local_file.ubuntu_base_private_key

]

}

output "kubectl_example" {

value = "You can now view cluster details: kubectl --kubeconfig .kube/config get nodes"

}

worker_node.tf

// Worker nodes also placed on master node hardware

resource "proxmox_vm_qemu" "k8worker" {

# Replication

count = 0

# Clone and metadata config

name = "k8worker${count.index}"

target_node = var.PM_NODE

desc = "Kubernetes worker node ${count.index}"

clone = "ubuntu-base"

# No need to full clone

full_clone = false

# PXE boot makes the chain fail, make sure we boot from disk!!

boot = "dcn"

bootdisk = "scsi0"

# Enable QEMU Guest Agent to help us get guest IP to ssh

# The template has installed this and agent is running on boot

agent = 1

# Start when server reboots

onboot = true

# System

memory = 4096

cores = 1

sockets = 2

cpu = "host"

qemu_os = "l26"

# Storage

disk {

type = "scsi"

storage = "local-lvm"

size = "32G"

}

# WAN

# with VLAN 120 for Ceph public

network {

model = "virtio"

bridge = "vmbr3"

tag = 64

}

network {

model = "virtio"

bridge = "vmbr3"

tag = 120

}

// VLAN 130 With Ceph Private

network {

model = "virtio"

bridge = "vmbr2"

tag = 4

}

network {

model = "virtio"

bridge = "vmbr2"

tag = 130

}

// Attempt remote-exec

os_type = "cloud-init"

ipconfig0 = "ip=dhcp"

connection {

type = "ssh"

host = self.ssh_host

user = var.PACKER_SUDO_USER

private_key = tls_private_key.ubuntu_base_key.private_key_pem

port = "22"

}

// Set Hostname

provisioner "remote-exec" {

inline = [

# Set the hostname to match the VM name

"sudo hostnamectl set-hostname ${self.name}"

]

}

// Upload scripts with variables subbed

provisioner "file" {

content = data.template_file.update_and_sudo.rendered

destination = "/tmp/update_host.sh"

}

// Execute initial host update & cache sudo password

provisioner "remote-exec" {

inline = [

# Run the host update script

"chmod +x /tmp/update_host.sh",

"/tmp/update_host.sh",

]

}

// Install kubelet/kubeadm/kubectl, disable swap

// resolve modprobe iptables, install containerd

provisioner "remote-exec" {

scripts = [

"${path.module}/scripts/2_install_packages.sh",

"${path.module}/scripts/3_disable_swap.sh",

"${path.module}/scripts/4_modprobe.sh",

"${path.module}/scripts/5_install_container_runtime.sh",

"${path.module}/scripts/6_init_kubeadm_images.sh",

]

}

// Upload bot private-key so we can connect to master node

provisioner "file" {

content = tls_private_key.ubuntu_base_key.private_key_pem

destination = "/home/${var.PACKER_SUDO_USER}/.ssh/botkey"

}

// Use our bot key to fetch the cluster join command from the master node and run it

provisioner "remote-exec" {

inline = [

# Set permissions on key

"chmod 600 $HOME/.ssh/botkey && chmod 700 $HOME/.ssh",

# Copy cluster join command and execute

"JOIN=$(ssh -i $HOME/.ssh/botkey -o 'StrictHostKeyChecking no' ${var.PACKER_SUDO_USER}@k8master.salmon.sec 'kubeadm token create --print-join-command' )",

"sudo $JOIN",

# Remove key

"rm $HOME/.ssh/botkey"

]

}

depends_on = [

null_resource.packer-ubuntu-base-build,

proxmox_vm_qemu.k8master,

]

lifecycle {

ignore_changes = [

# After a reboot, this swaps as it begins owning its own disk on top of the template

full_clone,

define_connection_info,

disk,

]

}

}

// Worker nodes actually on workload server

resource "proxmox_vm_qemu" "k8worker-pveworker0" {

# Replication

count = 3

# Clone and metadata config

name = "pveworker0-k8worker${count.index}"

target_node = "pveworker0"

desc = "Kubernetes worker node ${count.index} on pveworker0"

clone = "ubuntu-base-0"

# No need to full clone

full_clone = false

# PXE boot makes the chain fail, make sure we boot from disk!!

boot = "dcn"

bootdisk = "scsi0"

# Enable QEMU Guest Agent to help us get guest IP to ssh

# The template has installed this and agent is running on boot

agent = 1

# Start when server reboots

onboot = true

# System

memory = 16384

cores = 4

sockets = 2

cpu = "host"

qemu_os = "l26"

# Storage

disk {

type = "scsi"

storage = "local-lvm"

size = "32G"

}

disk {

type = "virtio"

storage = "local-lvm"

size = "500G"

}

# WAN

# with VLAN 120 for Ceph public

ipconfig0 = "ip=dhcp"

network {

model = "virtio"

bridge = "vmbr1"

tag = 64

}

ipconfig1 = "ip=dhcp"

network {

model = "virtio"

bridge = "vmbr1"

tag = 120

}

// VLAN 130 With Ceph Private

ipconfig2 = "ip=dhcp"

network {

model = "virtio"

bridge = "vmbr0"

tag = 4

}

network {

model = "virtio"

bridge = "vmbr0"

tag = 130

}

// Attempt remote-exec

os_type = "cloud-init"

connection {

type = "ssh"

host = self.ssh_host

user = var.PACKER_SUDO_USER

private_key = tls_private_key.ubuntu_base_key.private_key_pem

port = "22"

}

// Set Hostname

provisioner "remote-exec" {

inline = [

# Set the hostname to match the VM name

"sudo hostnamectl set-hostname ${self.name}"

]

}

// Upload scripts with variables subbed

provisioner "file" {

content = data.template_file.update_and_sudo.rendered

destination = "/tmp/update_host.sh"

}

// Execute initial host update & cache sudo password

provisioner "remote-exec" {

inline = [

# Run the host update script

"chmod +x /tmp/update_host.sh",

"/tmp/update_host.sh",

]

}

// Install kubelet/kubeadm/kubectl, disable swap

// resolve modprobe iptables, install containerd

provisioner "remote-exec" {

scripts = [

"${path.module}/scripts/2_install_packages.sh",

"${path.module}/scripts/3_disable_swap.sh",

"${path.module}/scripts/4_modprobe.sh",

"${path.module}/scripts/5_install_container_runtime.sh",

"${path.module}/scripts/6_init_kubeadm_images.sh",

]

}

// Upload bot private-key so we can connect to master node

provisioner "file" {

content = tls_private_key.ubuntu_base_key.private_key_pem

destination = "/home/${var.PACKER_SUDO_USER}/.ssh/botkey"

}

// Use our bot key to fetch the cluster join command from the master node and run it

provisioner "remote-exec" {

inline = [

# Set permissions on key

"chmod 600 $HOME/.ssh/botkey && chmod 700 $HOME/.ssh",

# Copy cluster join command and execute

"JOIN=$(ssh -i $HOME/.ssh/botkey -o 'StrictHostKeyChecking no' ${var.PACKER_SUDO_USER}@k8master.salmon.sec 'kubeadm token create --print-join-command' )",

"sudo $JOIN",

# Remove key

"rm $HOME/.ssh/botkey"

]

}

depends_on = [

null_resource.packer-ubuntu-base-build-0,

proxmox_vm_qemu.k8master,

]

lifecycle {

ignore_changes = [

# After a reboot, this swaps as it begins owning its own disk on top of the template

full_clone,

define_connection_info,

disk,

]

}

}
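
The worker provisioners run `sudo $JOIN` on whatever text came back over SSH, so an error message or unexpected output on the master would get executed as root. Here is a minimal sketch of a guard, assuming the usual shape of `kubeadm token create --print-join-command` output. The function name `is_join_command` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if the argument looks like a single
# `kubeadm join` command carrying a token and a CA cert hash.
is_join_command() {
  # Reject anything containing shell metacharacters or line breaks.
  stripped="$(printf '%s' "$1" | tr -d ';&|`$<>\n')"
  [ "$stripped" = "$1" ] || return 1
  case "$1" in
    'kubeadm join '*' --token '*' --discovery-token-ca-cert-hash sha256:'*) return 0 ;;
    *) return 1 ;;
  esac
}
```

In the inline provisioner this would sit between the two existing steps, along the lines of `is_join_command "$JOIN" && sudo $JOIN`, so that a failed SSH call stops the provisioner instead of running garbage.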

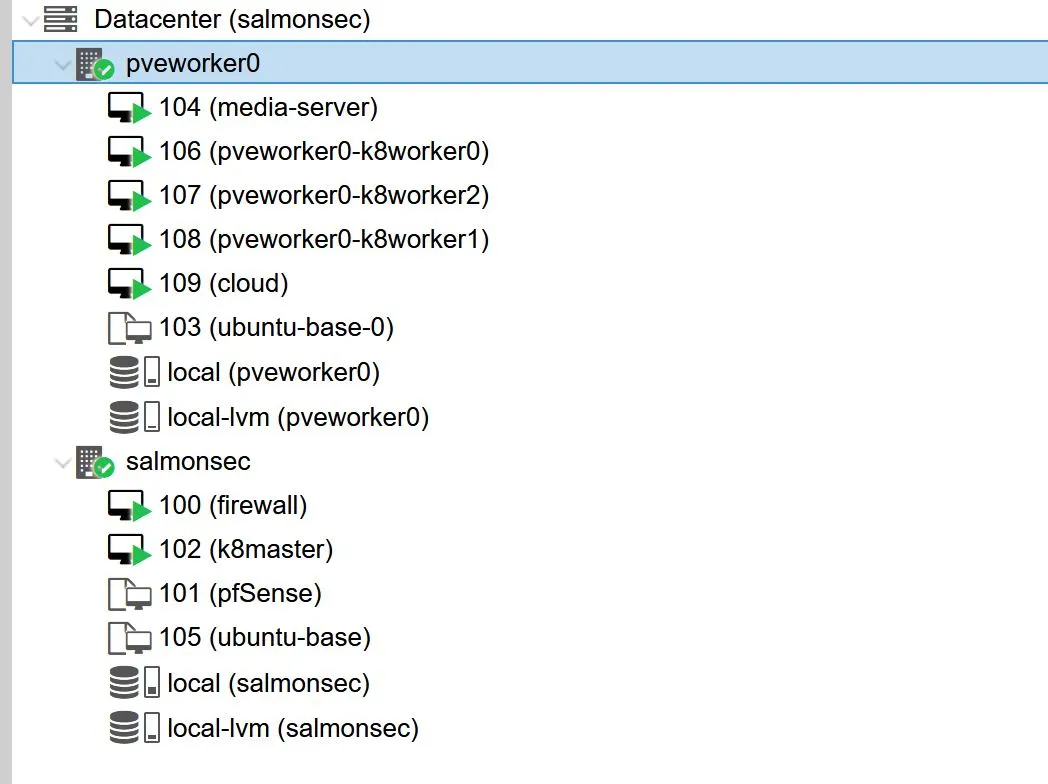

After running through all of this, we have a one master, three worker cluster:

The terraform module downloads the kube config file so we can immediately connect to our cluster via kubectl:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8master Ready control-plane,master 56d v1.21.1

pveworker0-k8worker0 Ready <none> 55d v1.21.1

pveworker0-k8worker1 Ready <none> 55d v1.21.1

pveworker0-k8worker2 Ready <none> 55d v1.21.1
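
For scripting against that output, here is a minimal sketch that counts how many nodes report `Ready`. The function name `count_ready_nodes` is hypothetical, and it assumes the default table layout shown above, with `STATUS` in the second column.

```bash
#!/bin/bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# how many nodes have a STATUS of exactly "Ready".
count_ready_nodes() {
  # NR > 1 skips the header row; n + 0 prints 0 when nothing matched.
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

Piping `kubectl --kubeconfig .kube/config get nodes` into `count_ready_nodes` for the cluster above should print `4`, which makes for an easy check at the end of a deployment run.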