Blog Refactor - Infra

To be honest, this blog has lived longer than I thought it would, and I'm sort of proud that I've kept it up as a place to document my security- and infrastructure-related personal projects. Lately, however, I've been trying to reduce my liabilities, and this blog is one of them. Even if it doesn't cost much, it isn't producing anything for me, so it's a liability: the shop I added hasn't made a single sale, and the $10 in donations over the last year is all it has brought in. So, like a good entrepreneur, I need to cut costs and pivot to capture some value. I'll be doing two main things:

- Move the site to Google Firebase and keep it within the free tier so I'm not paying any hosting costs.

- Rebrand the site with high-level categories that match the different reasons I write content. While doing this, I'd like to explore who might find value in this content and be thoughtful about which problems I can actually solve for that person under each section.

Moving to free hosting

I'm going to do this first so I can stop hemorrhaging money. All I'll be spending money on is the domain name, unless I exceed the following limits:

- Cloud Run
  - First 180,000 vCPU-seconds free per month
  - First 360,000 GiB-seconds free per month
  - 2 million requests free per month

- Container Registry
  - No cost up to 500 MB of storage, then $0.10/GB/month

I should therefore do the following:

- Move static content out of the container image and into Firebase Hosting storage

- Take the opportunity to upgrade to the latest Golang image and fix whatever build issues arise

- Refactor CI to push container images to Google Container Registry

- Deploy the container image to Cloud Run

- Write a Firebase Hosting path spec for the static content

- Write a path rewrite that sends the root to the container hosted on Cloud Run

- Add a policy to the image registry that deletes old images when storage gets close to 500 MB

- Profit

Static Content

Currently, all static content is copied into the image by the Dockerfile and served by the Go app. When moving to Firebase, I simply placed my entire /static folder into /public and modified the static handler so that, when running locally, it strips /static from requests and serves files from /public/static. In the cloud the handler never does anything, because Firebase Hosting serves requests for /static straight from /public before they ever reach the container.

This quickly and easily solves the storage issue of having images inside the Docker container.

Upgrading

I Upgraded my system with:

```
choco upgrade golang -y
```

After that, the blog ran without any errors. Good job, Google, we like backward compatibility. This is why I love Golang <3.

I bumped both stages of my Docker image to the latest golang and alpine base images and ran a build. This time I got the following error:

```
=> ERROR [build 6/6] RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -ldflags '"-X main.version=${TAG}" -extldflags "-static"' -o main .   0.3s
------
 > [build 6/6] RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -ldflags '"-X main.version=${TAG}" -extldflags "-static"' -o main .:
#12 0.277 invalid value "\"-X main.version=${TAG}\" -extldflags \"-static\"" for flag -ldflags: parameter may not start with quote character "
#12 0.277 usage: go build [-o output] [build flags] [packages]
#12 0.277 Run 'go help build' for details.
```

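I changed the RUN line to `RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -ldflags '-X main.version=${TAG}' -o main .` and it was building fine again. One catch: Docker doesn't expand variables in `RUN` itself, the shell does, and the shell leaves `${TAG}` untouched inside single quotes, so that binary reports the literal string `${TAG}` as its version. Double quotes fix it (assuming `TAG` is declared with `ARG`): `RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -ldflags "-X main.version=${TAG}" -o main .`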
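For `-X main.version=...` to have any effect, package `main` needs a package-level string variable named `version`; the linker only sets string variables declared uninitialized or initialized to a constant string. A minimal sketch of what that looks like (the `"dev"` default is my assumption):

```go
package main

import "fmt"

// version is replaced at link time by
//
//	go build -ldflags "-X main.version=<tag>"
//
// A plain `go build` or `go run` leaves the default below in place.
var version = "dev"

func main() {
	fmt.Println("version:", version)
}
```

Building with `go build -ldflags "-X main.version=v1.2.3" -o main .` and running `./main` prints `version: v1.2.3`; without the flag it prints `version: dev`.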

Writing images to Google Container Registry

This process has flip-flopped over the years. I'm now moving back to building my images on-prem with Drone and just pushing them to the remote registry, rather than using GitHub Actions to perform the build and push it to DigitalOcean.

So, my drone.yml becomes:

```yaml
steps:
- name: build-push
  image: plugins/gcr
  settings:
    repo: gcr.io/salmonsec/salmonsec-go
    tags: latest
    json_key:
      from_secret: GOOGLE_SERVICE_ACCOUNT_KEY
```

Deploy image to Cloud Run

According to the Google docs, deploying to Cloud Run looks like this:

```shell
gcloud run deploy --image gcr.io/PROJECT_ID/helloworld
```

To test it first, I deployed my image from my local CLI:

```shell
# Auth via service account
gcloud auth activate-service-account drone-ci-on-prem@salmonsec.iam.gserviceaccount.com --key-file=key.json --project=salmonsec

# Deploy
gcloud run deploy --image gcr.io/salmonsec/salmonsec-go:latest
```

That seemed to work fine, so the CI steps for this look like:

```yaml
- name: deploy-service
  image: google/cloud-sdk:alpine
  commands:
  - echo '$${GOOGLE_SERVICE_ACCOUNT_KEY}' > key.json
  - gcloud auth activate-service-account drone-ci-on-prem@salmonsec.iam.gserviceaccount.com --key-file=key.json --project=salmonsec
  - gcloud run deploy --image gcr.io/salmonsec/salmonsec-go:latest --cpu=1 --ingress=internal --max-instances=3 --memory=128Mi --min-instances=1 --port=8080
  environment:
    GOOGLE_SERVICE_ACCOUNT_KEY:
      from_secret: GOOGLE_SERVICE_ACCOUNT_KEY

- name: deploy-assets
  image: andreysenov/firebase-tools
  commands:
  - alias firebase="firebase --token $FIREBASE_TOKEN"
  - firebase use salmonsec
  - firebase deploy --only hosting
  environment:
    # Capture token with `firebase login:ci`
    FIREBASE_TOKEN:
      from_secret: FIREBASE_TOKEN
```

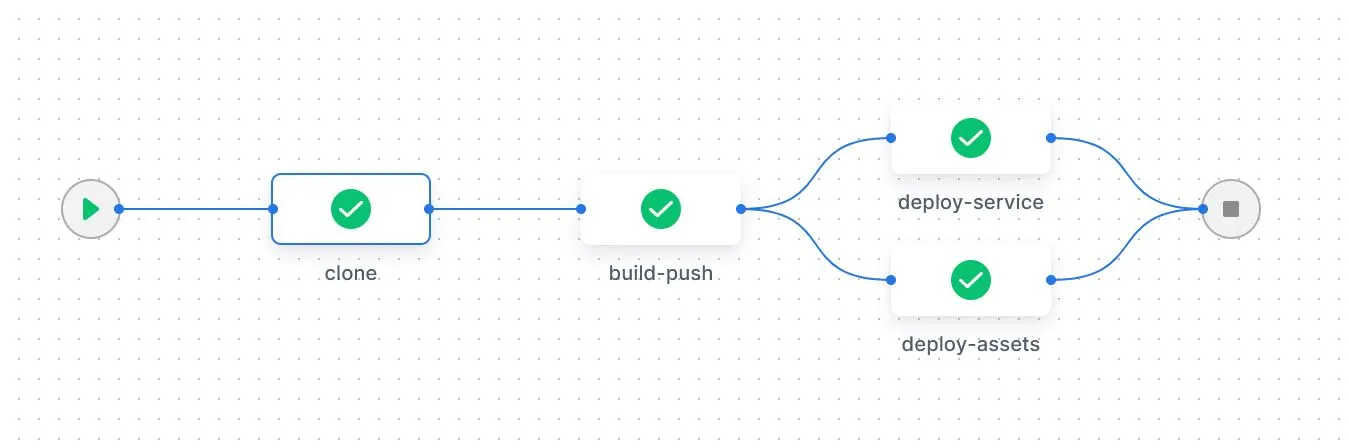

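I then made a few small changes: both deploy steps now run in parallel once the build has finished, everything only runs on master, and the deploy command gained a service name, region, `--ingress=all` and `--allow-unauthenticated`. The final result was: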

```yaml
---
kind: pipeline
name: salmonsec
type: docker

steps:
- name: build-push
  image: plugins/gcr
  settings:
    repo: gcr.io/salmonsec/salmonsec-go
    tags: latest
    json_key:
      from_secret: GOOGLE_SERVICE_ACCOUNT_KEY
  when:
    branch:
    - master

- name: deploy-service
  image: google/cloud-sdk:alpine
  commands:
  - echo $GOOGLE_SERVICE_ACCOUNT_KEY > key.json
  - gcloud auth activate-service-account drone-ci-on-prem@salmonsec.iam.gserviceaccount.com --key-file=key.json --project=salmonsec
  - gcloud run deploy salmonsec-go --image gcr.io/salmonsec/salmonsec-go:latest --ingress=all --cpu=1 --max-instances=3 --memory=128Mi --min-instances=1 --port=8080 --region=us-east4 --allow-unauthenticated
  environment:
    GOOGLE_SERVICE_ACCOUNT_KEY:
      from_secret: GOOGLE_SERVICE_ACCOUNT_KEY
  depends_on:
  - build-push
  when:
    branch:
    - master

- name: deploy-assets
  image: andreysenov/firebase-tools:11.12.0-node-lts-alpine
  commands:
  - alias firebase="firebase --token $FIREBASE_TOKEN"
  - firebase use salmonsec
  - firebase deploy --only hosting
  environment:
    # Capture token with `firebase login:ci`
    FIREBASE_TOKEN:
      from_secret: FIREBASE_TOKEN
  depends_on:
  - build-push
  when:
    branch:
    - master
```

Path spec for static hosting

The firebase.json for this project needs to do two things:

- Send any traffic for `/` to the backend on Cloud Run

- Serve any traffic for `/static` or `ads.txt` from the deployed Hosting content

If the router checks for static content in `public` first, then this should work:

```json
{
  "hosting": {
    "public": "public",
    "ignore": [
      "firebase.json",
      "**/.*",
      "**/node_modules/**"
    ],
    "rewrites": [
      {
        "source": "/",
        "run": {
          "serviceId": "salmonsec-go",
          "region": "us-east4"
        }
      }
    ]
  }
}
```
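Everything hinges on the "router checks `public` first" assumption. Below is a simplified Go model of that ordering: an existing file wins, and otherwise a matching rewrite sends the request to Cloud Run. It covers only the two rewrite patterns relevant here (an exact path and the `**` catch-all), and it is a sketch of the behaviour, not Firebase's actual matcher:

```go
package main

import "fmt"

// destination reports where a request ends up under a simplified model of
// Firebase Hosting: existing files under public/ are served first, then the
// rewrite is tried. Only exact-path and "**" rewrite sources are modelled.
func destination(reqPath string, publicFiles map[string]bool, rewriteSource string) string {
	if publicFiles[reqPath] {
		return "hosting"
	}
	if rewriteSource == "**" || rewriteSource == reqPath {
		return "cloud-run"
	}
	return "404"
}

func main() {
	// Hypothetical contents of public/.
	files := map[string]bool{"/static/css/site.css": true, "/ads.txt": true}
	for _, p := range []string{"/", "/static/css/site.css", "/ads.txt", "/posts/example"} {
		fmt.Printf("%-22s %s\n", p, destination(p, files, "/"))
	}
}
```

With `"source": "/"`, a path like the hypothetical `/posts/example` matches neither a file nor the rewrite, so it would get a 404. If the Go app serves pages beyond `/`, a broader source such as `"**"` would be needed, and static files would still win because of the ordering.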

Alerting

Unlike my previous deployment, which had a fixed cost, this infrastructure can and will autoscale, increasing the cost when needed. That's great, but I want to stay within the free tiers if at all possible, at least until I'm getting enough traffic to actually justify it. Google does give you credits, which is cool, but they're going to expire one day and my card will start getting charged. So all I did here was set up an alert that warns me if there is ANY spend on the account (regardless of credits), so I know to investigate why.
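It's also worth checking the deploy flags against the free-tier numbers from the start of this post. Below is a back-of-the-envelope Go sketch. It assumes the worst case, where an instance is billed for every second of a 30-day month. That is my assumption: Cloud Run can bill idle minimum instances differently, so the pricing page is the authority here.

```go
package main

import "fmt"

// Monthly Cloud Run free-tier allowances quoted earlier in this post.
const (
	freeVCPUSeconds = 180_000
	freeGiBSeconds  = 360_000
	freeRequests    = 2_000_000
)

// usage holds billable resources for one month.
type usage struct {
	VCPUSeconds float64
	GiBSeconds  float64
	Requests    float64
}

// alwaysOn estimates usage for instances billed for every second of the given
// number of days (a worst-case assumption, not Cloud Run's actual billing model).
func alwaysOn(instances, vcpu, memGiB, days float64) usage {
	secs := instances * days * 24 * 60 * 60
	return usage{VCPUSeconds: secs * vcpu, GiBSeconds: secs * memGiB}
}

// overFreeTier lists which allowances the given usage would exceed.
func overFreeTier(u usage) []string {
	var over []string
	if u.VCPUSeconds > freeVCPUSeconds {
		over = append(over, "vCPU-seconds")
	}
	if u.GiBSeconds > freeGiBSeconds {
		over = append(over, "GiB-seconds")
	}
	if u.Requests > freeRequests {
		over = append(over, "requests")
	}
	return over
}

func main() {
	// --min-instances=1 --cpu=1 --memory=128Mi (128 MiB = 0.125 GiB), 30-day month.
	u := alwaysOn(1, 1, 0.125, 30)
	fmt.Printf("vCPU-s: %.0f, GiB-s: %.0f, over: %v\n", u.VCPUSeconds, u.GiBSeconds, overFreeTier(u))
}
```

This prints `vCPU-s: 2592000, GiB-s: 324000, over: [vCPU-seconds]`. In that worst case, memory stays under its allowance, but 180,000 free vCPU-seconds only cover 50 hours of one always-billed vCPU. That's exactly the kind of spend the alert is there to catch.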