What is Replicated?

Replicated allows software vendors to deploy into customer-managed Kubernetes environments. Their value proposition is helping vendors who cannot deploy on-premises today get there, which should open a wider market for their products.

I'm looking to solve this problem for myself, so I figured I'd document my exploration of their product in case it helps someone else.

Feature Claims

Let's start by going through their landing page and reading through the marketing material. This should offer a nice window into how they've materialized the problem statement, and how they're positioning themselves to solve it in the market.

Replicated's landing page pitches 5 pillars of the product: Build, Sell, Install, Support and Secure.

Build:

As a product manager, engineer, developer, or CTO, you’re concerned about building the best product possible. You want to get your new feature innovations to market to delight your customers and gain competitive advantage. You need to deliver your app releases securely wherever customers want to run them, make sure they run smoothly, and keep them updated to the latest versions.

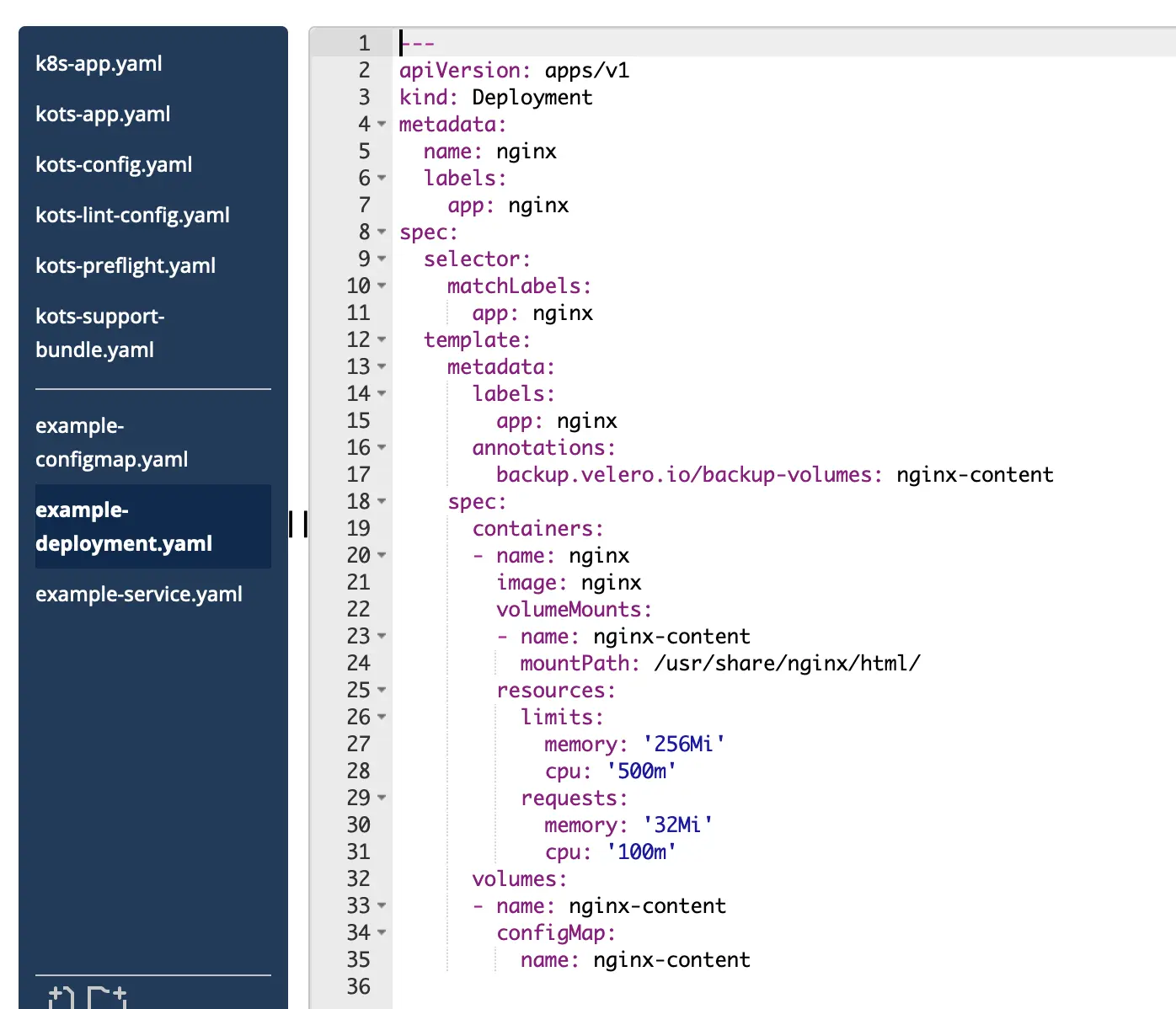

- They generate k8s manifests and a helm chart for your application

- They enforce a version schema and assign release channels to each customer

- They put in place feature gates for each release channel, allowing tiers for customers

- They enable automatic updates and patching

- They alert on CVEs

- Allow customers to control their data in their own environments

- Spin up test environments on cloud quickly for comprehensive testing

- Capture some basic product analytics

Sell:

As a product manager, sales leader, CFO or chief revenue officer, you’re concerned about efficiently driving more revenue and increasing your addressable market. You want to meet your customers where they are and stop turning away business in on-prem, VPC, or air-gapped environments. You need to keep your sales engineers and solutions architects selling, not struggling with slow installs and over-long POCs.

- Deploy to kubernetes

- They support air-gapped installations

- They support deploying to On Premises and VPC customers

- Reduce installation and debug time

- They offer trial licensing and feature gating

Install:

As the leader of field engineers or developers, you’re concerned about efficiently driving more revenue and increasing your addressable market. You want to meet your customers where they are and stop turning away business in on-prem, VPC, or air-gapped environments. You need to keep your sales engineers and solutions architects selling, not struggling with slow installs and over-long Proofs of Concept (POCs).

- With kURL, a tool they contribute to, you can quickly install Kubernetes in a customer environment

- They have a cluster checklist and helm chart to do installs

- They make dealing with the k8s part as painless as possible

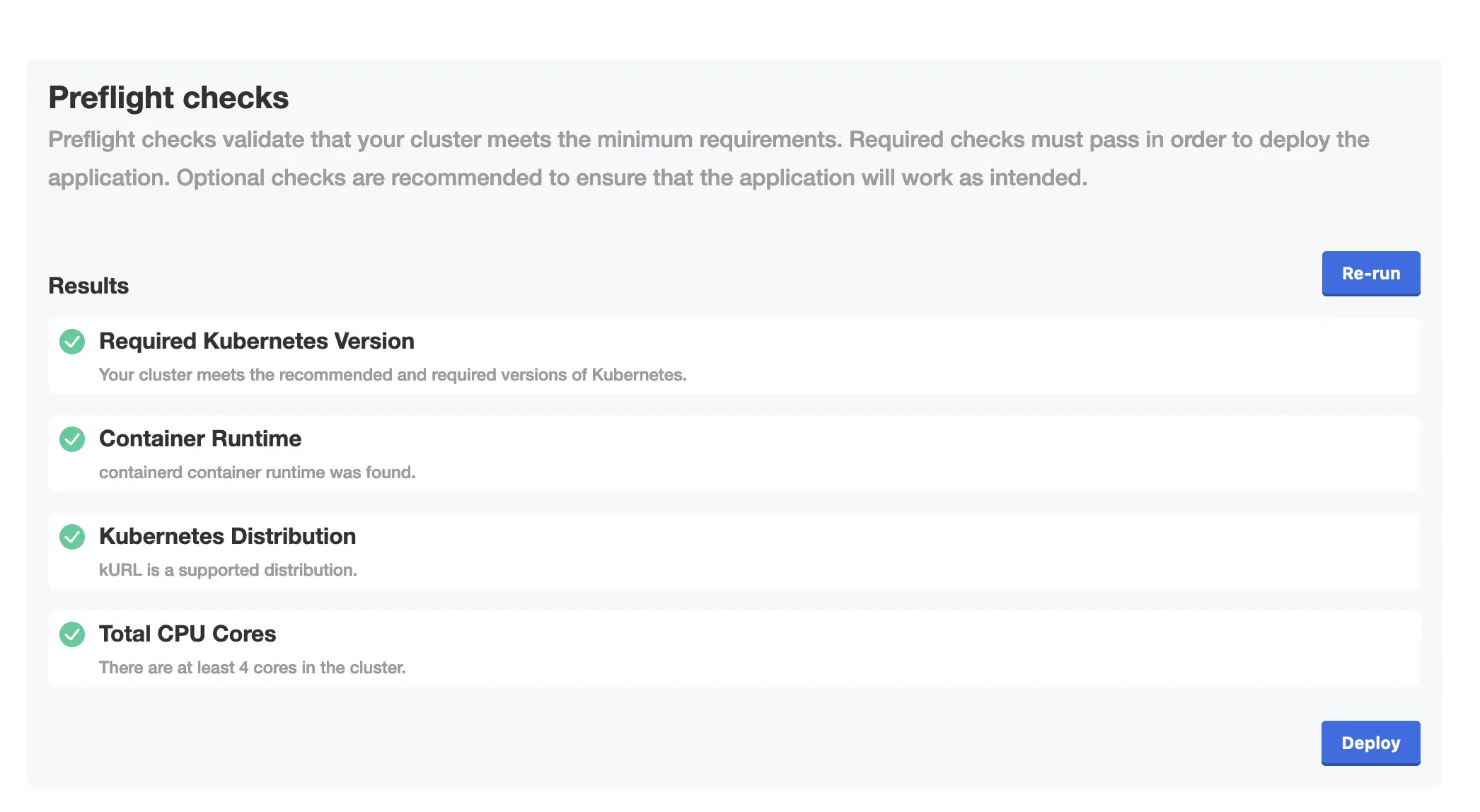

- Preflight checks, cluster creation, GUI, troubleshooting and reporting with Helm

Support:

As a leader of technical support and customer success teams, you’re focused on resolving issues with your app, and wherever possible, making sure they have minimal impact. You want your teams to scale their efforts and solve problems faster, if not avoid them entirely. You need tooling that helps your team capture, analyze, and codify solutions to common issues.

- They somehow 'reduce back and forth' to get debug info

- They can export log bundles

- They automate many k8s tasks so your staff shouldn't need Kubernetes knowledge

- Reproduce issues by creating similar infra quickly

Secure:

As a CISO or security practitioner, you’re concerned about delivering pervasive protection to prevent attacks and exposure of data. You want to help your customers lock down access and keep up-to-date on patches for CVEs. You need to give your customers control of their data, and distribute your app securely to environments they can control, even air-gapped locations.

- Automatic updates are possible

- Customer can host their own DB

- Customer can run Air Gapped

- Replicated becomes a branded part of the product to help the sales process

Summary

Without getting into the technical details yet, here is what I expect the product is doing:

- They have a process and team for setting up a Kubernetes cluster in various environments

- They help package a containerized application into a Helm chart and host it for you

- They have the ability to toggle features per customer

- They run an operator like FluxCD in the cluster to enable automatic updates

- They have a portal for managing the cluster remotely with features like exporting log bundles, viewing uptime, viewing usage and performing Kubernetes actions through a GUI

Everything else is a reiteration of these five features. We'll see at the end how correct this was.

Problem Statements

Replicated's site has a large number of problems described. These offer insight into how Replicated views the market and how they're positioning their product. Here is a list of all the problems they state under each pillar of their product.

Build:

- Building your own app installer is an engineering quagmire

- Slow feature innovation and time to market

- Managing features and editions

- Keeping apps secure is hard

- Need to reduce attack surface area

- Runbooks don’t scale, are extremely complex, slow and error-prone

- Provisioning test environments

- Lack of visibility into app lifecycle

Sell:

- Missed revenue for modern K8s and cloud opportunities

- Can’t deliver to security-sensitive air-gapped locations

- Inability to deliver to on-prem and VPC customers

- SEs and SAs troubleshooting, not selling

- Managing licensing and entitlements

Install:

- Delays because your end customers don't have a K8s cluster available for new apps

- POCs and production installs in customer environments have a low success rate

- Too much engineering effort to troubleshoot difficult installs

- Insufficient K8s skills with your field staff or in your customers’ teams

- Distributing to many flavors of K8s, operating systems, and clouds

- Helm alone isn’t sufficient

Support:

- Customer support and success takes too much time and effort, and customers get frustrated

- Not meeting SLAs makes customers unhappy, after about 4 hours things start to get political

- Customers are running old versions of your software and having issues with them

- It’s difficult and expensive to hire and/or retain support staff with deep Kubernetes expertise

- Difficulty Reproducing Customer Issues

Secure:

- Customers are running old versions of your software and are at risk

- Your customers need help reducing attack surface area

- Can’t deliver to security-sensitive air-gapped locations

- Security audits by prospective customers slow down sales

Summary

Development and Distribution:

- Complex app installer development.

- Slow innovation and time-to-market.

- Challenges in managing features, security, versions, and entitlements.

- Inefficient runbooks, provisioning, and troubleshooting.

- Lack of visibility into app lifecycle.

- Missed revenue in K8s and cloud markets.

- Delays and low success rates in installations.

- Insufficient K8s expertise and distribution challenges.

Customer Support and Security:

- Time-consuming support and SLA challenges.

- Risk from outdated software versions.

- Need to reduce attack surface.

- Constraints in security-sensitive environments.

- Sales slowdown due to security audits.

So, what do we think Replicated sees in the market? I think they see all the same problems any Continuous Delivery company sees, as well as gaps in the security-sensitive and Kubernetes markets. This certainly seems like a compelling worldview.

I do find it interesting that they point out multiple times that lack of knowledge of Kubernetes is a problem. I will circle back to this at the end and question if they've solved this issue.

I also find the marketing content on security lackluster, although it usually is. Marketing teams generally don't do a great job of describing security-oriented features, for whatever reason.

Technical Docs

Their resources dropdown has the following headings:

Architecture Tab

The Architecture tab seems like it would contain everything I want to know! Yet upon actually reading it, it's a guide to how a vendor should architect their software when adopting Replicated. In hindsight this is more useful for their customers, so I shouldn't be surprised.

The recommendations boil down to:

- Follow the 12 Factor App approach

- Have a basic CI/CD setup for your organization

- Package your application as a Helm chart

- Define a clear and concise installation and upgrade guide

I see this as a potential deviation from the promises made by the marketing department. It's starting to sound like they're not abstracting all that much from working with Kubernetes.

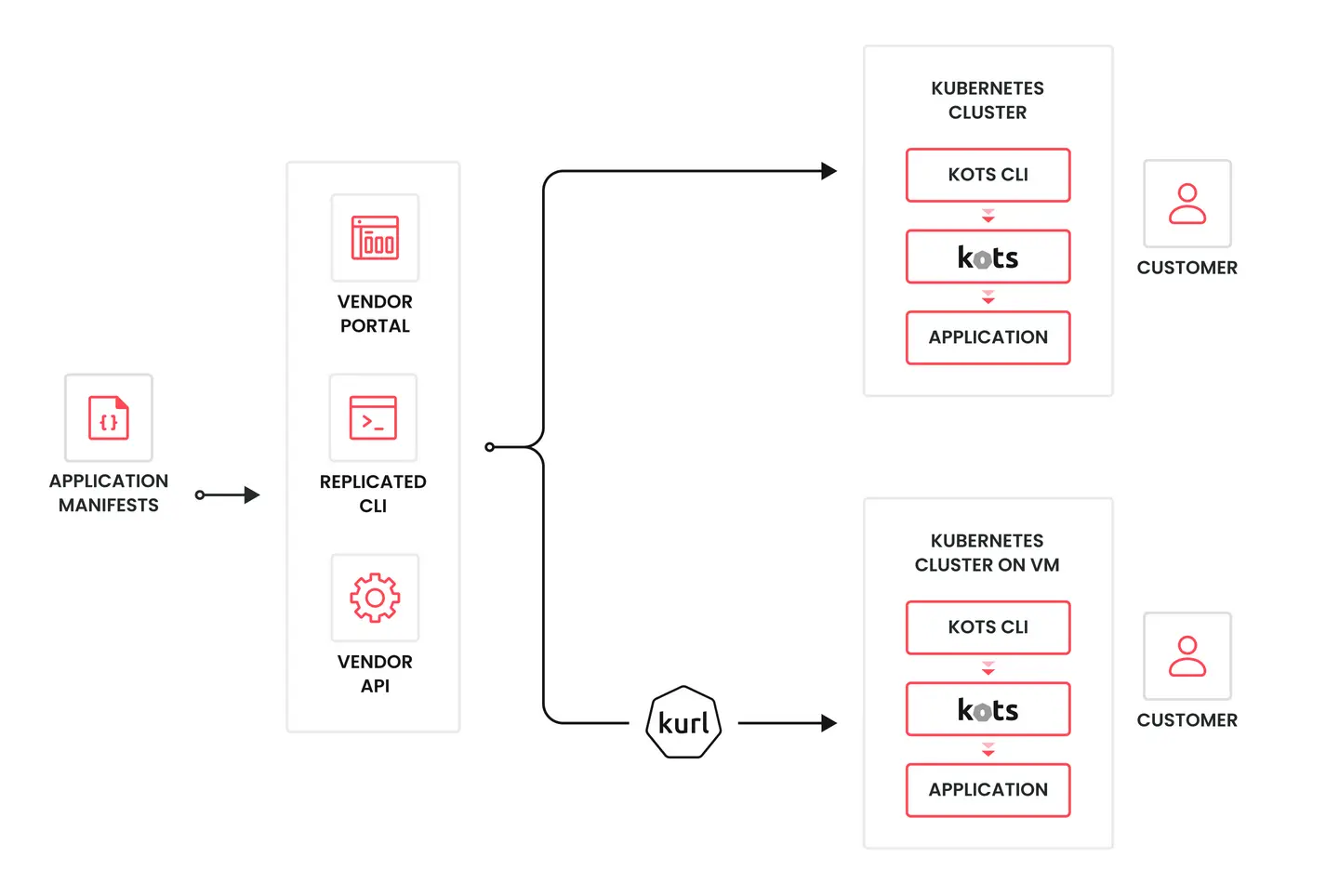

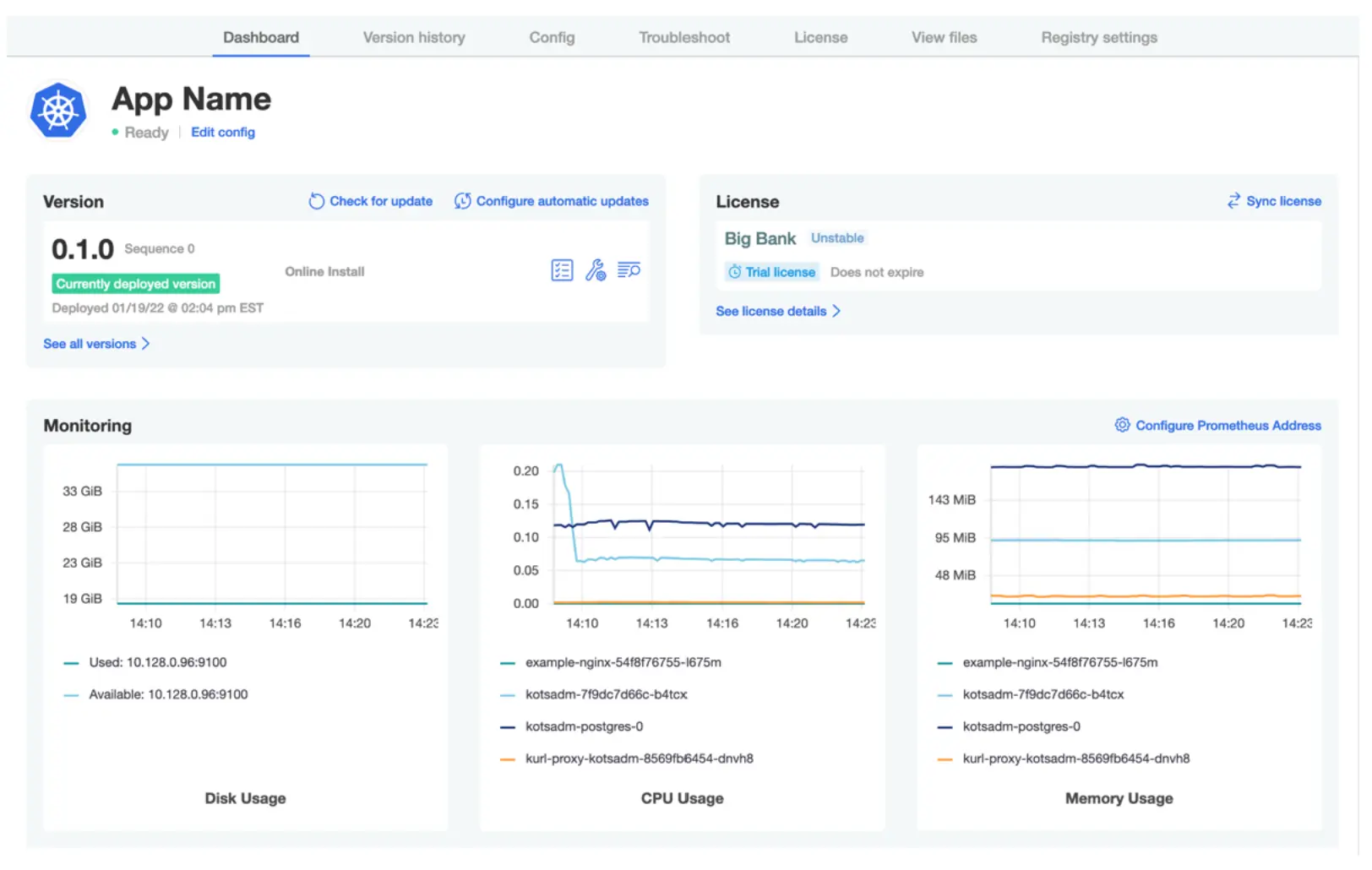

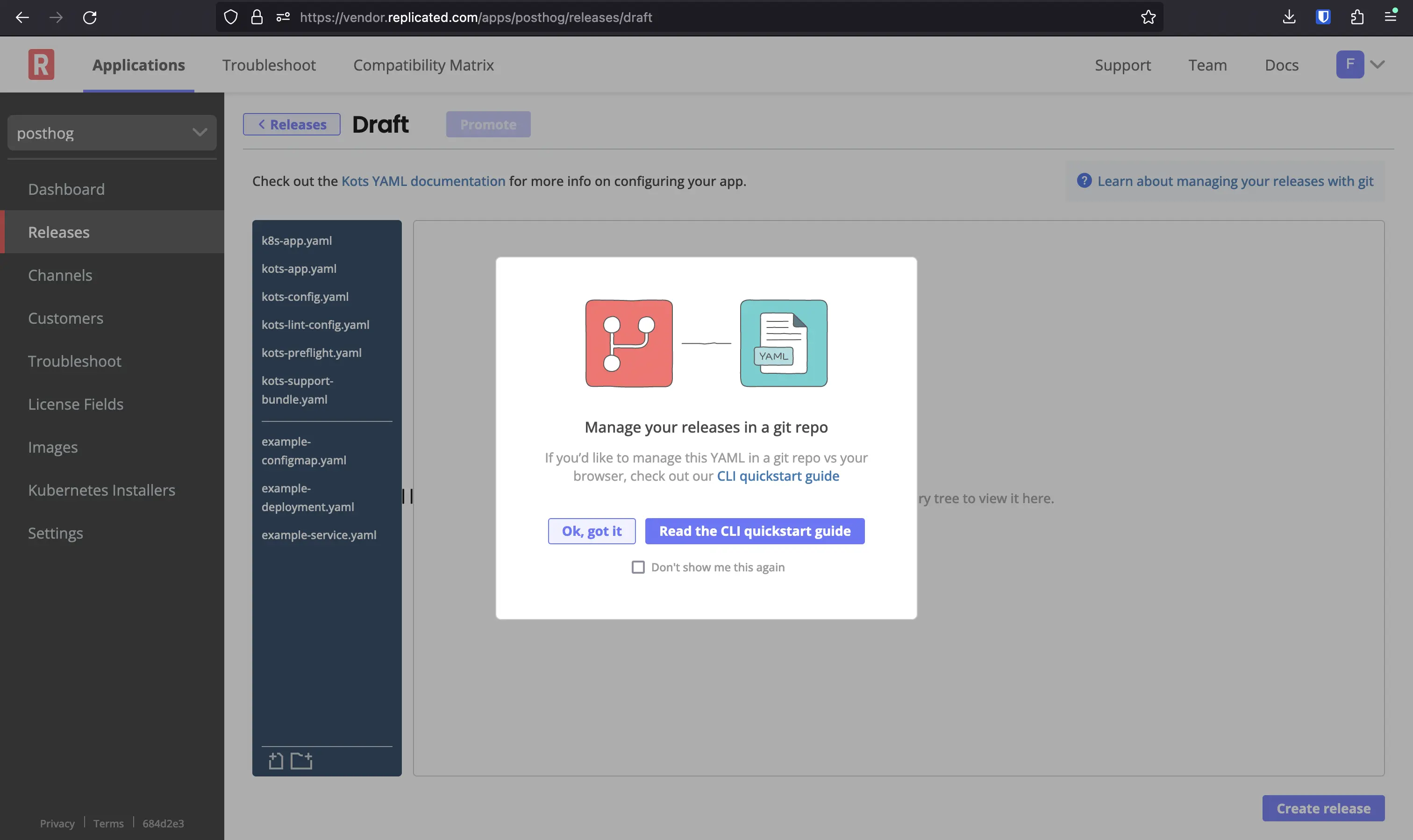

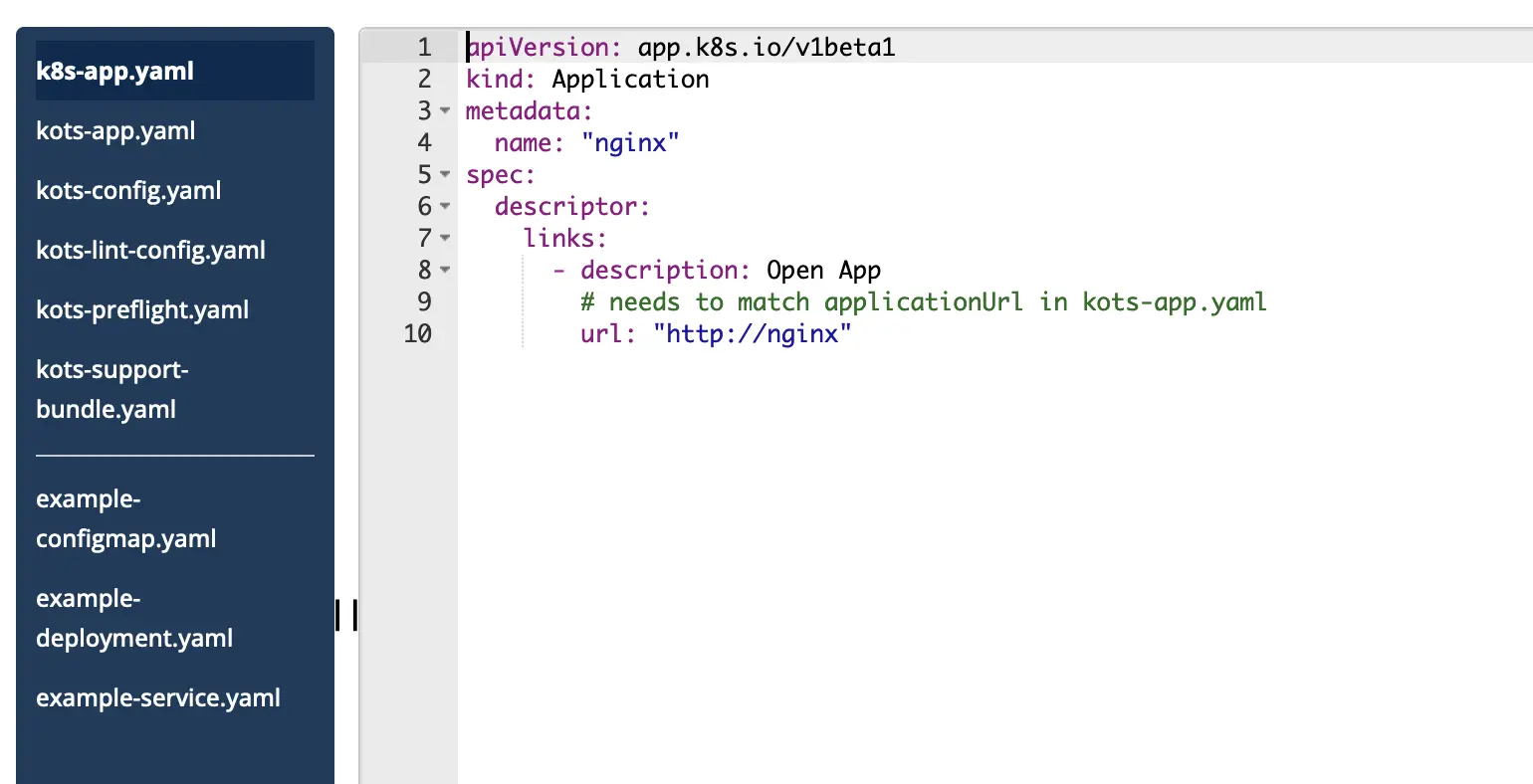

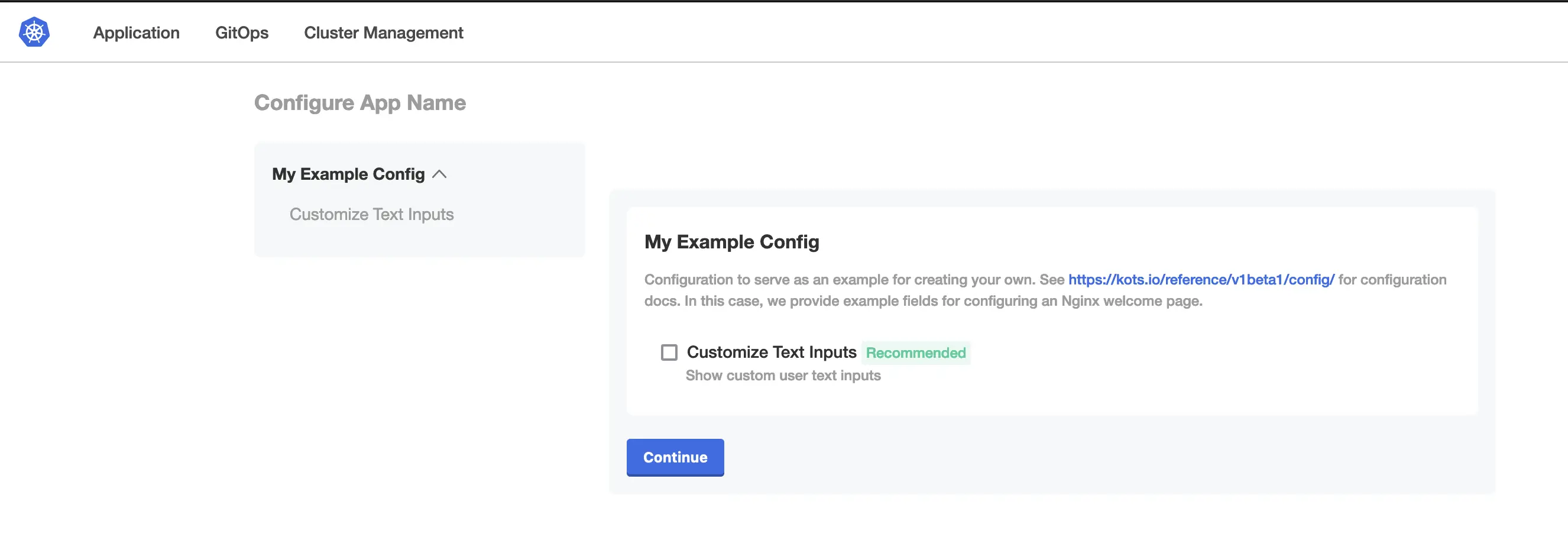

Also, it's now clear that the core of Replicated's product is KOTS. This is the agent that sits in the cluster to deploy Helm charts, export data, perform license checks and automate updates.

Documentation Tab

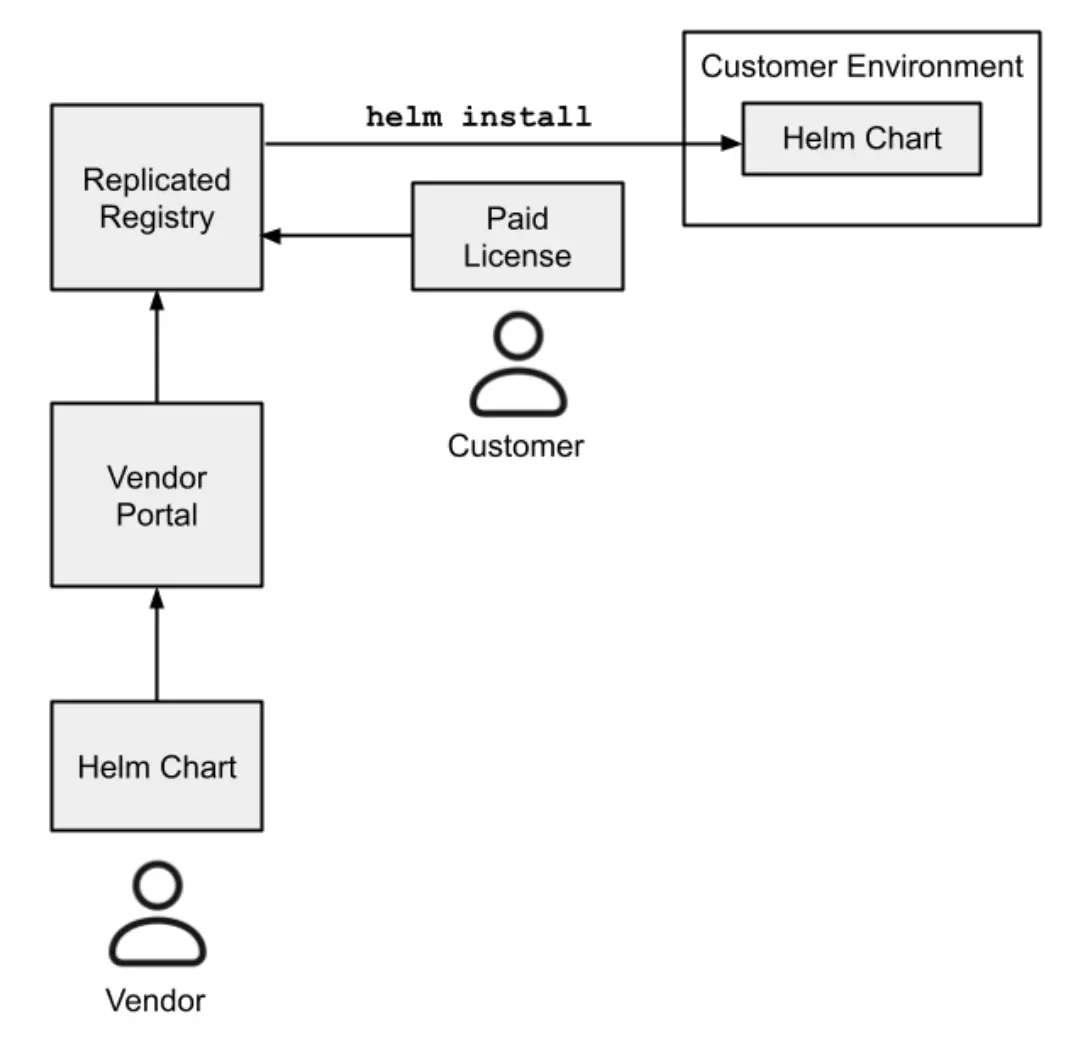

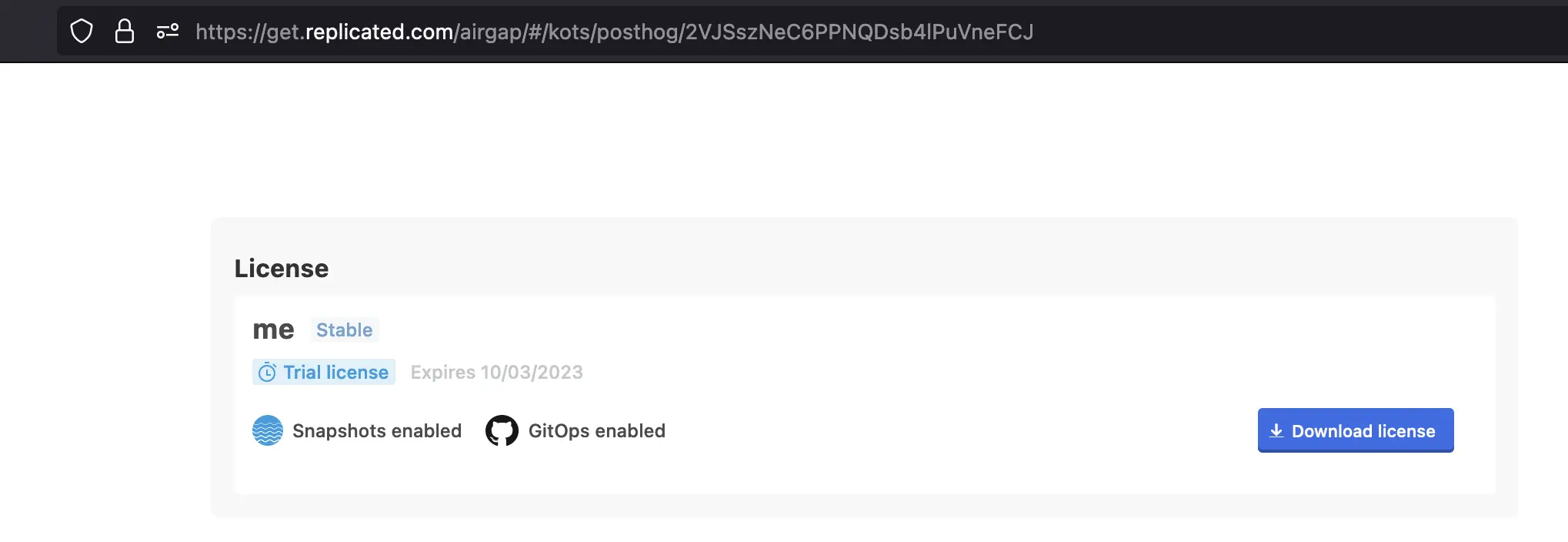

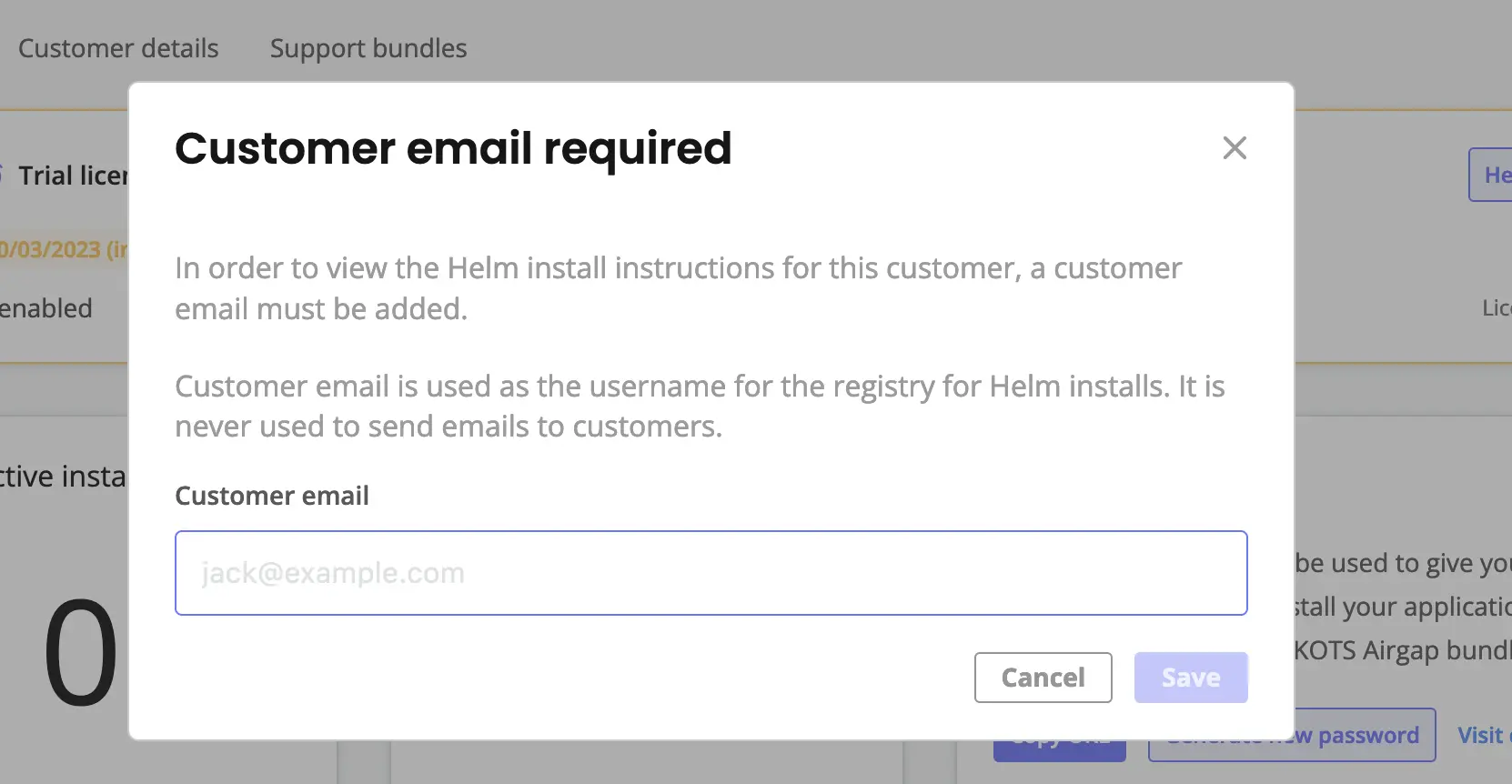

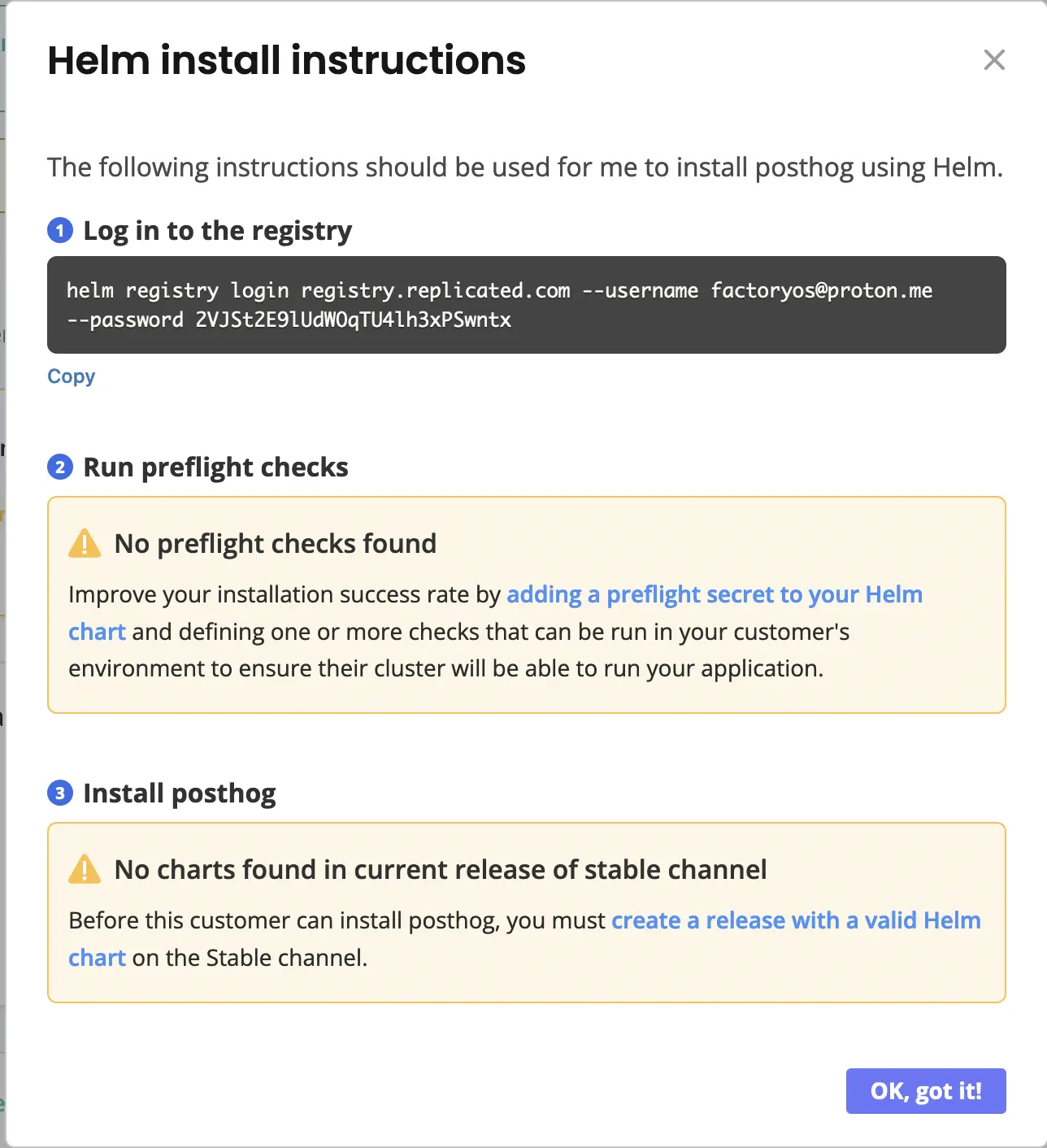

The first section is 'Distribute and Support'. They support two methods: vanilla Helm or KOTS. KOTS also delivers a Helm chart, but provides some more features alongside it and makes it slightly easier for a customer to connect to the Helm registry.

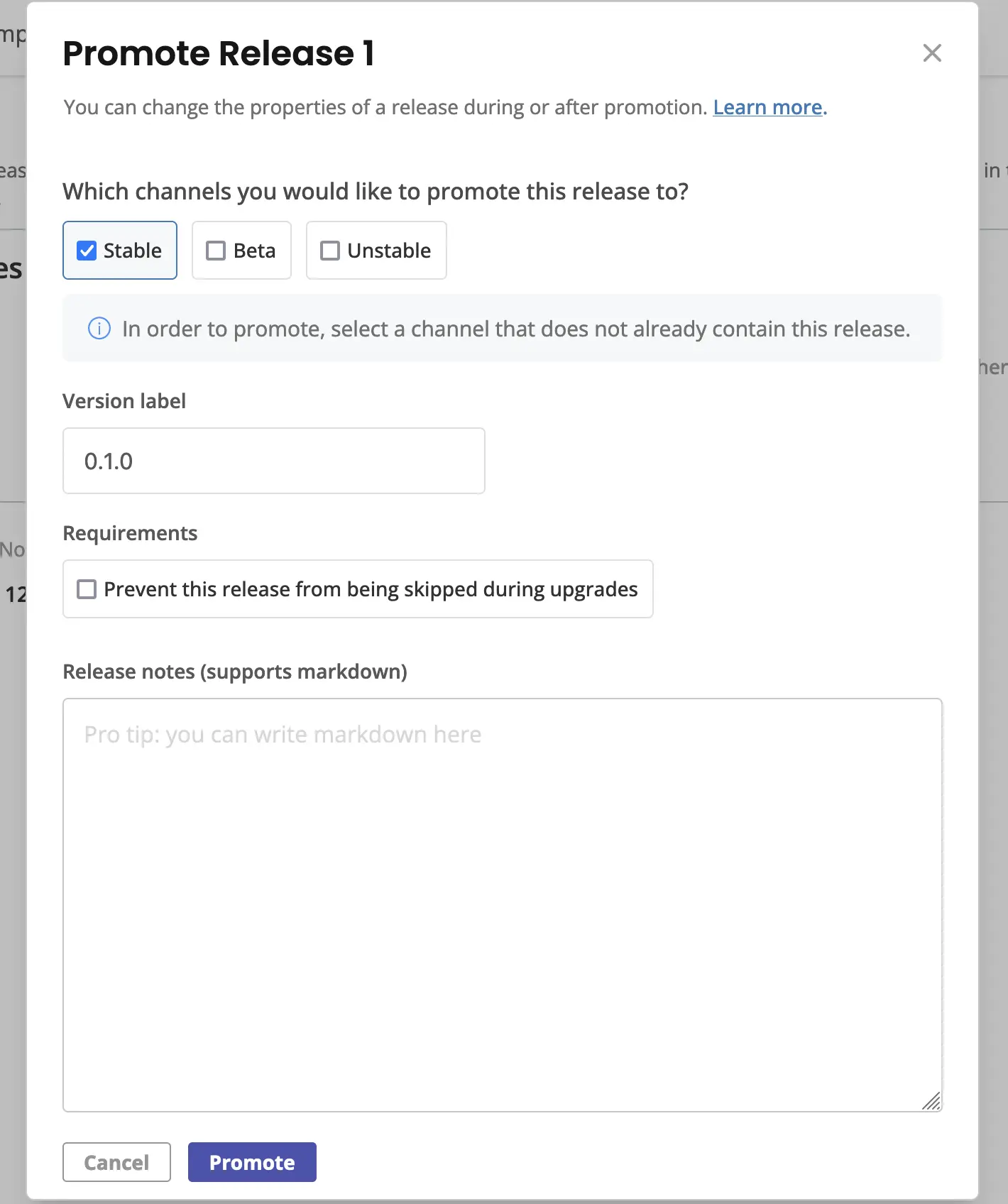

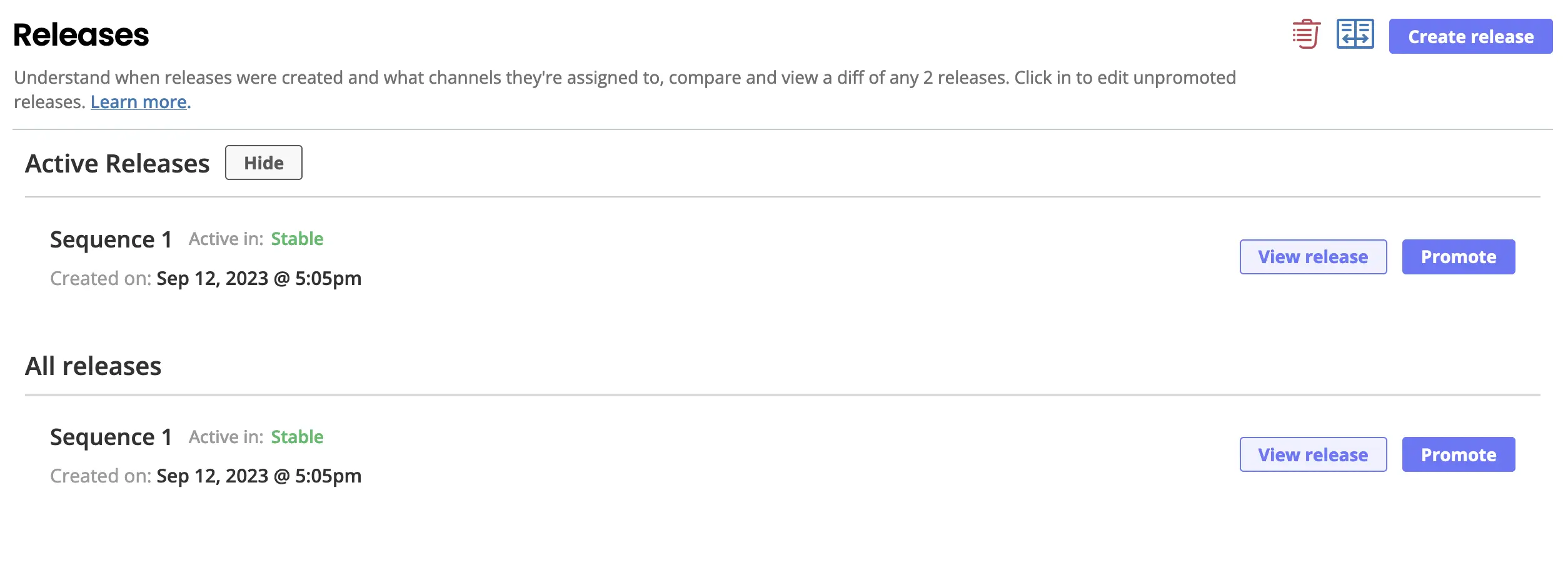

This diagram shows that a Vendor can upload their Helm Chart to the Vendor Portal, which Replicated hosts in their OCI Registry. Then an end customer can buy a license, which essentially grants access to the registry. The customer can then use helm install to install the Helm chart into their existing Kubernetes cluster.
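As a rough sketch of the customer side of that flow, the function below only prints the two Helm commands (registry login, then install from an OCI URL) so they can be reviewed before anything runs. The registry host, the app/channel/chart path layout, and every argument value are placeholders I made up for illustration; the real values would come from the Vendor Portal.

```shell
#!/usr/bin/env bash
# Dry-run sketch of the customer-side Helm flow: nothing is executed, the
# commands are only printed.
# ASSUMPTIONS: the registry host, the <app>/<channel>/<chart> path layout and
# all argument values are illustrative placeholders, not Replicated's.

print_helm_install_plan() {
    local registry="$1" email="$2" app_slug="$3" channel="$4" chart="$5"

    # Step 1: authenticate against the OCI registry using the license.
    echo "helm registry login ${registry} --username ${email} --password \$LICENSE_ID"
    # Step 2: install the chart straight from the OCI registry.
    echo "helm install ${chart} oci://${registry}/${app_slug}/${channel}/${chart}"
}

print_helm_install_plan registry.example.com me@example.com my-app stable my-chart
```

Printing first keeps the license ID out of shell history until you decide to run the commands for real.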

There are limitations if you choose vanilla Helm instead of wrapping it in KOTS:

- No air gapped environments supported

- Backup and Restore not supported

- Admin console is not available

- Preflight checks that block installation are not available

Thus, I assume they would try to do most distributions with KOTS:

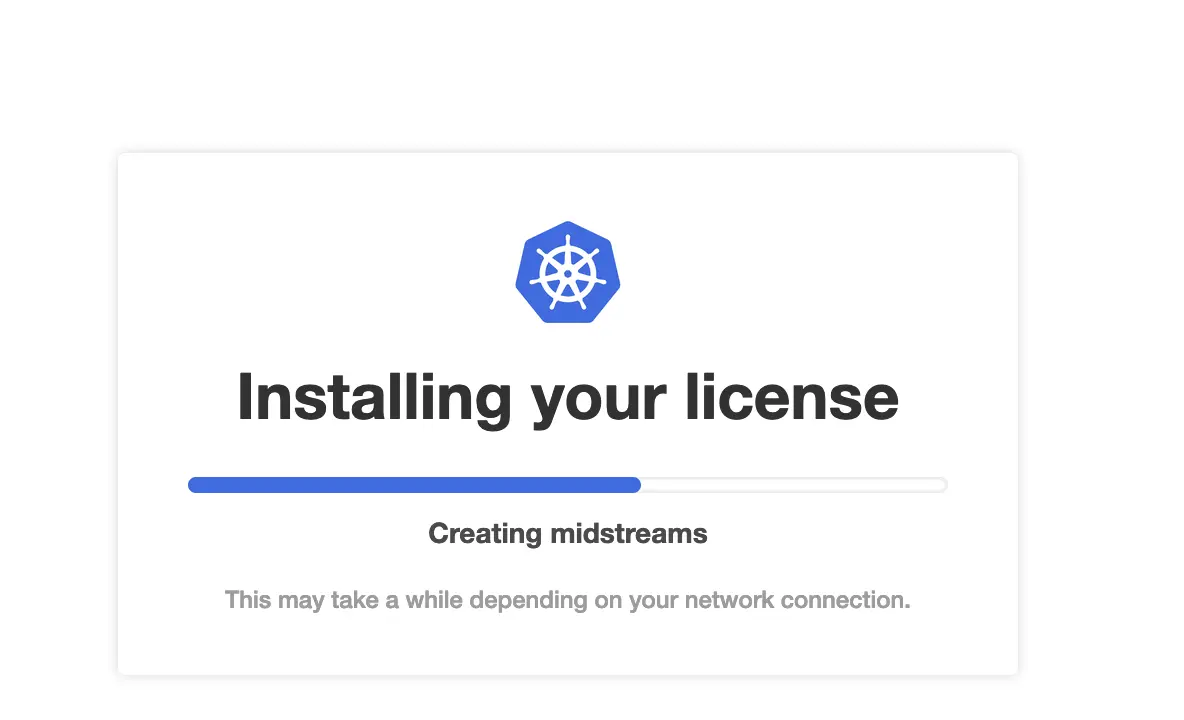

Like the Helm Approach, it all starts with the Vendor uploading their Helm chart and containers to the Replicated Vendor portal.

Then, if the customer does not already have a cluster, one is created on a VM using kURL.

Once they have a cluster, they install KOTS.

Finally, they can run a KOTS command to connect to Replicated's portal and deploy the Helm Chart.

Replicated has an SDK which is installed as a Helm chart alongside your application's Helm chart. It enables embedding Replicated features into your application, such as:

- operational telemetry

- custom metrics

- license entitlements at runtime

- update checks to alert customers of new versions.

I do like that the SDK is optional. You can start by getting your application running and then go through deeper integration if everything is stable.

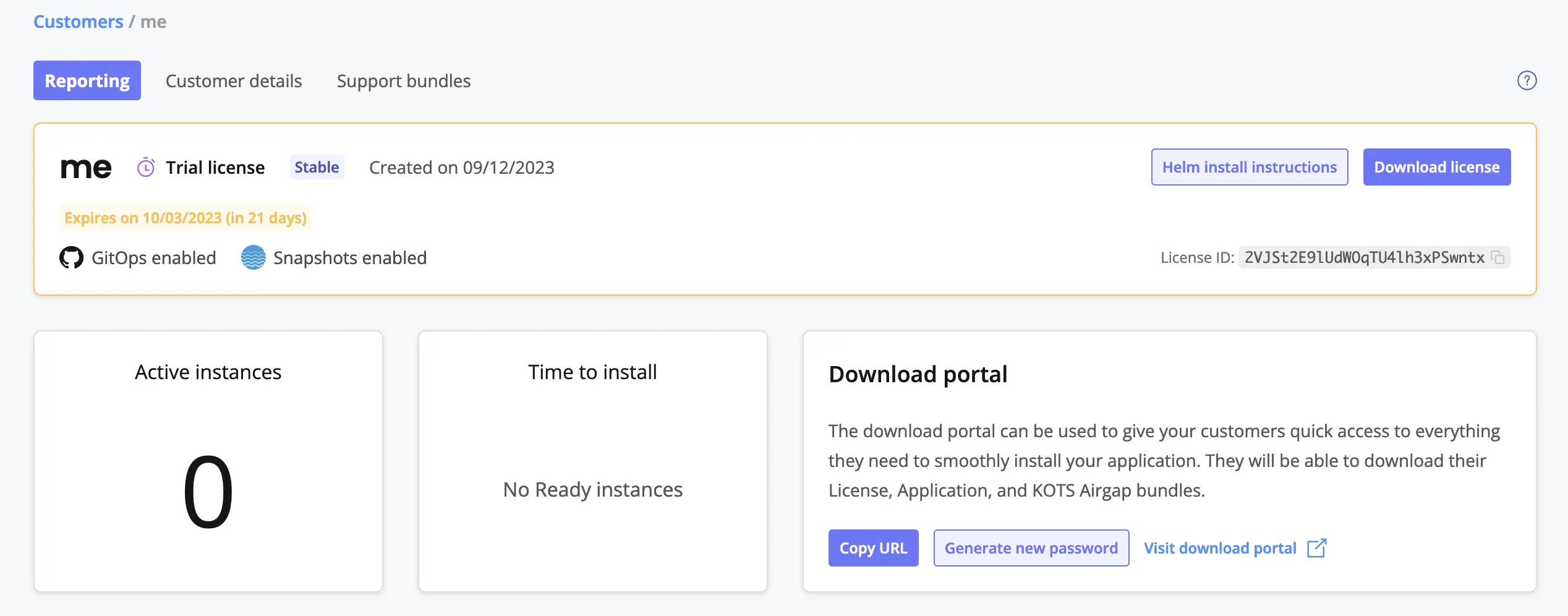

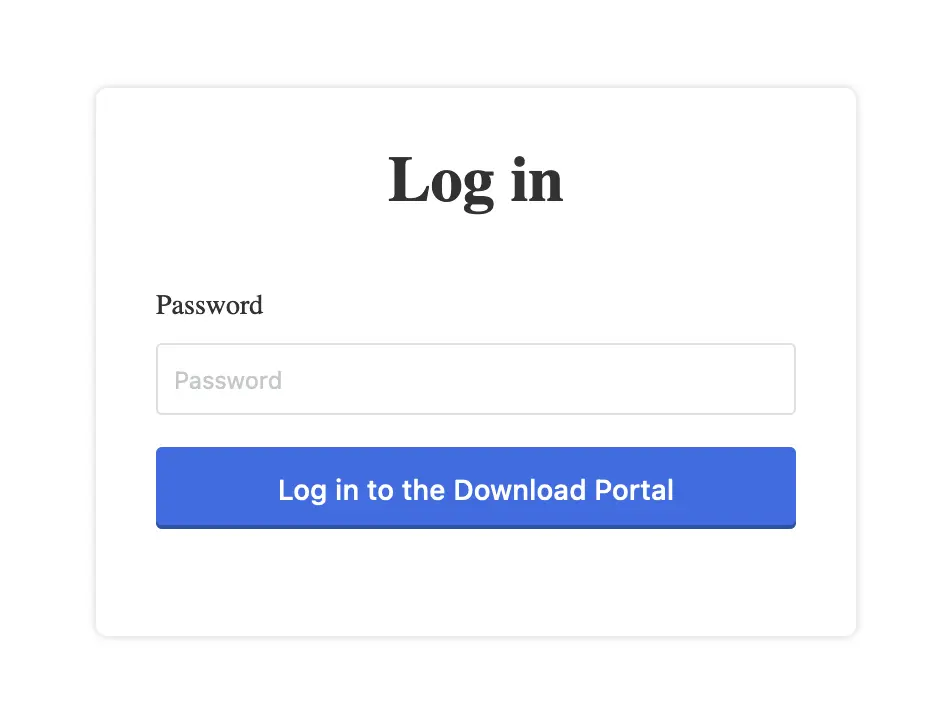

Vendor Portal

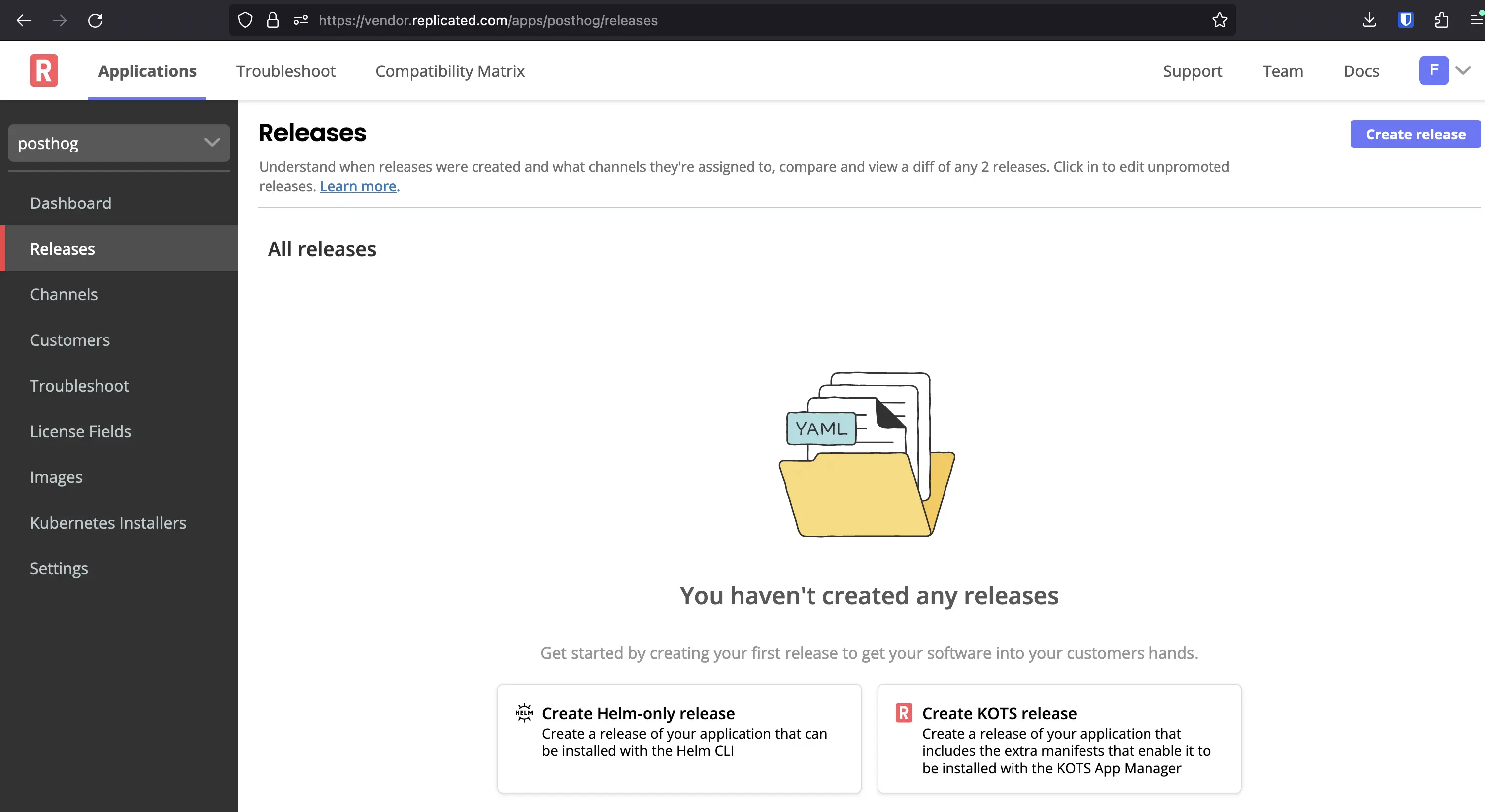

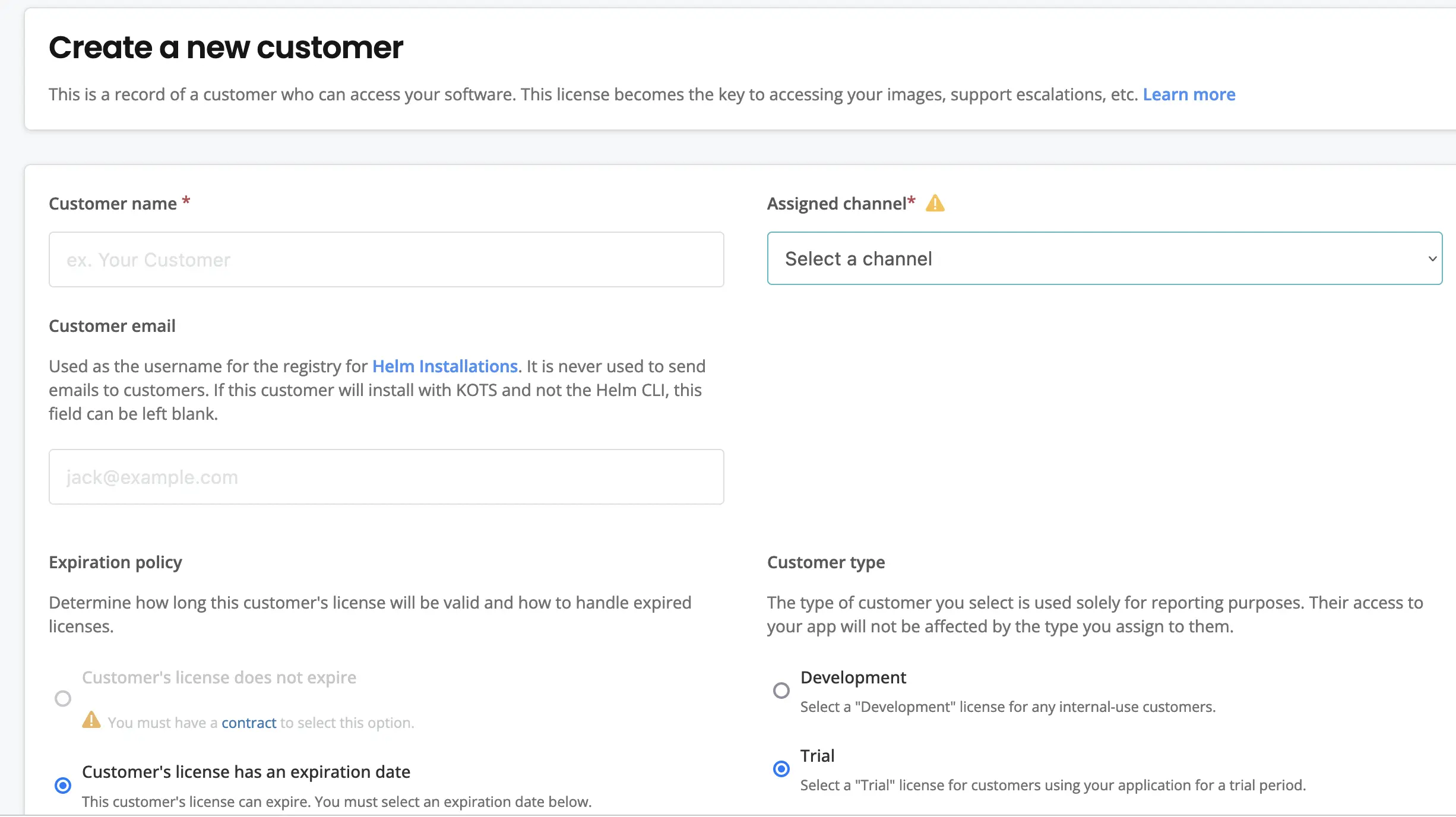

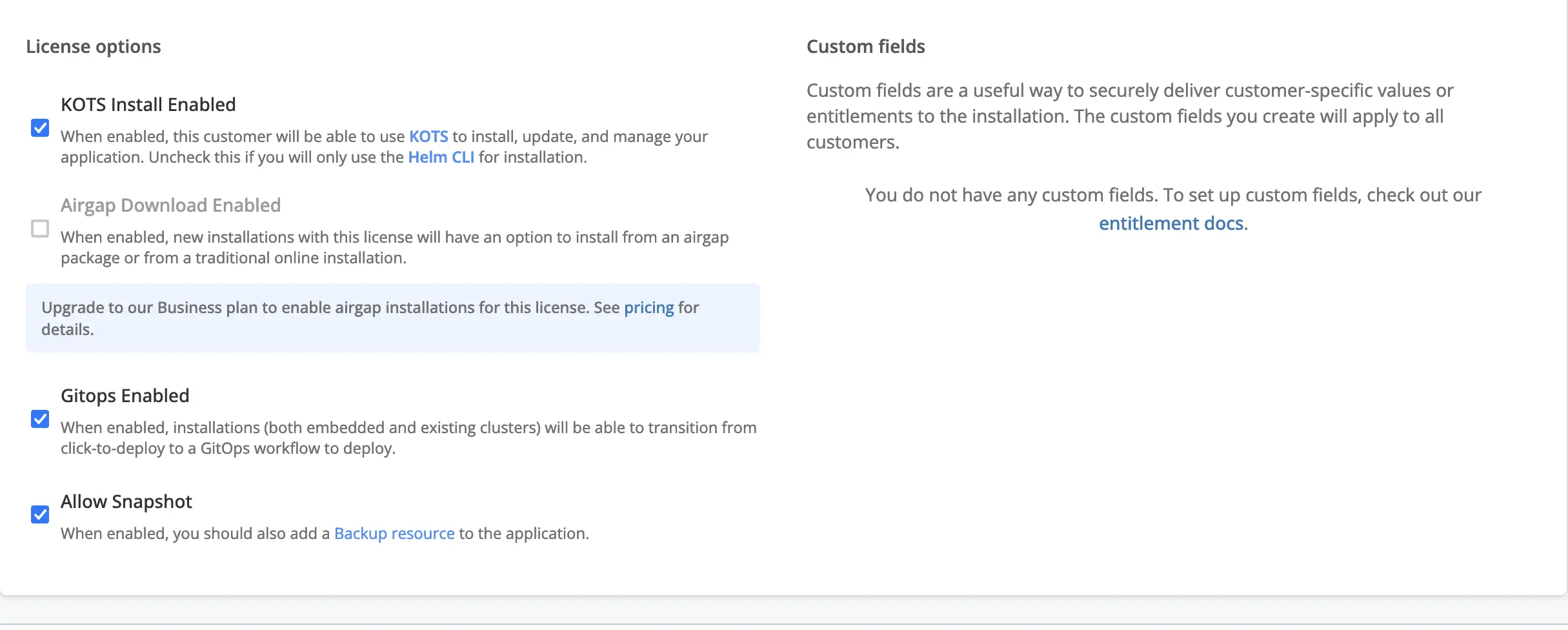

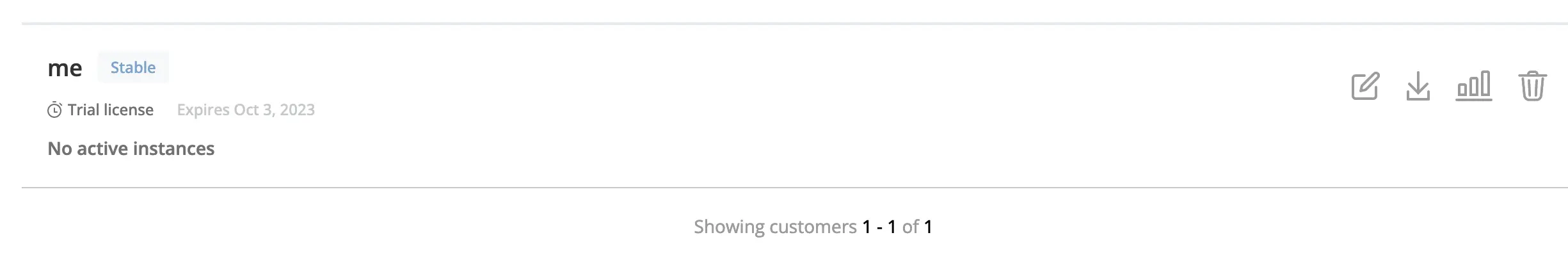

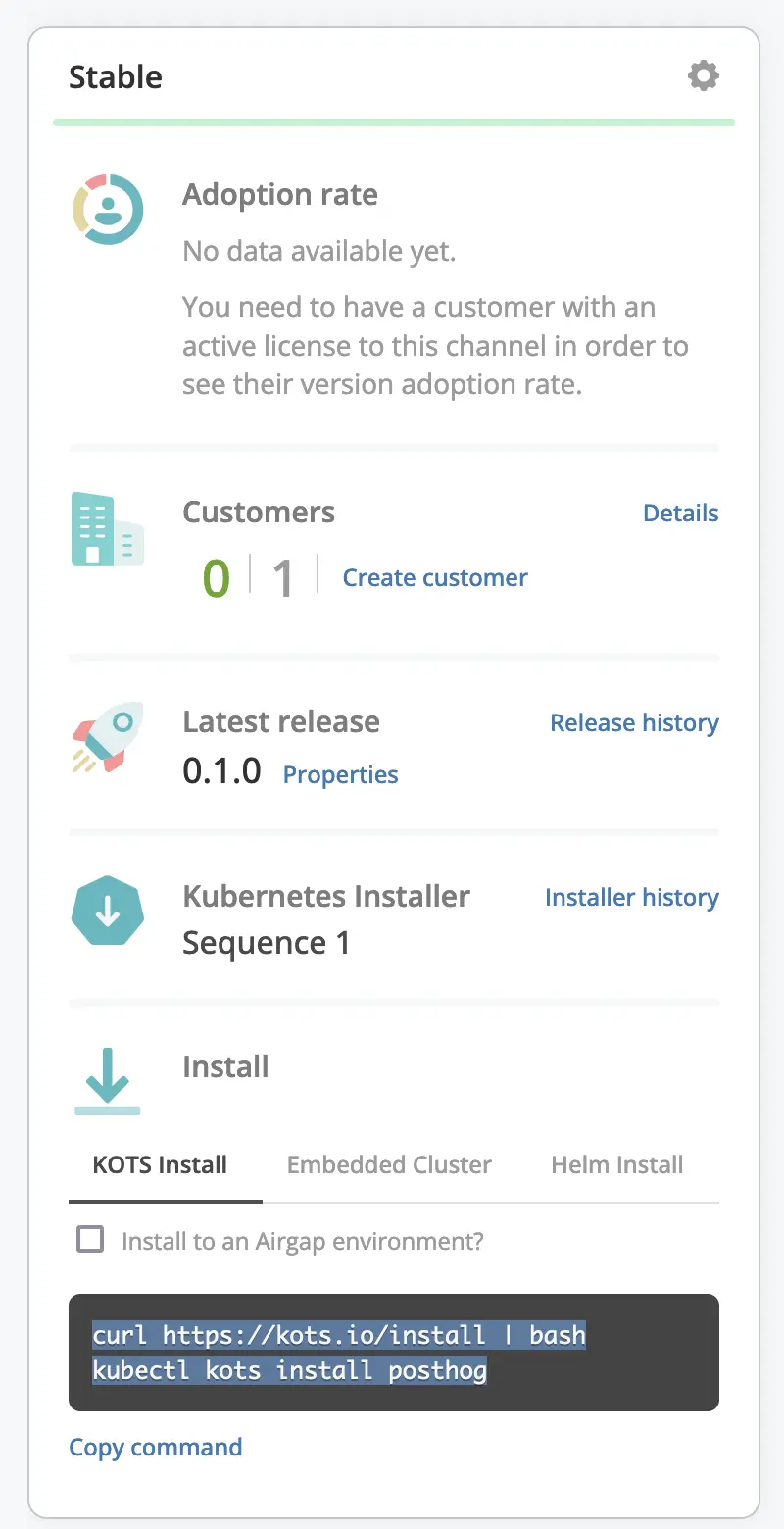

A vendor's main gateway into Replicated's system is the Vendor Portal. It's a web app with generic RBAC features for team creation and access, and it covers:

- Creating Applications

- Channel and Release management

- Customer Licenses

- Private Registries

- Custom Domains (to alias Replicated's registry for marketing/SEO)

- Preflight checks

- Support Bundles

- Compatibility Testing

- CICD Integrations

- Insights and Telemetry

I read through all this content. Most of the features are self-explanatory in my opinion, which is a good thing! There were still a few things that struck me as interesting.

I like how they architected kURL.sh + KOTS. It sounds like they've abstracted appropriately to get out of the way of your validation logic without forcing too much of a structure. I do love me some operator pattern.

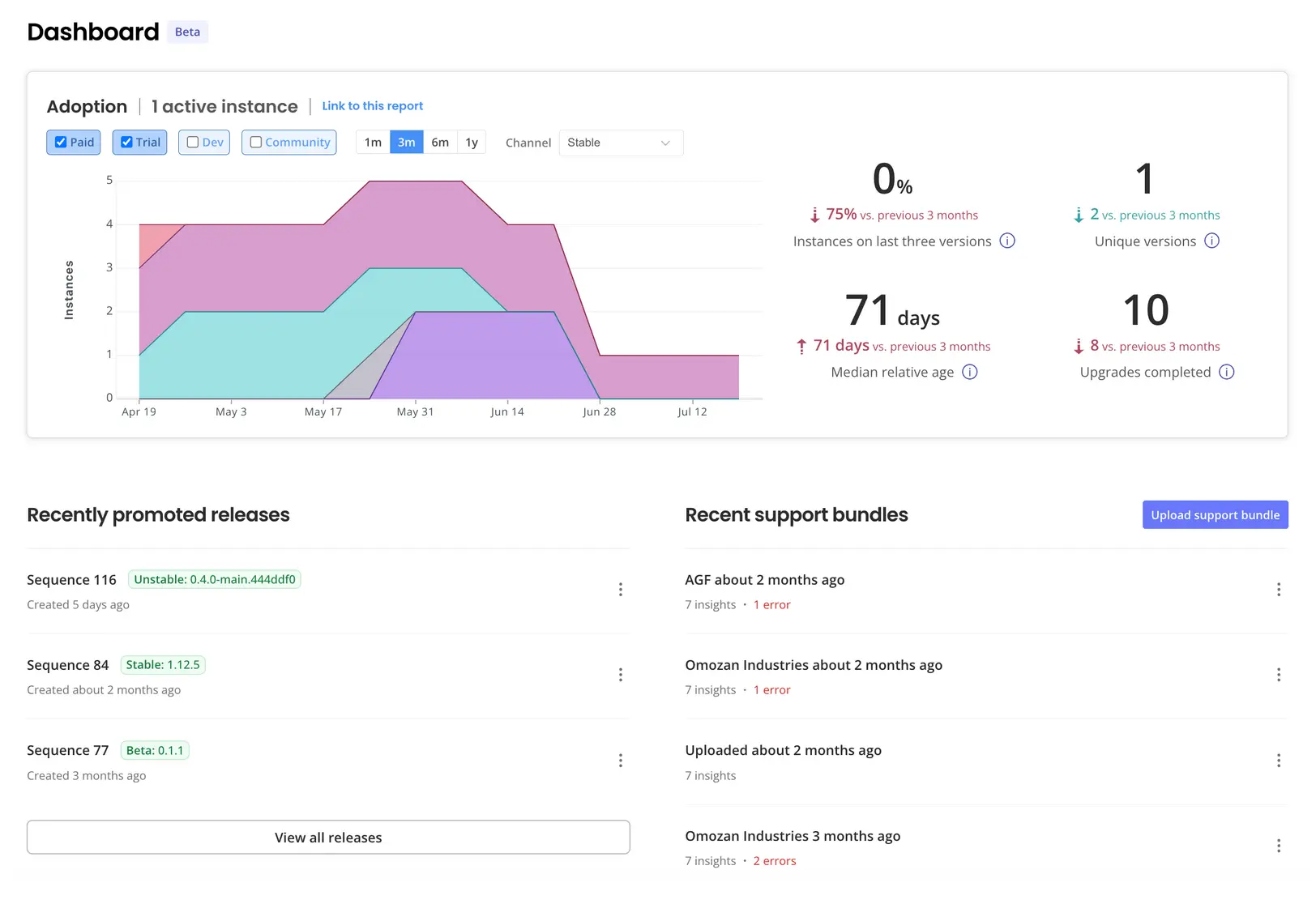

The Adoption Report seems like an insightful feature, certainly nice to have.

The KOTS dashboard provides what I would argue is near the minimal viable set of features:

Summary

Reading through the docs we learned:

- KOTS/kURL.sh is the core of their product

- Your application is packaged as a Helm Chart

- Most of their features depend on the KOTS operator being able to reach the internet

- An SDK can be integrated into your application to give more functionality

- The Replicated platform is an OCI registry with supporting features

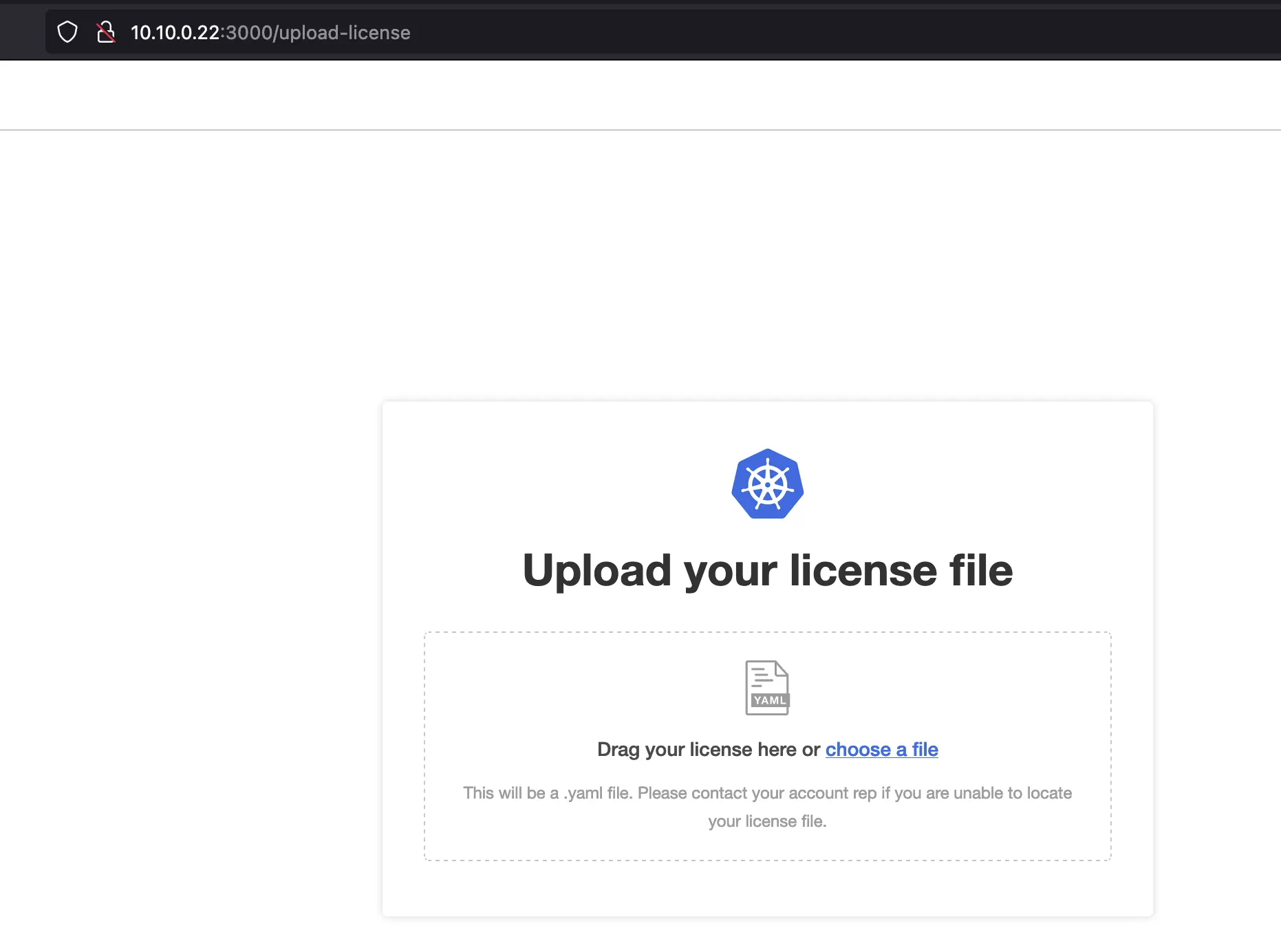

Replicated Demo

Armed with a decent understanding of what the product does and the major components in its architecture, it's time to go through a self-serve demo.

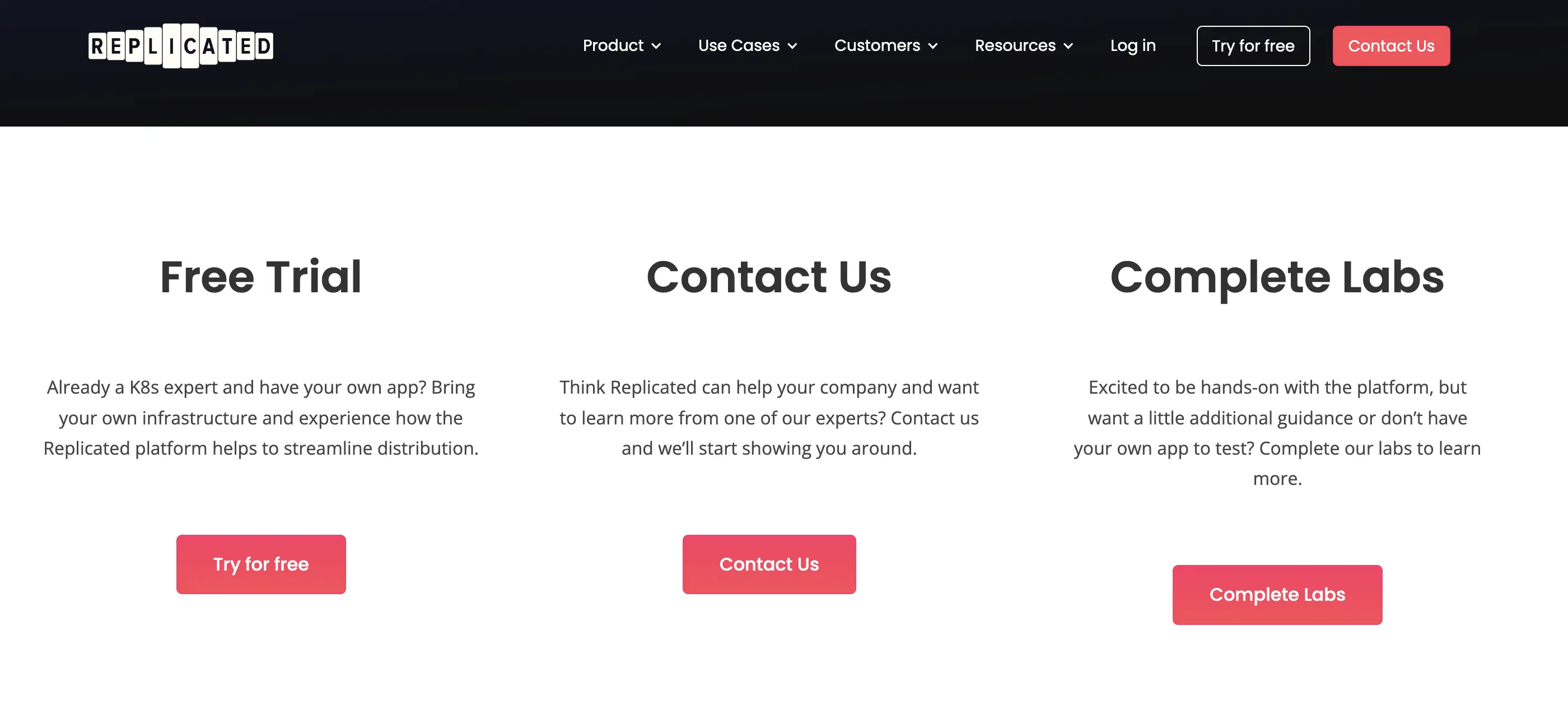

There're three routes to trying the product:

- If you already have a k8s cluster, and an App, you can start a Trial

- Contact them if you need more guidance

- Start with the labs, a guided learning path toward option 1

I'll go through route 1. I actually destroyed my Kubernetes cluster because the storage was too unreliable, so I'll have to spin up a cluster as well. It's on my todo list to deploy Posthog so I'll use that as my application to deploy.

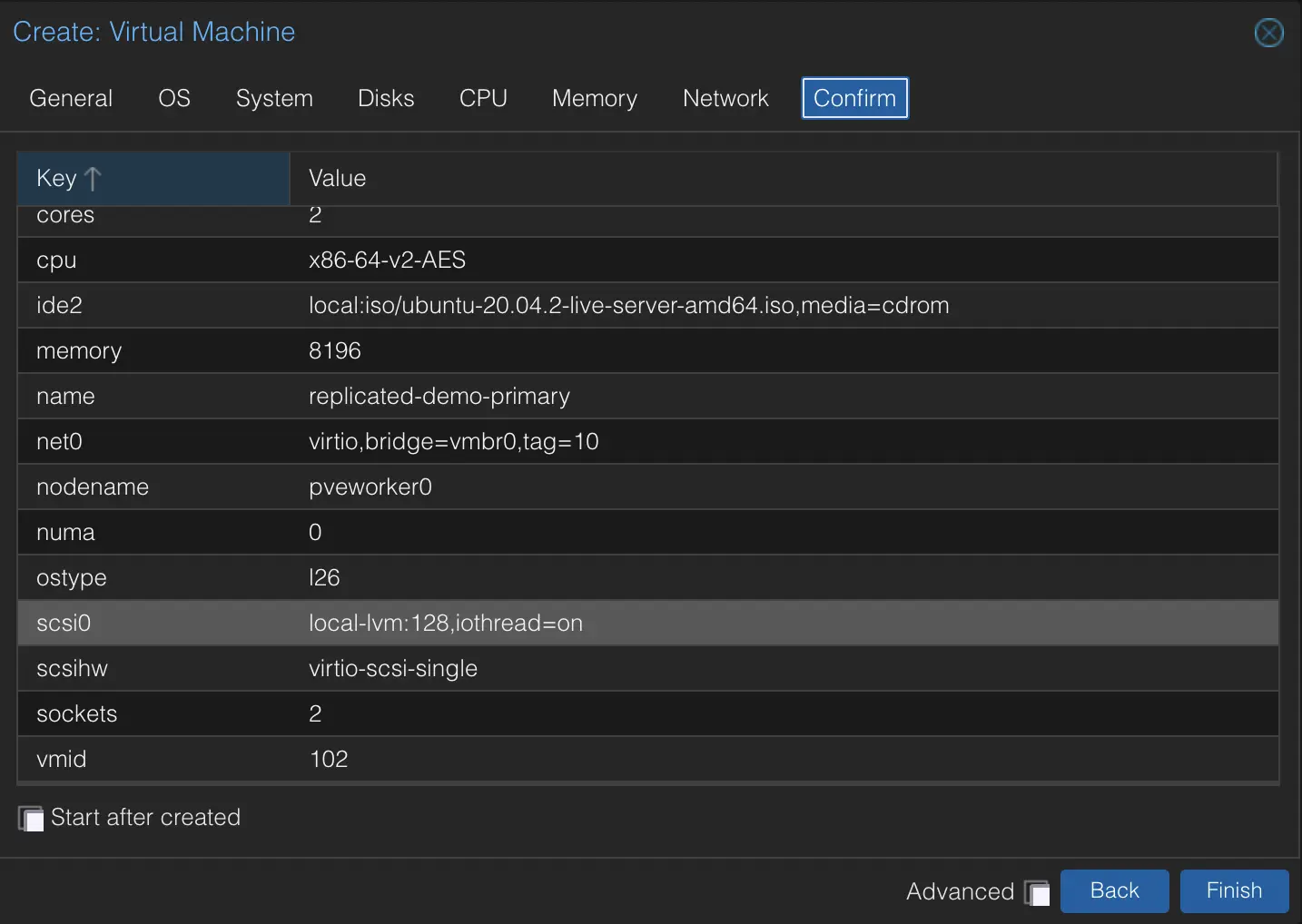

Step 1 - Create a Kubernetes Cluster

Because I'm using Replicated, I'll use their docs for deploying a Kubernetes cluster on my Proxmox environment rather than doing what I would usually do.

Their suggested approach is using kURL, which is based on kubeadm, the tool I would have used anyway! kURL is a Kubernetes distribution creator, meaning it can bundle up all the configurations and settings you want or need for your application and deliver them onto a machine as an installer. Pretty cool! In this case, though, I don't have my application packaged yet, so we'll just set up a cluster.

kURL is a framework for declaring the layers that exist before and after the services that kubeadm provides.

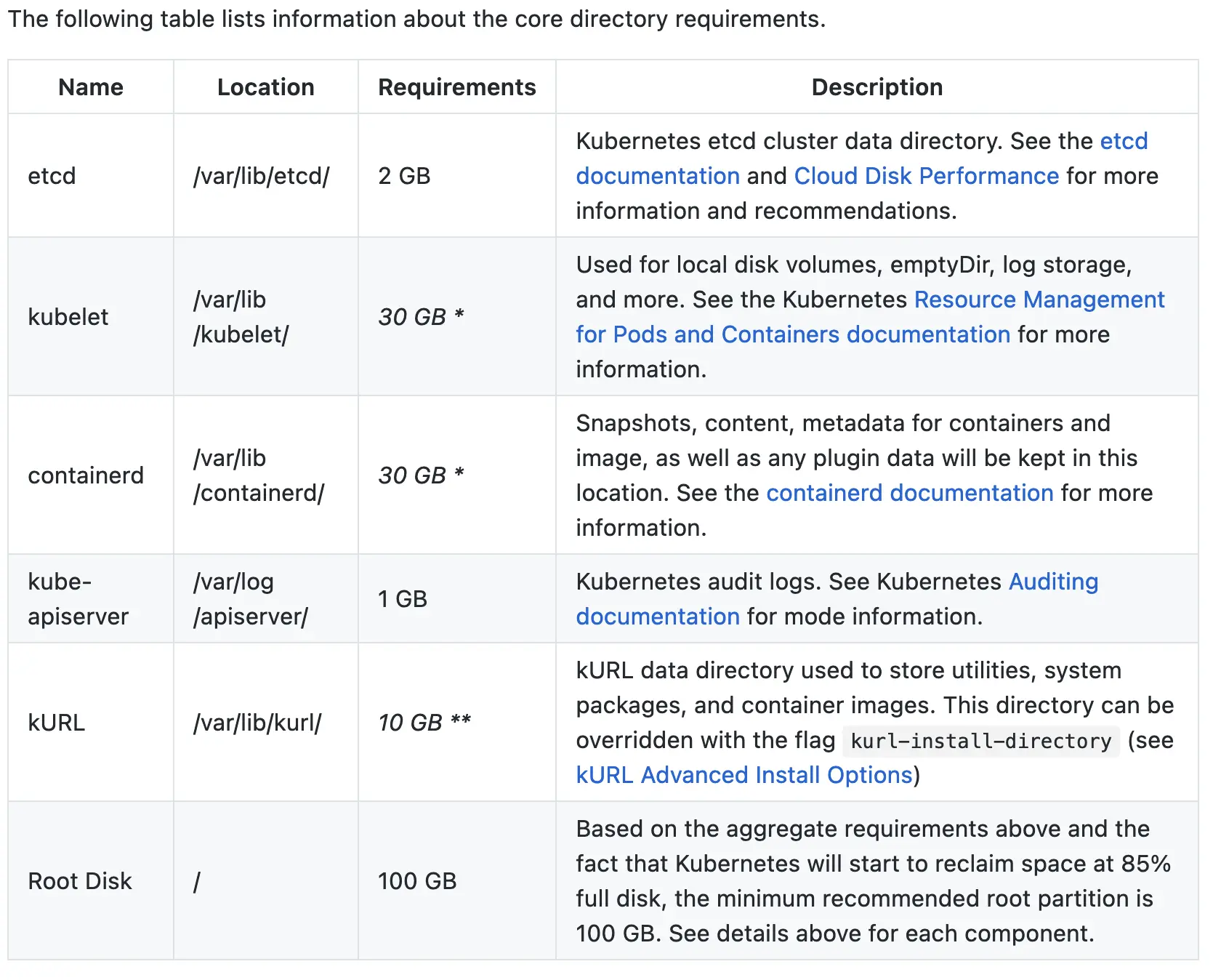

System requirements are defined as:

- Linux OS

- 4 AMD64 CPU cores

- 8GB RAM

- 100GB Disk

- TCP ports 2379, 2380, 6443, 10250, 10257 and 10259 and UDP port 8472 (Flannel VXLAN) open between cluster nodes

- They define a nice partition table for disk requirements

- They define storage and partition requirements for any Add-Ons you want to include: Registry for Air-Gapped, Docker, Rook, Ceph

- They define a firewall rule chart for installation and execution

- There's a section on High Availability containing extra firewall rules
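Before creating the VM it can be handy to check a host against the headline numbers in that list. Here is a minimal sketch, assuming a Linux host with GNU coreutils; the thresholds (4 cores, 8GB, 100GB) come from the list above, while the helper names `meets_minimum` and `check_host` are made up for this example and have nothing to do with kURL's own preflights.

```shell
#!/usr/bin/env bash
# Compare a host against the minimums listed above (4 cores, 8GB RAM, 100GB disk).
# Helper names are hypothetical; this is not kURL's preflight logic.

# meets_minimum LABEL ACTUAL REQUIRED: prints PASS/FAIL, returns 0 on pass.
meets_minimum() {
    local label="$1" actual="$2" required="$3"
    if [ "$actual" -ge "$required" ]; then
        echo "[PASS] ${label}: ${actual} >= ${required}"
    else
        echo "[FAIL] ${label}: ${actual} < ${required}"
        return 1
    fi
}

# check_host: gather the three numbers from the local machine and compare them.
check_host() {
    local cores mem_gb disk_gb failed=0
    cores="$(nproc)"
    # MemTotal is in kB; round up, since an "8GB" VM reports slightly less.
    mem_gb="$(awk '/^MemTotal:/ {printf "%d", ($2 + 1048575) / 1048576}' /proc/meminfo)"
    # Total size of the root filesystem, in GB.
    disk_gb="$(df -BG --output=size / | tail -n 1 | tr -dc '0-9')"

    meets_minimum "CPU cores" "$cores" 4 || failed=1
    meets_minimum "Memory (GB)" "$mem_gb" 8 || failed=1
    meets_minimum "Disk (GB)" "$disk_gb" 100 || failed=1
    return "$failed"
}
```

Sourcing the file and running `check_host` on the target VM prints one line per requirement and returns non-zero if any of them fall short.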

kURL works well for Air Gapped installations with everything required being packed into a .tar.gz file that once extracted handles everything. Presumably you can bundle your whole application into the installer and ship away!

For testing this out I want a single-node cluster. Luckily, the first install creates the primary node untainted, so it can cleanly run as a single-node cluster. Read more on that here.

The first thing I need, then, is a Linux VM to start with:

I went through the installer, updated the system and configured OpenSSH for remote access. I did nothing else. This is a completely fresh install.

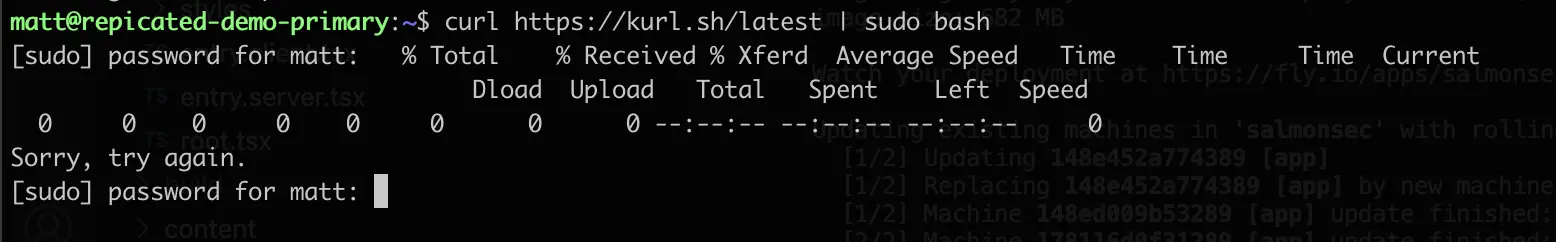

After that, the docs say I can run:

curl https://kurl.sh/latest | sudo bash

So, I did that!

kURL Inspection

kURL asked for root permissions, so I downloaded the script first and read through what it was going to do on my system. This wound up being time well spent.
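The download-then-read approach is easy to make a habit of. A minimal sketch: save the installer to disk without executing it, then count how many lines touch things you care about before opening it in a pager. `fetch_for_review` and `count_matches` are hypothetical helper names, and the patterns in the usage comment are just examples.

```shell
#!/usr/bin/env bash
# Save an installer to disk for review instead of piping it into a root shell.
# fetch_for_review and count_matches are hypothetical helper names.

# fetch_for_review URL DEST: download the script without executing it.
fetch_for_review() {
    local url="$1" dest="$2"
    curl -fsSL -o "$dest" "$url"
}

# count_matches FILE PATTERN: number of lines matching an extended regex.
count_matches() {
    local file="$1" pattern="$2"
    # grep -c exits 1 when the count is 0, so swallow that status.
    grep -cE -- "$pattern" "$file" || true
}

# Example (not run here):
#   fetch_for_review https://kurl.sh/latest kurl.sh
#   count_matches kurl.sh 'curl|wget'
#   count_matches kurl.sh 'rm -rf'
#   less kurl.sh     # read it, and only then: sudo bash kurl.sh
```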

First, I looked at other network operations the script was going to do. cat test.sh | grep curl:

curl -LO "$url"

printf "\n${GREEN} curl -LO %s${NC}\n\n" "$(get_dist_url)/$package_name"

printf "\n${GREEN} curl -LO %s${NC}\n\n" "$bundle_url"

newetag="$(curl -IfsSL "$package_url" | grep -i 'etag:' | sed -r 's/.*"(.*)".*/\1/')"

curl -fL -o "${filepath}" "${url}" || errcode="$?"

curl -sSOL "$(get_dist_url)/common.tar.gz"

curl -sSOL "$(get_dist_url)/common.tar.gz" || curl -sSOL "$(get_dist_url_fallback)/common.tar.gz"

local curl_flags=

curl_flags=" -x ${proxy_address}"

curl_flags=" -x ${proxy_https_address}"

echo "curl -fsSL${curl_flags} ${kurl_url}/version/${kurl_version}/${installer_id}/"

echo "curl -fsSL${curl_flags} ${kurl_url}/${installer_id}/"

# Usage: cmd_retry 3 curl --globoff --noproxy "*" --fail --silent --insecure https://10.128.0.25:6443/healthz

_out=$(curl --noproxy "*" --max-time 5 --connect-timeout 2 -qSfs -H 'Metadata-Flavor: Google' http://169.254.169.254/computeMetadata/v1/instance/network-interfaces/0/access-configs/0/external-ip 2>/dev/null)

_out=$(curl --noproxy "*" --max-time 5 --connect-timeout 2 -qSfs http://169.254.169.254/latest/meta-data/public-ipv4 2>/dev/null)

_out=$(curl --noproxy "*" --max-time 5 --connect-timeout 2 -qSfs -H Metadata:true "http://169.254.169.254/metadata/instance/network/interface/0/ipv4/ipAddress/0/publicIpAddress?api-version=2017-08-01&format=text" 2>/dev/null)

curl -fsSL -X PUT \

curl -fsSL -I \

curl --globoff --noproxy "*" --fail --silent --insecure "http://${IP}" > /dev/null

curl --fail $LICENSE_URL || bail "Failed to fetch license at url: $LICENSE_URL"

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl -s --output /dev/null -H 'Content-Type: application/json' --max-time 5 \

curl 'https://support-bundle-secure-upload.replicated.com/v1/upload' \

logWarn "$ curl <installer>/task.sh | sudo bash -s remove_rook_ceph, i.e.:"

logWarn "curl https://kurl.sh/latest/tasks.sh | sudo bash -s remove_rook_ceph"

curl --globoff --noproxy "*" --fail --silent --insecure "https://$(kubernetes_api_address)/healthz" >/dev/null
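A raw grep like this is noisy, so a quick way to narrow it is to pull out only the literal hosts the script hard-codes. The sketch below does that with a hypothetical `list_hosts` helper; URLs built from variables (such as `"https://${IP}"`) are deliberately skipped because the host cannot be known without running the script. In the grep output above, the `169.254.169.254` entries are the cloud metadata endpoint queried with Google, AWS and Azure style requests.

```shell
#!/usr/bin/env bash
# list_hosts FILE: print the unique literal http(s) hosts that appear in a script.
# Hosts assembled from shell variables are not matched, on purpose.
list_hosts() {
    grep -oE 'https?://[A-Za-z0-9.-]+' "$1" \
        | sed -E 's#^https?://##' \
        | sort -u
}

# Example (not run here): list_hosts kurl.sh
```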

So it's probably downloading some binaries with curl -sSOL "$(get_dist_url)/common.tar.gz". I reconstructed the result of get_dist_url and downloaded the tarball.

# snippet from kURL.sh
DIST_URL="https://s3.kurl.sh/dist"
KURL_VERSION="v2023.09.12-0"
...
function get_dist_url() {
    local url="$DIST_URL"
    if [ -n "${KURL_VERSION}" ]; then
        url="${DIST_URL}/${KURL_VERSION}"
    fi
    echo "$url"
}
# End snippet

$ curl -sSOL https://s3.kurl.sh/dist/v2023.09.12-0/common.tar.gz

$ tar -xzvf common.tar.gz

kustomize/

kustomize/kubeadm/

kustomize/kubeadm/init-orig/

kustomize/kubeadm/init-orig/kubelet-args-pause-image.patch.tmpl.yaml

kustomize/kubeadm/init-orig/patch-kubelet-container-log-max-files.tpl

kustomize/kubeadm/init-orig/patch-kubelet-max-pods.tmpl.yaml

kustomize/kubeadm/init-orig/patch-kubelet-cis-compliance.yaml

kustomize/kubeadm/init-orig/patch-kubelet-21.yaml

kustomize/kubeadm/init-orig/patch-load-balancer-address.yaml

kustomize/kubeadm/init-orig/patch-kubelet-system-reserved.tpl

kustomize/kubeadm/init-orig/patch-kubelet-container-log-max-size.tpl

kustomize/kubeadm/init-orig/kubelet-config-v1beta1.yaml

kustomize/kubeadm/init-orig/patch-kubelet-eviction-threshold.tpl

kustomize/kubeadm/init-orig/kubeadm-init-config-v1beta3.yml

kustomize/kubeadm/init-orig/patch-public-and-load-balancer-address.yaml

kustomize/kubeadm/init-orig/kustomization.yaml

kustomize/kubeadm/init-orig/patch-public-address.yaml

kustomize/kubeadm/init-orig/audit.yaml

kustomize/kubeadm/init-orig/patch-kubelet-reserve-compute-resources.tpl

kustomize/kubeadm/init-orig/pod-security-policy-privileged.yaml

kustomize/kubeadm/init-orig/kubeadm-init-hostname.patch.tmpl.yaml

kustomize/kubeadm/init-orig/kubeadm-cluster-config-v1beta3.yml

kustomize/kubeadm/init-orig/kubeadm-cluster-config-v1beta2.yml

kustomize/kubeadm/init-orig/kubeadm-init-config-v1beta2.yml

kustomize/kubeadm/init-orig/kubeproxy-config-v1alpha1.yml

kustomize/kubeadm/init-orig/patch-kubelet-pre21.yaml

kustomize/kubeadm/init-orig/patch-certificate-key.yaml

kustomize/kubeadm/init-orig/patch-cluster-config-cis-compliance.yaml

kustomize/kubeadm/init-orig/patch-cluster-config-cis-compliance-insecure-port.yaml

kustomize/kubeadm/join-orig/

kustomize/kubeadm/join-orig/kubelet-args-pause-image.patch.tmpl.yaml

kustomize/kubeadm/join-orig/kubeadm-join-config-v1beta3.yaml

kustomize/kubeadm/join-orig/kubeadm-join-hostname.patch.tmpl.yaml

kustomize/kubeadm/join-orig/kubeadm-join-config-v1beta2.yaml

kustomize/kubeadm/join-orig/kustomization.yaml

kustomize/kubeadm/join-orig/audit.yaml

kustomize/kubeadm/join-orig/patch-control-plane.yaml

manifests/

manifests/troubleshoot.yaml

shared/

shared/kurl-util.tar

krew/

krew/support-bundle.yaml

krew/outdated.tar.gz

krew/outdated.yaml

krew/krew.tar.gz

krew/support-bundle.tar.gz

krew/preflight.yaml

krew/krew.yaml

krew/preflight.tar.gz

krew/index.tar

kurlkinds/

kurlkinds/cluster.kurl.sh_installers.yaml

helm/

helm/helmfile

helm/helm

This downloads all the third-party tools the installer relies on.

- kubeadm and helm are declared in docs and expected

- kustomize is standard, but also likely enforced by using kubeadm

- krew is a kubectl plugin provider, perhaps this is used for installing KOTS

- kurlkinds is a collection of the CRDs required for kURL.sh to work

- manifests has a rogue manifest that I'd like to read

- shared/kurl-util.tar perhaps has even more tools I'd like to look at
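With a listing this long, a per-directory count makes the shape of the bundle easier to see. A small sketch, assuming the listing comes from `tar -t`; `summarize_listing` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# summarize_listing: read a `tar -t` listing on stdin and print the number of
# entries under each top-level directory, sorted by directory name.
summarize_listing() {
    awk -F/ 'NF { count[$1]++ } END { for (d in count) print count[d], d }' \
        | sort -k2
}

# Example (not run here): tar -tzf common.tar.gz | summarize_listing
```

The directory entry itself (for example `helm/`) is counted too, so a directory with two files shows up as 3.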

The troubleshoot.yaml is a bit of a meme, all that to make sure DNS isn't the issue! Joke's on them, DNS is always the issue!

apiVersion: v1
kind: Secret
metadata:
  name: kurl-troubleshoot-spec
  labels:
    troubleshoot.io/kind: support-bundle
stringData:
  support-bundle-spec: |
    apiVersion: troubleshoot.sh/v1beta2
    kind: SupportBundle
    metadata:
      name: kurl
    spec:
      collectors:
        - configMap:
            collectorName: coredns
            name: coredns
            namespace: kube-system
            includeAllData: true
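One detail worth knowing about this manifest: values written under `stringData` are stored base64-encoded under `data` once the Secret is applied, so reading the spec back from a live cluster needs a decode step. A minimal sketch of that step follows; `decode_spec` is a hypothetical helper, and the namespace in the usage comment is a guess on my part since the manifest does not set one.

```shell
#!/usr/bin/env bash
# decode_spec: base64-decode a Secret value read from stdin.
decode_spec() {
    base64 -d
}

# Example against a live cluster (not run here; the namespace is an assumption):
#   kubectl get secret kurl-troubleshoot-spec -n default \
#     -o jsonpath='{.data.support-bundle-spec}' | decode_spec
```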

kurl-util.tar is actually a docker container:

$ tar -xvf kurl-util.tar

0446aeb87b57acf1a83e457052cb4cdf75ee964c725dcbe2280d42ad7db36928/

0446aeb87b57acf1a83e457052cb4cdf75ee964c725dcbe2280d42ad7db36928/VERSION

0446aeb87b57acf1a83e457052cb4cdf75ee964c725dcbe2280d42ad7db36928/json

0446aeb87b57acf1a83e457052cb4cdf75ee964c725dcbe2280d42ad7db36928/layer.tar

3a28ce1e8d8ac90a3767856d4cf67edc3c7790931c54be63ebb5837315bf8191/

3a28ce1e8d8ac90a3767856d4cf67edc3c7790931c54be63ebb5837315bf8191/VERSION

3a28ce1e8d8ac90a3767856d4cf67edc3c7790931c54be63ebb5837315bf8191/json

3a28ce1e8d8ac90a3767856d4cf67edc3c7790931c54be63ebb5837315bf8191/layer.tar

75d62f1b868dec0839dee2f1d3251555a36e6e9b8b039734b76737e9a5d0f074.json

e68320df7ef742118354d3e8edcb46eccdbfc2b1b55398a19aa8427026c100e5/

e68320df7ef742118354d3e8edcb46eccdbfc2b1b55398a19aa8427026c100e5/VERSION

e68320df7ef742118354d3e8edcb46eccdbfc2b1b55398a19aa8427026c100e5/json

e68320df7ef742118354d3e8edcb46eccdbfc2b1b55398a19aa8427026c100e5/layer.tar

manifest.json

repositories

$ cat manifest.json

[{"Config":"75d62f1b868dec0839dee2f1d3251555a36e6e9b8b039734b76737e9a5d0f074.json","RepoTags":["replicated/kurl-util:v2023.09.12-0"],"Layers":["0446aeb87b57acf1a83e457052cb4cdf75ee964c725dcbe2280d42ad7db36928/layer.tar","3a28ce1e8d8ac90a3767856d4cf67edc3c7790931c54be63ebb5837315bf8191/layer.tar","e68320df7ef742118354d3e8edcb46eccdbfc2b1b55398a19aa8427026c100e5/layer.tar"]}]

It's not clear what the purpose of this container is, and I couldn't find much beyond some release notes on it. My best guess is this is executed in the cluster during installation to help with pre and post validation, and is removed after installation?
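One way to dig further without running the image is to list what each layer contains. The sketch below pulls the layer paths out of the `manifest.json` shown above using plain grep, so no JSON tooling is needed; `list_layers` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# list_layers MANIFEST: print the layer tarballs named in a `docker save`
# style manifest.json, one per line.
list_layers() {
    grep -oE '[0-9a-f]{64}/layer\.tar' "$1"
}

# Example (not run here), from the directory where kurl-util.tar was extracted:
#   for layer in $(list_layers manifest.json); do
#       echo "== ${layer}"
#       tar -tf "${layer}" | head -n 20
#   done
```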

Nothing here seems too scary for a VM execution, I suppose. I read through the file to make sure there were no sneaky commands hidden between function declarations; there weren't. All the execution logic is neatly packed into main():

function main() {

logStep "Running install with the argument(s): $*"

require_root_user

# ensure /usr/local/bin/kubectl-plugin is in the path

path_add "/usr/local/bin"

kubernetes_init_hostname

get_patch_yaml "$@"

maybe_read_kurl_config_from_cluster

if [ "$AIRGAP" = "1" ]; then

move_airgap_assets

fi

pushd_install_directory

yaml_airgap

proxy_bootstrap

download_util_binaries

get_machine_id

merge_yaml_specs

apply_bash_flag_overrides "$@"

parse_yaml_into_bash_variables

MASTER=1 # parse_yaml_into_bash_variables will unset master

prompt_license

export KUBECONFIG=/etc/kubernetes/admin.conf

is_ha

parse_kubernetes_target_version

discover full-cluster

report_install_start

setup_remote_commands_dirs

trap ctrl_c SIGINT # trap ctrl+c (SIGINT) and handle it by reporting that the user exited intentionally (along with the line/version/etc)

trap trap_report_error ERR # trap errors and handle it by reporting the error line and parent function

preflights

init_preflights

kubernetes_upgrade_preflight

common_prompts

journald_persistent

configure_proxy

configure_no_proxy_preinstall

${K8S_DISTRO}_addon_for_each addon_fetch

kubernetes_get_packages

preflights_require_host_packages

if [ -z "$CURRENT_KUBERNETES_VERSION" ]; then

host_preflights "1" "0" "0"

cluster_preflights "1" "0" "0"

else

host_preflights "1" "0" "1"

cluster_preflights "1" "0" "1"

fi

install_host_dependencies

get_common

setup_kubeadm_kustomize

rook_upgrade_maybe_report_upgrade_rook

kubernetes_pre_init

${K8S_DISTRO}_addon_for_each addon_pre_init

discover_pod_subnet

discover_service_subnet

configure_no_proxy

install_cri

kubernetes_configure_pause_image_upgrade

get_shared

report_upgrade_kubernetes

report_kubernetes_install

export SUPPORT_BUNDLE_READY=1 # allow ctrl+c and ERR traps to collect support bundles now that k8s is installed

kurl_init_config

maybe_set_kurl_cluster_uuid

kurl_install_support_bundle_configmap

${K8S_DISTRO}_addon_for_each addon_install

maybe_cleanup_rook

maybe_cleanup_longhorn

helmfile_sync

kubeadm_post_init

uninstall_docker

${K8S_DISTRO}_addon_for_each addon_post_init

check_proxy_config

rook_maybe_migrate_from_openebs

outro

package_cleanup

popd_install_directory

report_install_success

}

Here are the high-level steps that are happening:

- Script assertions

- Asset download

- Kustomize build

- Perform pre-flight validation on the system

- Use kubeadm to install the configured cluster

- Install add-ons

- Execute post install validation
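The shape of those steps, a fixed sequence of phases where a failure stops everything and gets reported, can be sketched in a few lines. This is my own simplified illustration of the pattern, not kURL's code: the real script uses `trap` handlers for error reporting, while this sketch checks each step's exit status directly, and `run_steps` is a hypothetical name.

```shell
#!/usr/bin/env bash
# run_steps STEP...: run each named function in order and stop at the first
# failure, reporting which step broke. A simplified stand-in for the
# trap-based error reporting in the real installer.
run_steps() {
    local step
    for step in "$@"; do
        echo "==> ${step}"
        if ! "$step"; then
            echo "step failed: ${step}" >&2
            return 1
        fi
    done
}

# Example (not run here), with placeholder functions for each phase:
#   assert_root()   { [ "$(id -u)" -eq 0 ]; }
#   fetch_assets()  { echo "would download packages"; }
#   run_steps assert_root fetch_assets
```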

Okay fine, I'm not scared. Let's execute it!

# ./kurl.sh

⚙ Running install with the argument(s):

Downloading package kurl-bin-utils-v2023.09.12-0.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 191M 100 191M 0 0 56.9M 0 0:00:03 0:00:03 --:--:-- 56.9M

This application is incompatible with memory swapping enabled. Disable swap to continue? (Y/n) y

=> Running swapoff --all

=> Commenting swap entries in /etc/fstab

=> A backup of /etc/fstab has been made at /etc/fstab.bak

Changes have been made to /etc/fstab. We recommend reviewing them after completing this installation to ensure mounts are correctly configured.

✔ Swap disabled.

Failed to get unit file state for firewalld.service: No such file or directory

✔ Firewalld is either not enabled or not active.

SELinux is not installed: no configuration will be applied

The installer will use network interface 'ens18' (with IP address '10.10.0.22')

Not using proxy address

Downloading package containerd-1.6.22.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 474M 100 474M 0 0 61.0M 0 0:00:07 0:00:07 --:--:-- 67.3M

Downloading package flannel-0.22.2.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 28.6M 100 28.6M 0 0 44.1M 0 --:--:-- --:--:-- --:--:-- 44.0M

Downloading package ekco-0.28.3.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 78.6M 100 78.6M 0 0 39.9M 0 0:00:01 0:00:01 --:--:-- 39.9M

Downloading package openebs-3.8.0.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 52.0M 100 52.0M 0 0 44.8M 0 0:00:01 0:00:01 --:--:-- 44.8M

Downloading package minio-2023-09-04T19-57-37Z.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 93.2M 100 93.2M 0 0 43.4M 0 0:00:02 0:00:02 --:--:-- 43.4M

Downloading package contour-1.25.2.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 63.8M 100 63.8M 0 0 58.2M 0 0:00:01 0:00:01 --:--:-- 58.2M

Downloading package registry-2.8.2.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 28.4M 100 28.4M 0 0 53.2M 0 --:--:-- --:--:-- --:--:-- 53.1M

Downloading package prometheus-0.67.1-50.3.1.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 330M 100 330M 0 0 59.4M 0 0:00:05 0:00:05 --:--:-- 59.9M

Downloading package kubernetes-1.27.5.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 926M 100 926M 0 0 59.8M 0 0:00:15 0:00:15 --:--:-- 60.6M

⚙ Running host preflights

[TCP Port Status] Running collector...

[CPU Info] Running collector...

[Amount of Memory] Running collector...

[Block Devices] Running collector...

[Host OS Info] Running collector...

[Ephemeral Disk Usage /var/lib/kubelet] Running collector...

[Ephemeral Disk Usage /var/lib/containerd] Running collector...

[Ephemeral Disk Usage /var/openebs] Running collector...

[Kubernetes API TCP Port Status] Running collector...

[ETCD Client API TCP Port Status] Running collector...

[ETCD Server API TCP Port Status] Running collector...

[ETCD Health Server TCP Port Status] Running collector...

[Kubelet Health Server TCP Port Status] Running collector...

[Kubelet API TCP Port Status] Running collector...

[Kube Controller Manager Health Server TCP Port Status] Running collector...

[Kube Scheduler Health Server TCP Port Status] Running collector...

[Filesystem Latency Two Minute Benchmark] Running collector...

[Host OS Info] Running collector...

[Flannel UDP port 8472] Running collector...

[Node Exporter Metrics Server TCP Port Status] Running collector...

[Host OS Info] Running collector...

I0912 19:58:27.065461 2845 analyzer.go:77] excluding "Certificate Key Pair" analyzer

I0912 19:58:27.065501 2845 analyzer.go:77] excluding "Certificate Key Pair" analyzer

I0912 19:58:27.065531 2845 analyzer.go:77] excluding "Kubernetes API Health" analyzer

I0912 19:58:27.065619 2845 analyzer.go:77] excluding "Ephemeral Disk Usage /var/lib/docker" analyzer

I0912 19:58:27.065664 2845 analyzer.go:77] excluding "Ephemeral Disk Usage /var/lib/rook" analyzer

I0912 19:58:27.065703 2845 analyzer.go:77] excluding "Kubernetes API Server Load Balancer" analyzer

I0912 19:58:27.065723 2845 analyzer.go:77] excluding "Kubernetes API Server Load Balancer Upgrade" analyzer

I0912 19:58:27.065831 2845 analyzer.go:77] excluding "Kubernetes API TCP Connection Status" analyzer

I0912 19:58:27.066115 2845 analyzer.go:77] excluding "Docker Support" analyzer

I0912 19:58:27.066154 2845 analyzer.go:77] excluding "Containerd and Weave Compatibility" analyzer

[PASS] Number of CPUs: This server has at least 4 CPU cores

[PASS] Amount of Memory: The system has at least 8G of memory

[PASS] Ephemeral Disk Usage /var/lib/kubelet: The disk containing directory /var/lib/kubelet has at least 30Gi of total space, has at least 10Gi of disk space available, and is less than 60% full

[PASS] Ephemeral Disk Usage /var/lib/containerd: The disk containing directory /var/lib/containerd has at least 30Gi of total space, has at least 10Gi of disk space available, and is less than 60% full.

[PASS] Ephemeral Disk Usage /var/openebs: The disk containing directory /var/openebs has sufficient space

[PASS] Kubernetes API TCP Port Status: Port 6443 is open

[PASS] ETCD Client API TCP Port Status: Port 2379 is open

[PASS] ETCD Server API TCP Port Status: Port 2380 is open

[PASS] ETCD Health Server TCP Port Status: Port 2381 is available

[PASS] Kubelet Health Server TCP Port Status: Port 10248 is available

[PASS] Kubelet API TCP Port Status: Port 10250 is open

[PASS] Kube Controller Manager Health Server TCP Port Status: Port 10257 is available

[PASS] Kube Scheduler Health Server TCP Port Status: Port 10259 is available

[WARN] Filesystem Performance: Write latency is high. p99 target < 10ms, actual:

Min: 24.894478ms

Max: 574.916396ms

Avg: 133.88821ms

p1: 38.901358ms

p5: 50.405301ms

p10: 64.855105ms

p20: 83.231062ms

p30: 91.666307ms

p40: 108.333184ms

p50: 116.829311ms

p60: 133.323897ms

p70: 150.473231ms

p80: 175.048333ms

p90: 224.82721ms

p95: 274.80427ms

p99: 391.624767ms

p99.5: 441.49694ms

p99.9: 574.916396ms

p99.95: 574.916396ms

p99.99: 574.916396ms

[PASS] NTP Status: System clock is synchronized

[PASS] NTP Status: Timezone is set to UTC

[PASS] Host OS Info: containerd addon supports ubuntu 20.04

[PASS] Flannel UDP port 8472 status: Port is open

[PASS] Node Exporter Metrics Server TCP Port Status: Port 9100 is available

[PASS] Host OS Info: containerd addon supports ubuntu 20.04

Host preflights have warnings

It is highly recommended to sort out the warning conditions before proceeding.

Be aware that continuing with preflight warnings can result in failures.

Would you like to continue?

(Y/n)

Dang, I know my disks are awful; this is an active problem in my lab. It's pretty nice that their script picked up on that.
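For anyone curious what a warning like this is measuring, here is a minimal sketch of a write-latency check in Python. It is not Replicated's implementation (the real collector runs a two-minute benchmark, going by its name in the output above); it only times a handful of small write-plus-fsync cycles and compares the p99 against the 10ms target quoted in the warning. The function names, the number of writes, and the block size are all assumptions made for illustration.

```python
import math
import os
import tempfile
import time


def fsync_latencies_ms(directory, writes=50, block_size=4096):
    """Time `writes` small write+fsync cycles in `directory` (milliseconds)."""
    samples = []
    payload = b"\0" * block_size
    # The temporary file is removed automatically when the block exits.
    with tempfile.NamedTemporaryFile(dir=directory) as f:
        for _ in range(writes):
            start = time.perf_counter()
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # force the data to stable storage
            samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Assumption: benchmark the system temp dir; point this at the disk you care about.
    samples = fsync_latencies_ms(tempfile.gettempdir())
    p99 = percentile(samples, 99)
    verdict = "PASS" if p99 < 10.0 else "WARN"  # 10ms target taken from the log above
    print(f"[{verdict}] p50={percentile(samples, 50):.3f}ms p99={p99:.3f}ms")
```

Keep in mind that the temp directory may live on a RAM-backed filesystem on some hosts, which would make the numbers look far better than the real disk.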

Found pod network: 10.32.0.0/20

Found service network: 10.96.0.0/22
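To get a feel for the two ranges the installer just reported, here is a small sketch using Python's standard `ipaddress` module. It is only a sanity check written for this post, not something the installer runs; the helper names `describe_networks` and `in_network` are made up for illustration. The service IPs tested at the bottom are ones that show up later in this same log.

```python
import ipaddress


def describe_networks(pod_cidr, service_cidr):
    """Return the size of each range and whether the two ranges overlap."""
    pod_net = ipaddress.ip_network(pod_cidr)
    svc_net = ipaddress.ip_network(service_cidr)
    return {
        "pod_addresses": pod_net.num_addresses,
        "service_addresses": svc_net.num_addresses,
        "overlap": pod_net.overlaps(svc_net),
    }


def in_network(ip, cidr):
    """True if `ip` falls inside `cidr`."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr)


if __name__ == "__main__":
    info = describe_networks("10.32.0.0/20", "10.96.0.0/22")
    print(info["pod_addresses"])      # 4096
    print(info["service_addresses"])  # 1024
    print(info["overlap"])            # False

    # Service IPs that appear later in the install log.
    for ip in ("10.96.0.1", "10.96.0.160", "10.96.2.205"):
        print(ip, in_network(ip, "10.96.0.0/22"))  # True for all three
```

So the /20 leaves room for 4096 pod addresses and the /22 for 1024 service addresses, and the two ranges do not collide.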

⚙ Addon containerd 1.6.22

⚙ Installing host packages containerd.io

Selecting previously unselected package containerd.io.

(Reading database ... 71974 files and directories currently installed.)

Preparing to unpack .../containerd.io_1.6.22-1_amd64.deb ...

Unpacking containerd.io (1.6.22-1) ...

Setting up containerd.io (1.6.22-1) ...

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Processing triggers for man-db (2.9.1-1) ...

✔ Host packages containerd.io installed

⚙ Checking package manager status

Reading package lists...

Building dependency tree...

Reading state information...

✔ Status checked successfully. No broken packages were found.

⚙ Containerd configuration

Enabling containerd

Enabling containerd crictl

Checks if the file /etc/crictl.yaml exist

Creates /etc/crictl.yaml

Checking for containerd custom settings

Creating /etc/systemd/system/containerd.service.d

Checking for proxy set

Checking registry configuration for the distro kubeadm and if Docker registry IP is set

Checking if containerd requires to be re-started

Re-starting containerd

- Restarting containerd...

Checking if containerd was restarted successfully

✔ Service containerd restarted.

✔ Containerd is successfully configured

Checking if is required to migrate images from Docker

unpacking registry.k8s.io/pause:3.6 (sha256:79b611631c0d19e9a975fb0a8511e5153789b4c26610d1842e9f735c57cc8b13)...done

k8s.gcr.io/pause:3.6

Checking if the kubelet service is enabled

unpacking docker.io/replicated/kurl-util:v2023.09.12-0 (sha256:1954a4d769554c719e2c7f43c0abed534b3e81027d34c5a32357f97fdff5f125)...done

Adding kernel module br_netfilter

* Applying /etc/sysctl.d/10-console-messages.conf ...

kernel.printk = 4 4 1 7

* Applying /etc/sysctl.d/10-ipv6-privacy.conf ...

net.ipv6.conf.all.use_tempaddr = 2

net.ipv6.conf.default.use_tempaddr = 2

* Applying /etc/sysctl.d/10-kernel-hardening.conf ...

kernel.kptr_restrict = 1

* Applying /etc/sysctl.d/10-link-restrictions.conf ...

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/10-magic-sysrq.conf ...

kernel.sysrq = 176

* Applying /etc/sysctl.d/10-network-security.conf ...

net.ipv4.conf.default.rp_filter = 2

net.ipv4.conf.all.rp_filter = 2

* Applying /etc/sysctl.d/10-ptrace.conf ...

kernel.yama.ptrace_scope = 1

* Applying /etc/sysctl.d/10-zeropage.conf ...

vm.mmap_min_addr = 65536

* Applying /usr/lib/sysctl.d/50-default.conf ...

net.ipv4.conf.default.promote_secondaries = 1

sysctl: setting key "net.ipv4.conf.all.promote_secondaries": Invalid argument

net.ipv4.ping_group_range = 0 2147483647

net.core.default_qdisc = fq_codel

fs.protected_regular = 1

fs.protected_fifos = 1

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /etc/sysctl.d/99-replicated-ipv4.conf ...

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.conf.all.forwarding = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /usr/lib/sysctl.d/protect-links.conf ...

fs.protected_fifos = 1

fs.protected_hardlinks = 1

fs.protected_regular = 2

fs.protected_symlinks = 1

* Applying /etc/sysctl.conf ...

Adding kernel modules ip_vs, ip_vs_rr, ip_vs_wrr, and ip_vs_sh

⚙ Install kubelet, kubectl and cni host packages

kubelet command missing - will install host components

⚙ Installing host packages kubelet-1.27.5 kubectl-1.27.5 git

Selecting previously unselected package conntrack.

(Reading database ... 71990 files and directories currently installed.)

Preparing to unpack .../conntrack_1%3a1.4.5-2_amd64.deb ...

Unpacking conntrack (1:1.4.5-2) ...

Selecting previously unselected package ebtables.

Preparing to unpack .../ebtables_2.0.11-3build1_amd64.deb ...

Unpacking ebtables (2.0.11-3build1) ...

Preparing to unpack .../ethtool_1%3a5.4-1_amd64.deb ...

Unpacking ethtool (1:5.4-1) over (1:5.4-1) ...

Preparing to unpack .../git_1%3a2.25.1-1ubuntu3.11_amd64.deb ...

Unpacking git (1:2.25.1-1ubuntu3.11) over (1:2.25.1-1ubuntu3.11) ...

Preparing to unpack .../git-man_1%3a2.25.1-1ubuntu3.11_all.deb ...

Unpacking git-man (1:2.25.1-1ubuntu3.11) over (1:2.25.1-1ubuntu3.11) ...

Preparing to unpack .../iproute2_5.5.0-1ubuntu1_amd64.deb ...

Unpacking iproute2 (5.5.0-1ubuntu1) over (5.5.0-1ubuntu1) ...

Preparing to unpack .../iptables_1.8.4-3ubuntu2.1_amd64.deb ...

Unpacking iptables (1.8.4-3ubuntu2.1) over (1.8.4-3ubuntu2) ...

Preparing to unpack .../kmod_27-1ubuntu2.1_amd64.deb ...

Unpacking kmod (27-1ubuntu2.1) over (27-1ubuntu2) ...

Selecting previously unselected package kubectl.

Preparing to unpack .../kubectl_1.27.5-00_amd64.deb ...

Unpacking kubectl (1.27.5-00) ...

Selecting previously unselected package kubelet.

Preparing to unpack .../kubelet_1.27.5-00_amd64.deb ...

Unpacking kubelet (1.27.5-00) ...

Selecting previously unselected package kubernetes-cni.

Preparing to unpack .../kubernetes-cni_1.2.0-00_amd64.deb ...

Unpacking kubernetes-cni (1.2.0-00) ...

Preparing to unpack .../less_551-1ubuntu0.1_amd64.deb ...

Unpacking less (551-1ubuntu0.1) over (551-1ubuntu0.1) ...

Preparing to unpack .../libatm1_1%3a2.5.1-4_amd64.deb ...

Unpacking libatm1:amd64 (1:2.5.1-4) over (1:2.5.1-4) ...

Preparing to unpack .../libbsd0_0.10.0-1_amd64.deb ...

Unpacking libbsd0:amd64 (0.10.0-1) over (0.10.0-1) ...

Preparing to unpack .../libcap2_1%3a2.32-1ubuntu0.1_amd64.deb ...

Unpacking libcap2:amd64 (1:2.32-1ubuntu0.1) over (1:2.32-1ubuntu0.1) ...

Preparing to unpack .../libcap2-bin_1%3a2.32-1ubuntu0.1_amd64.deb ...

Unpacking libcap2-bin (1:2.32-1ubuntu0.1) over (1:2.32-1ubuntu0.1) ...

Preparing to unpack .../libcbor0.6_0.6.0-0ubuntu1_amd64.deb ...

Unpacking libcbor0.6:amd64 (0.6.0-0ubuntu1) over (0.6.0-0ubuntu1) ...

Preparing to unpack .../libcurl3-gnutls_7.68.0-1ubuntu2.19_amd64.deb ...

Unpacking libcurl3-gnutls:amd64 (7.68.0-1ubuntu2.19) over (7.68.0-1ubuntu2.19) ...

Preparing to unpack .../libedit2_3.1-20191231-1_amd64.deb ...

Unpacking libedit2:amd64 (3.1-20191231-1) over (3.1-20191231-1) ...

dpkg: warning: downgrading libelf1:amd64 from 0.176-1.1ubuntu0.1 to 0.176-1.1build1

Preparing to unpack .../libelf1_0.176-1.1build1_amd64.deb ...

Unpacking libelf1:amd64 (0.176-1.1build1) over (0.176-1.1ubuntu0.1) ...

Preparing to unpack .../liberror-perl_0.17029-1_all.deb ...

Unpacking liberror-perl (0.17029-1) over (0.17029-1) ...

Preparing to unpack .../libexpat1_2.2.9-1ubuntu0.6_amd64.deb ...

Unpacking libexpat1:amd64 (2.2.9-1ubuntu0.6) over (2.2.9-1ubuntu0.6) ...

Preparing to unpack .../libfido2-1_1.3.1-1ubuntu2_amd64.deb ...

Unpacking libfido2-1:amd64 (1.3.1-1ubuntu2) over (1.3.1-1ubuntu2) ...

Preparing to unpack .../libgdbm6_1.18.1-5_amd64.deb ...

Unpacking libgdbm6:amd64 (1.18.1-5) over (1.18.1-5) ...

Preparing to unpack .../libgdbm-compat4_1.18.1-5_amd64.deb ...

Unpacking libgdbm-compat4:amd64 (1.18.1-5) over (1.18.1-5) ...

Preparing to unpack .../libip4tc2_1.8.4-3ubuntu2.1_amd64.deb ...

Unpacking libip4tc2:amd64 (1.8.4-3ubuntu2.1) over (1.8.4-3ubuntu2) ...

Preparing to unpack .../libip6tc2_1.8.4-3ubuntu2.1_amd64.deb ...

Unpacking libip6tc2:amd64 (1.8.4-3ubuntu2.1) over (1.8.4-3ubuntu2) ...

Preparing to unpack .../libkmod2_27-1ubuntu2.1_amd64.deb ...

Unpacking libkmod2:amd64 (27-1ubuntu2.1) over (27-1ubuntu2) ...

Preparing to unpack .../libmnl0_1.0.4-2_amd64.deb ...

Unpacking libmnl0:amd64 (1.0.4-2) over (1.0.4-2) ...

Preparing to unpack .../libnetfilter-conntrack3_1.0.7-2_amd64.deb ...

Unpacking libnetfilter-conntrack3:amd64 (1.0.7-2) over (1.0.7-2) ...

Preparing to unpack .../libnfnetlink0_1.0.1-3build1_amd64.deb ...

Unpacking libnfnetlink0:amd64 (1.0.1-3build1) over (1.0.1-3build1) ...

Preparing to unpack .../libnftnl11_1.1.5-1_amd64.deb ...

Unpacking libnftnl11:amd64 (1.1.5-1) over (1.1.5-1) ...

Preparing to unpack .../libpam-cap_1%3a2.32-1ubuntu0.1_amd64.deb ...

Unpacking libpam-cap:amd64 (1:2.32-1ubuntu0.1) over (1:2.32-1ubuntu0.1) ...

Preparing to unpack .../libperl5.30_5.30.0-9ubuntu0.4_amd64.deb ...

Unpacking libperl5.30:amd64 (5.30.0-9ubuntu0.4) over (5.30.0-9ubuntu0.4) ...

Preparing to unpack .../libwrap0_7.6.q-30_amd64.deb ...

Unpacking libwrap0:amd64 (7.6.q-30) over (7.6.q-30) ...

Preparing to unpack .../libx11-6_2%3a1.6.9-2ubuntu1.5_amd64.deb ...

Unpacking libx11-6:amd64 (2:1.6.9-2ubuntu1.5) over (2:1.6.9-2ubuntu1.5) ...

Preparing to unpack .../libx11-data_2%3a1.6.9-2ubuntu1.5_all.deb ...

Unpacking libx11-data (2:1.6.9-2ubuntu1.5) over (2:1.6.9-2ubuntu1.5) ...

Preparing to unpack .../libxau6_1%3a1.0.9-0ubuntu1_amd64.deb ...

Unpacking libxau6:amd64 (1:1.0.9-0ubuntu1) over (1:1.0.9-0ubuntu1) ...

Preparing to unpack .../libxcb1_1.14-2_amd64.deb ...

Unpacking libxcb1:amd64 (1.14-2) over (1.14-2) ...

Preparing to unpack .../libxdmcp6_1%3a1.1.3-0ubuntu1_amd64.deb ...

Unpacking libxdmcp6:amd64 (1:1.1.3-0ubuntu1) over (1:1.1.3-0ubuntu1) ...

Preparing to unpack .../libxext6_2%3a1.3.4-0ubuntu1_amd64.deb ...

Unpacking libxext6:amd64 (2:1.3.4-0ubuntu1) over (2:1.3.4-0ubuntu1) ...

Preparing to unpack .../libxmuu1_2%3a1.1.3-0ubuntu1_amd64.deb ...

Unpacking libxmuu1:amd64 (2:1.1.3-0ubuntu1) over (2:1.1.3-0ubuntu1) ...

Preparing to unpack .../libxtables12_1.8.4-3ubuntu2.1_amd64.deb ...

Unpacking libxtables12:amd64 (1.8.4-3ubuntu2.1) over (1.8.4-3ubuntu2) ...

Preparing to unpack .../netbase_6.1_all.deb ...

Unpacking netbase (6.1) over (6.1) ...

Preparing to unpack .../openssh-client_1%3a8.2p1-4ubuntu0.9_amd64.deb ...

Unpacking openssh-client (1:8.2p1-4ubuntu0.9) over (1:8.2p1-4ubuntu0.9) ...

Preparing to unpack .../patch_2.7.6-6_amd64.deb ...

Unpacking patch (2.7.6-6) over (2.7.6-6) ...

Preparing to unpack .../perl_5.30.0-9ubuntu0.4_amd64.deb ...

Unpacking perl (5.30.0-9ubuntu0.4) over (5.30.0-9ubuntu0.4) ...

Preparing to unpack .../perl-modules-5.30_5.30.0-9ubuntu0.4_all.deb ...

Unpacking perl-modules-5.30 (5.30.0-9ubuntu0.4) over (5.30.0-9ubuntu0.4) ...

Selecting previously unselected package socat.

Preparing to unpack .../socat_1.7.3.3-2_amd64.deb ...

Unpacking socat (1.7.3.3-2) ...

Preparing to unpack .../xauth_1%3a1.1-0ubuntu1_amd64.deb ...

Unpacking xauth (1:1.1-0ubuntu1) over (1:1.1-0ubuntu1) ...

Setting up ethtool (1:5.4-1) ...

Setting up git-man (1:2.25.1-1ubuntu3.11) ...

Setting up kubectl (1.27.5-00) ...

Setting up kubernetes-cni (1.2.0-00) ...

Setting up less (551-1ubuntu0.1) ...

Setting up libatm1:amd64 (1:2.5.1-4) ...

Setting up libbsd0:amd64 (0.10.0-1) ...

Setting up libcap2:amd64 (1:2.32-1ubuntu0.1) ...

Setting up libcap2-bin (1:2.32-1ubuntu0.1) ...

Setting up libcbor0.6:amd64 (0.6.0-0ubuntu1) ...

Setting up libcurl3-gnutls:amd64 (7.68.0-1ubuntu2.19) ...

Setting up libedit2:amd64 (3.1-20191231-1) ...

Setting up libelf1:amd64 (0.176-1.1build1) ...

Setting up libexpat1:amd64 (2.2.9-1ubuntu0.6) ...

Setting up libfido2-1:amd64 (1.3.1-1ubuntu2) ...

Setting up libgdbm6:amd64 (1.18.1-5) ...

Setting up libgdbm-compat4:amd64 (1.18.1-5) ...

Setting up libip4tc2:amd64 (1.8.4-3ubuntu2.1) ...

Setting up libip6tc2:amd64 (1.8.4-3ubuntu2.1) ...

Setting up libkmod2:amd64 (27-1ubuntu2.1) ...

Setting up libmnl0:amd64 (1.0.4-2) ...

Setting up libnfnetlink0:amd64 (1.0.1-3build1) ...

Setting up libnftnl11:amd64 (1.1.5-1) ...

Setting up libpam-cap:amd64 (1:2.32-1ubuntu0.1) ...

Setting up libwrap0:amd64 (7.6.q-30) ...

Setting up libx11-data (2:1.6.9-2ubuntu1.5) ...

Setting up libxau6:amd64 (1:1.0.9-0ubuntu1) ...

Setting up libxdmcp6:amd64 (1:1.1.3-0ubuntu1) ...

Setting up libxtables12:amd64 (1.8.4-3ubuntu2.1) ...

Setting up netbase (6.1) ...

Setting up openssh-client (1:8.2p1-4ubuntu0.9) ...

Setting up patch (2.7.6-6) ...

Setting up perl-modules-5.30 (5.30.0-9ubuntu0.4) ...

Setting up socat (1.7.3.3-2) ...

Setting up ebtables (2.0.11-3build1) ...

Setting up iproute2 (5.5.0-1ubuntu1) ...

Setting up kmod (27-1ubuntu2.1) ...

update-initramfs: deferring update (trigger activated)

Setting up libnetfilter-conntrack3:amd64 (1.0.7-2) ...

Setting up libperl5.30:amd64 (5.30.0-9ubuntu0.4) ...

Setting up libxcb1:amd64 (1.14-2) ...

Setting up perl (5.30.0-9ubuntu0.4) ...

Setting up conntrack (1:1.4.5-2) ...

Setting up iptables (1.8.4-3ubuntu2.1) ...

Setting up kubelet (1.27.5-00) ...

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.

Setting up liberror-perl (0.17029-1) ...

Setting up libx11-6:amd64 (2:1.6.9-2ubuntu1.5) ...

Setting up libxext6:amd64 (2:1.3.4-0ubuntu1) ...

Setting up libxmuu1:amd64 (2:1.1.3-0ubuntu1) ...

Setting up xauth (1:1.1-0ubuntu1) ...

Setting up git (1:2.25.1-1ubuntu3.11) ...

Processing triggers for man-db (2.9.1-1) ...

Processing triggers for systemd (245.4-4ubuntu3.20) ...

Processing triggers for mime-support (3.64ubuntu1) ...

Processing triggers for libc-bin (2.31-0ubuntu9.7) ...

Processing triggers for initramfs-tools (0.136ubuntu6.3) ...

update-initramfs: Generating /boot/initrd.img-5.4.0-162-generic

✔ Host packages kubelet-1.27.5 kubectl-1.27.5 git installed

⚙ Checking package manager status

Reading package lists...

Building dependency tree...

Reading state information...

✔ Status checked successfully. No broken packages were found.

Restarting Kubelet

✔ Kubernetes host packages installed

unpacking registry.k8s.io/kube-proxy:v1.27.5 (sha256:85470805f26fda2af581349496a7f0d7aa7249cf938343de764ccde8b138cb67)...done

unpacking registry.k8s.io/coredns/coredns:v1.10.1 (sha256:b64ac4c9100dd8ba255f2a31c2ad165f062e2cf087af9b6ffc68910e90de55d8)...done

unpacking registry.k8s.io/pause:3.9 (sha256:c22930374586e8889f175371d1057a5e7b9a28c3876b1226aa8cdf9ef715633b)...done

unpacking registry.k8s.io/kube-apiserver:v1.27.5 (sha256:ffdc02f8a270ae3b59fa032927154b05c63737fe23ef6c5e115ff98504326418)...done

unpacking registry.k8s.io/kube-controller-manager:v1.27.5 (sha256:b434d7b6f2846c5dbf65c141a703cf84b06c5644139391d236d56e646da8efb7)...done

unpacking registry.k8s.io/etcd:3.5.7-0 (sha256:20b0064403a9a449896ca9ef94e6d02b1e6c3f68e0cee79f7b5ae066d9eb9cc2)...done

unpacking registry.k8s.io/kube-scheduler:v1.27.5 (sha256:34c4c19ebf3ebfd85e9b13d60ffa27f3d552e4edd038a9a7bf5c3bc51c166cf7)...done

k8s.gcr.io/coredns/coredns:v1.10.1

k8s.gcr.io/etcd:3.5.7-0

k8s.gcr.io/kube-apiserver:v1.27.5

k8s.gcr.io/kube-controller-manager:v1.27.5

k8s.gcr.io/kube-proxy:v1.27.5

k8s.gcr.io/kube-scheduler:v1.27.5

k8s.gcr.io/pause:3.9

/var/lib/kurl/krew /var/lib/kurl ~

/var/lib/kurl ~

/var/lib/kurl/packages/kubernetes/1.27.5/assets /var/lib/kurl ~

/var/lib/kurl ~

unpacking registry.k8s.io/pause:3.6 (sha256:79b611631c0d19e9a975fb0a8511e5153789b4c26610d1842e9f735c57cc8b13)...done

registry.k8s.io/coredns:v1.10.1

k8s.gcr.io/coredns:v1.10.1

unpacking docker.io/flannel/flannel:v0.22.2 (sha256:b0e87c28fac2ee7e154019a79be409ffcecb2203c0360848bd58261d7637f6e6)...done

unpacking docker.io/flannel/flannel-cni-plugin:v1.2.0 (sha256:7ed685e87806deacfe7537495ac4bb13bf9bfdcb9af62de2c8071bb6718a4f19)...done

unpacking docker.io/library/haproxy:2.8.3-alpine3.18 (sha256:6b995e8244877b8f5a0bc53c6058cf323ae623234ae3ca3f209c934cbaf70402)...done

unpacking docker.io/replicated/ekco:v0.28.3 (sha256:9b10dc9f669d32daa736abb1dc3a68f5635523c68567c9cfe1608b52ac29e0a6)...done

unpacking docker.io/openebs/linux-utils:3.4.0 (sha256:70c5cef26626f9239a33f93abe488030229d5e40557e2eb29cf6a58be337ea4d)...done

unpacking docker.io/openebs/provisioner-localpv:3.4.0 (sha256:892165c408cc2d7a76e306e9b85d3805ec1f86b8515822f9e8837f0d794993ab)...done

unpacking docker.io/minio/minio:RELEASE.2023-09-04T19-57-37Z (sha256:88ce507f6ca0fe146d9f30f7835752199900ab2e28047a8a96a982942e50bebb)...done

unpacking docker.io/envoyproxy/envoy:v1.26.4 (sha256:45b8e22aebc94cfcbc939180e58a03c4301bde8811826761239c71cd7c91a38c)...done

unpacking ghcr.io/projectcontour/contour:v1.25.2 (sha256:9d86ec004463b40a79cd236431258ec2e516fa41dfc2dbc9116daca229d726d6)...done

unpacking docker.io/library/registry:2.8.2 (sha256:3556e52588ae78b5778a1b4c53ded875af4ebd239f2032a2f335be4fb0f00025)...done

unpacking docker.io/kurlsh/s3cmd:20230406-9a6d89f (sha256:5e33493388c7e2eb04483813f4d42b371564d147d61bb62d3594d2c75b4dbce9)...done

unpacking quay.io/prometheus-operator/prometheus-operator:v0.67.1 (sha256:1ef4ad4d0cf569af5a28e26282df800e5c888a8cb10d38b42b02b4fd31364261)...done

unpacking quay.io/prometheus/alertmanager:v0.26.0 (sha256:25b47d4522c39e36865a58bb884eb49e51b80f3b18dfe8a885fee31d7fd3dedc)...done

unpacking quay.io/prometheus-operator/prometheus-config-reloader:v0.67.1 (sha256:a3341d7449675c213429f039de8ffdd2a9611b310f38e83fa178a21be67e5c2f)...done

unpacking quay.io/prometheus/prometheus:v2.46.0 (sha256:f5f3df700bc84cfdc98653da878f0942b7e995e57ddad3036dbbbc6ab0d83946)...done

unpacking quay.io/prometheus/node-exporter:v1.6.1 (sha256:9c43c73493f9e43ffa3261fbf2c9a67060d2883152d77be207f7da76ffd04979)...done

unpacking quay.io/kiwigrid/k8s-sidecar:1.24.6 (sha256:b55392685b8be84d6617f1cc1ace9b91830b7e6cc0bc1c1be09f311d3bc8d52c)...done

unpacking docker.io/grafana/grafana:10.1.1 (sha256:232c28e60a0a3dd77ca051c78e586e63b9aa704a299039b567c2690ab4b092a1)...done

unpacking registry.k8s.io/prometheus-adapter/prometheus-adapter:v0.11.0 (sha256:fd51baccf80e7d4a2fc5f3630bb339e5decd5f3def3f19adf47e7384c6993708)...done

unpacking registry.k8s.io/kube-state-metrics/kube-state-metrics:v2.10.0 (sha256:aa6ca9a4ea87c95b3d05c476832969f3df69802e4bcf6ec061b044c05b7db5fa)...done

k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.10.0

k8s.gcr.io/prometheus-adapter/prometheus-adapter:v0.11.0

⚙ Initialize Kubernetes

⚙ generate kubernetes bootstrap token

Kubernetes bootstrap token: q3mmya.iaar7nxgt9nu63rv

This token will expire in 24 hours

[init] Using Kubernetes version: v1.27.5

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

W0912 20:09:28.908467 16730 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local repicated-demo-primary] and IPs [10.96.0.1 10.10.0.22]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost repicated-demo-primary] and IPs [10.10.0.22 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost repicated-demo-primary] and IPs [10.10.0.22 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 11.505059 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node repicated-demo-primary as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[bootstrap-token] Using token: q3mmya.iaar7nxgt9nu63rv

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.10.0.22:6443 --token q3mmya.iaar7nxgt9nu63rv \

--discovery-token-ca-cert-hash sha256:acfb88df354da90b7ff8c17c29c87673e2514611d3048710e49795a13ad9e2c7

node/repicated-demo-primary already uncordoned

Waiting for kubernetes api health to report ok

node/repicated-demo-primary labeled

✔ Kubernetes Master Initialized

Kubernetes control plane is running at https://10.10.0.22:6443

CoreDNS is running at https://10.10.0.22:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

namespace/kurl created

✔ Cluster Initialized

Applying resources

service/registry created

secret/kurl-troubleshoot-spec created

customresourcedefinition.apiextensions.k8s.io/installers.cluster.kurl.sh created

installer.cluster.kurl.sh/latest created

installer.cluster.kurl.sh/latest labeled

configmap/kurl-last-config created

configmap/kurl-current-config created

configmap/kurl-current-config patched

configmap/kurl-cluster-uuid created

secret/kurl-supportbundle-spec created

⚙ Addon flannel 0.22.2

# Warning: 'patchesStrategicMerge' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

secret/flannel-troubleshoot-spec created

daemonset.apps/kube-flannel-ds created

- Waiting up to 5 minutes for Flannel to become healthy

✔ Flannel is healthy

⚙ Checking cluster networking

Waiting up to 10 minutes for node to report Ready

service/kurlnet created

pod/kurlnet-server created

pod/kurlnet-client created

Waiting for kurlnet-client pod to start

Warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.

pod "kurlnet-client" force deleted

pod "kurlnet-server" force deleted

service "kurlnet" deleted

configmap/kurl-current-config patched

⚙ Addon ekco 0.28.3

namespace/minio created

namespace/kurl configured

serviceaccount/ekco created

role.rbac.authorization.k8s.io/ekco-rotate-certs created

clusterrole.rbac.authorization.k8s.io/ekco-cluster created

clusterrole.rbac.authorization.k8s.io/ekco-kube-system created

clusterrole.rbac.authorization.k8s.io/ekco-kurl created

clusterrole.rbac.authorization.k8s.io/ekco-minio created

clusterrole.rbac.authorization.k8s.io/ekco-pvmigrate created

clusterrole.rbac.authorization.k8s.io/ekco-velero created

rolebinding.rbac.authorization.k8s.io/ekco-rotate-certs created

clusterrolebinding.rbac.authorization.k8s.io/ekco-cluster created

configmap/ekco-config created

service/ekc-operator created

deployment.apps/ekc-operator created

rolebinding.rbac.authorization.k8s.io/ekco-kube-system created

clusterrolebinding.rbac.authorization.k8s.io/ekco-pvmigrate created

clusterrolebinding.rbac.authorization.k8s.io/ekco-kurl created

clusterrolebinding.rbac.authorization.k8s.io/ekco-velero created

rolebinding.rbac.authorization.k8s.io/ekco-minio created

pod "ekc-operator-59c76d5b89-6sgnw" deleted

⚙ Installing ekco reboot service

Created symlink /etc/systemd/system/multi-user.target.wants/ekco-reboot.service → /etc/systemd/system/ekco-reboot.service.

✔ ekco reboot service installed

⚙ Installing ekco purge node command

✔ ekco purge node command installed

configmap/kurl-current-config patched

⚙ Addon openebs 3.8.0

customresourcedefinition.apiextensions.k8s.io/blockdeviceclaims.openebs.io created

customresourcedefinition.apiextensions.k8s.io/blockdevices.openebs.io created

namespace/openebs created

serviceaccount/openebs created

clusterrole.rbac.authorization.k8s.io/openebs created

clusterrolebinding.rbac.authorization.k8s.io/openebs created

deployment.apps/openebs-localpv-provisioner created

⚙ Waiting for OpenEBS CustomResourceDefinitions to be ready

✔ OpenEBS CustomResourceDefinitions are ready

OpenEBS LocalPV will be installed as the default storage class.

storageclass.storage.k8s.io/local created

configmap/kurl-current-config patched

⚙ Addon minio 2023-09-04T19-57-37Z

namespace/minio configured

secret/minio-credentials created

service/ha-minio created

service/minio created

persistentvolumeclaim/minio-pv-claim created

deployment.apps/minio created

statefulset.apps/ha-minio created

awaiting minio deployment

awaiting minio readiness

awaiting minio endpoint

awaiting minio deployment

awaiting minio readiness

awaiting minio endpoint

configmap/kurl-current-config patched

⚙ Addon contour 1.25.2

namespace/projectcontour created

# Warning: 'patchesStrategicMerge' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

namespace/projectcontour configured

customresourcedefinition.apiextensions.k8s.io/contourconfigurations.projectcontour.io created

customresourcedefinition.apiextensions.k8s.io/contourdeployments.projectcontour.io created

customresourcedefinition.apiextensions.k8s.io/extensionservices.projectcontour.io created

customresourcedefinition.apiextensions.k8s.io/httpproxies.projectcontour.io created

customresourcedefinition.apiextensions.k8s.io/tlscertificatedelegations.projectcontour.io created

serviceaccount/contour created

serviceaccount/contour-certgen created

serviceaccount/envoy created

role.rbac.authorization.k8s.io/contour created

role.rbac.authorization.k8s.io/contour-certgen created

clusterrole.rbac.authorization.k8s.io/contour created

rolebinding.rbac.authorization.k8s.io/contour created

rolebinding.rbac.authorization.k8s.io/contour-rolebinding created

clusterrolebinding.rbac.authorization.k8s.io/contour created

configmap/contour created

service/contour created

service/envoy created

deployment.apps/contour created

daemonset.apps/envoy created

Warning: metadata.name: this is used in Pod names and hostnames, which can result in surprising behavior; a DNS label is recommended: [must not contain dots]

job.batch/contour-certgen-v1.25.2 created

configmap/kurl-current-config patched

⚙ Addon registry 2.8.2

- Installing Registry

Applying resources

Adding secret

Checking if PVC and Object Store exists

PVC and Object Store were found. Creating Registry Deployment with Object Store data

object store bucket docker-registry created

Command "format-address" is deprecated, use 'kurl netutil format-ip-address' instead

Object Store IP: 10.96.0.160

Object Store Hostname: minio.minio

Checking if PVC migration will be required

✔ Registry installed successfully

configmap/registry-config created

configmap/registry-velero-config created

secret/registry-s3-secret created

secret/registry-session-secret created

service/registry configured

deployment.apps/registry created

- Configuring Registry

Checking if secrets exist

Deleting registry-htpasswd and registry-creds secrets

Generating password

Patching password

secret/registry-htpasswd created

secret/registry-htpasswd patched

secret/registry-creds created

secret/registry-creds patched

Secrets configured successfully

Checking Docker Registry: 10.96.2.205

/var/lib/kurl/addons/registry/2.8.2/tmp /var/lib/kurl ~

Gathering CA from server

Generating a private key and a corresponding Certificate Signing Request (CSR) using OpenSSL

Generating a RSA private key

.................................................................................................................+++++

..........+++++

writing new private key to 'registry.key'

-----

Generating a self-signed X.509 certificate using OpenSSL

Signature ok

subject=CN = registry.kurl.svc.cluster.local

Getting CA Private Key

secret/registry-pki created

/var/lib/kurl ~

✔ Registry configured successfully

- Checking if registry is healthy

waiting for the registry to start

✔ Registry is healthy

configmap/kurl-current-config patched

⚙ Addon prometheus 0.67.1-50.3.1

# Warning: 'patchesJson6902' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/prometheusagents.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/scrapeconfigs.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com serverside-applied

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com serverside-applied

Error from server (NotFound): deployments.apps "kube-state-metrics" not found

Error from server (NotFound): daemonsets.apps "node-exporter" not found

Error from server (NotFound): deployments.apps "grafana" not found

Error from server (NotFound): deployments.apps "prometheus-adapter" not found

Error from server (NotFound): alertmanagers.monitoring.coreos.com "main" not found

Error from server (NotFound): services "kube-state-metrics" not found

Error from server (NotFound): services "prometheus-operator" not found

Error from server (NotFound): services "grafana" not found

Error from server (NotFound): services "alertmanager-main" not found

Error from server (NotFound): services "prometheus-k8s" not found

# Warning: 'patchesJson6902' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

# Warning: 'patchesStrategicMerge' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

namespace/monitoring created

serviceaccount/grafana created

serviceaccount/k8s created

serviceaccount/kube-state-metrics created

serviceaccount/prometheus-adapter created

serviceaccount/prometheus-alertmanager created

serviceaccount/prometheus-node-exporter created

serviceaccount/prometheus-operator created

role.rbac.authorization.k8s.io/grafana created

clusterrole.rbac.authorization.k8s.io/grafana-clusterrole created

clusterrole.rbac.authorization.k8s.io/k8s created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter-resource-reader created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter-server-resources created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

rolebinding.rbac.authorization.k8s.io/prometheus-adapter-auth-reader created

rolebinding.rbac.authorization.k8s.io/grafana created

clusterrolebinding.rbac.authorization.k8s.io/grafana-clusterrolebinding created

clusterrolebinding.rbac.authorization.k8s.io/k8s created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter-hpa-controller created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter-resource-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter-system-auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

configmap/grafana created

configmap/grafana-config-dashboards created

configmap/k8s created

configmap/prometheus-adapter created

configmap/prometheus-alertmanager-overview created

configmap/prometheus-apiserver created

configmap/prometheus-cluster-total created

configmap/prometheus-controller-manager created

configmap/prometheus-etcd created

configmap/prometheus-grafana-datasource created

configmap/prometheus-grafana-overview created

configmap/prometheus-k8s-coredns created

configmap/prometheus-k8s-resources-cluster created

configmap/prometheus-k8s-resources-multicluster created

configmap/prometheus-k8s-resources-namespace created

configmap/prometheus-k8s-resources-node created

configmap/prometheus-k8s-resources-pod created

configmap/prometheus-k8s-resources-workload created

configmap/prometheus-k8s-resources-workloads-namespace created

configmap/prometheus-kubelet created

configmap/prometheus-namespace-by-pod created

configmap/prometheus-namespace-by-workload created

configmap/prometheus-node-cluster-rsrc-use created

configmap/prometheus-node-rsrc-use created

configmap/prometheus-nodes created

configmap/prometheus-nodes-darwin created

configmap/prometheus-persistentvolumesusage created

configmap/prometheus-pod-total created

configmap/prometheus-proxy created

configmap/prometheus-scheduler created

configmap/prometheus-workload-total created

secret/alertmanager-prometheus-alertmanager created

secret/grafana-admin created

service/prometheus-coredns created

service/prometheus-kube-controller-manager created

service/prometheus-kube-etcd created

service/prometheus-kube-proxy created

service/prometheus-kube-scheduler created

service/grafana created

service/kube-state-metrics created

service/prometheus-adapter created

service/prometheus-alertmanager created

service/prometheus-k8s created

service/prometheus-node-exporter created

service/prometheus-operator created

deployment.apps/grafana created

deployment.apps/kube-state-metrics created

deployment.apps/prometheus-adapter created

deployment.apps/prometheus-operator created

apiservice.apiregistration.k8s.io/v1beta1.custom.metrics.k8s.io created

daemonset.apps/prometheus-node-exporter created

alertmanager.monitoring.coreos.com/prometheus-alertmanager created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/k8s-operator created

prometheusrule.monitoring.coreos.com/prometheus-alertmanager.rules created

prometheusrule.monitoring.coreos.com/prometheus-config-reloaders created

prometheusrule.monitoring.coreos.com/prometheus-etcd created

prometheusrule.monitoring.coreos.com/prometheus-general.rules created

prometheusrule.monitoring.coreos.com/prometheus-k8s.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-apiserver-availability.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-apiserver-burnrate.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-apiserver-histogram.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-apiserver-slos created

prometheusrule.monitoring.coreos.com/prometheus-kube-prometheus-general.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-prometheus-node-recording.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-scheduler.rules created

prometheusrule.monitoring.coreos.com/prometheus-kube-state-metrics created

prometheusrule.monitoring.coreos.com/prometheus-kubelet.rules created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-apps created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-resources created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-storage created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system-apiserver created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system-controller-manager created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system-kube-proxy created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system-kubelet created

prometheusrule.monitoring.coreos.com/prometheus-kubernetes-system-scheduler created

prometheusrule.monitoring.coreos.com/prometheus-node-exporter created

prometheusrule.monitoring.coreos.com/prometheus-node-exporter.rules created

prometheusrule.monitoring.coreos.com/prometheus-node-network created

prometheusrule.monitoring.coreos.com/prometheus-node.rules created

servicemonitor.monitoring.coreos.com/grafana created

servicemonitor.monitoring.coreos.com/k8s created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

servicemonitor.monitoring.coreos.com/prometheus-alertmanager created

servicemonitor.monitoring.coreos.com/prometheus-apiserver created

servicemonitor.monitoring.coreos.com/prometheus-coredns created

servicemonitor.monitoring.coreos.com/prometheus-kube-controller-manager created

servicemonitor.monitoring.coreos.com/prometheus-kube-etcd created

servicemonitor.monitoring.coreos.com/prometheus-kube-proxy created

servicemonitor.monitoring.coreos.com/prometheus-kube-scheduler created

servicemonitor.monitoring.coreos.com/prometheus-kubelet created

servicemonitor.monitoring.coreos.com/prometheus-node-exporter created

servicemonitor.monitoring.coreos.com/prometheus-operator created

configmap/kurl-current-config patched

⚙ Persisting the kurl installer spec

configmap/kurl-config created

✔ Kurl installer spec was successfully persisted in the kurl configmap

⚙ Checking proxy configuration with Containerd

Skipping test. No HTTP proxy configuration found.

No resources found

Installation Complete ✔

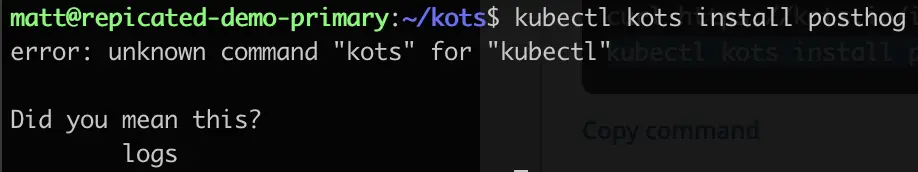

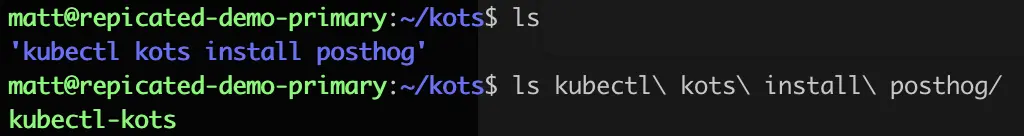

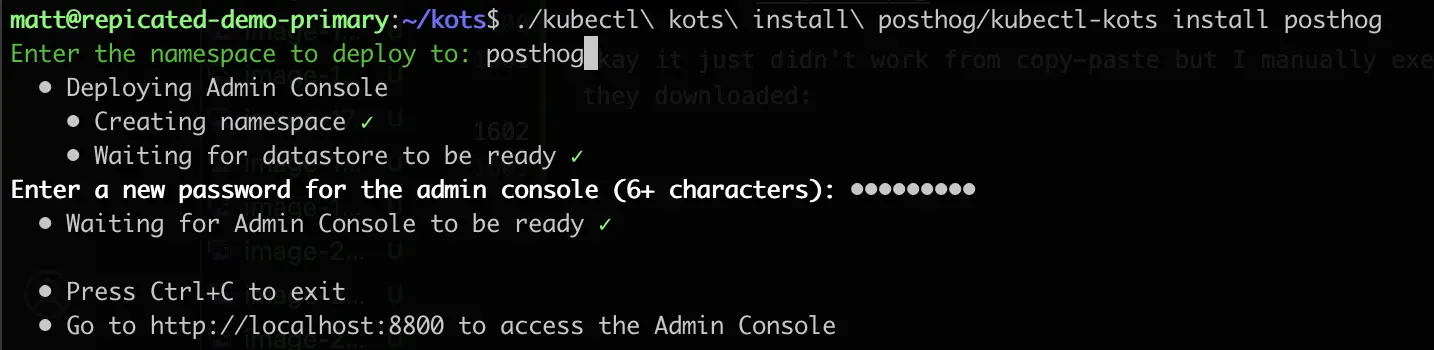

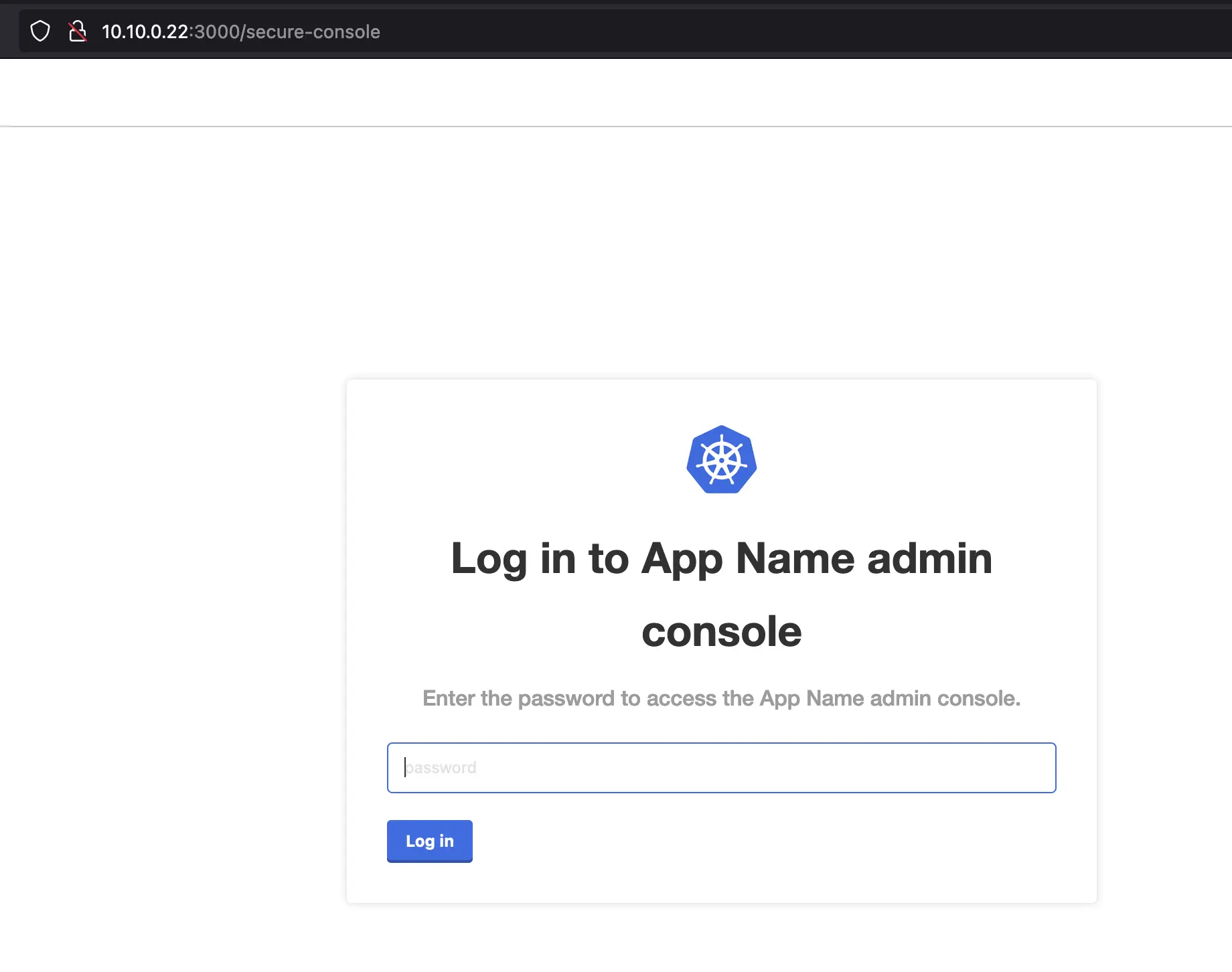

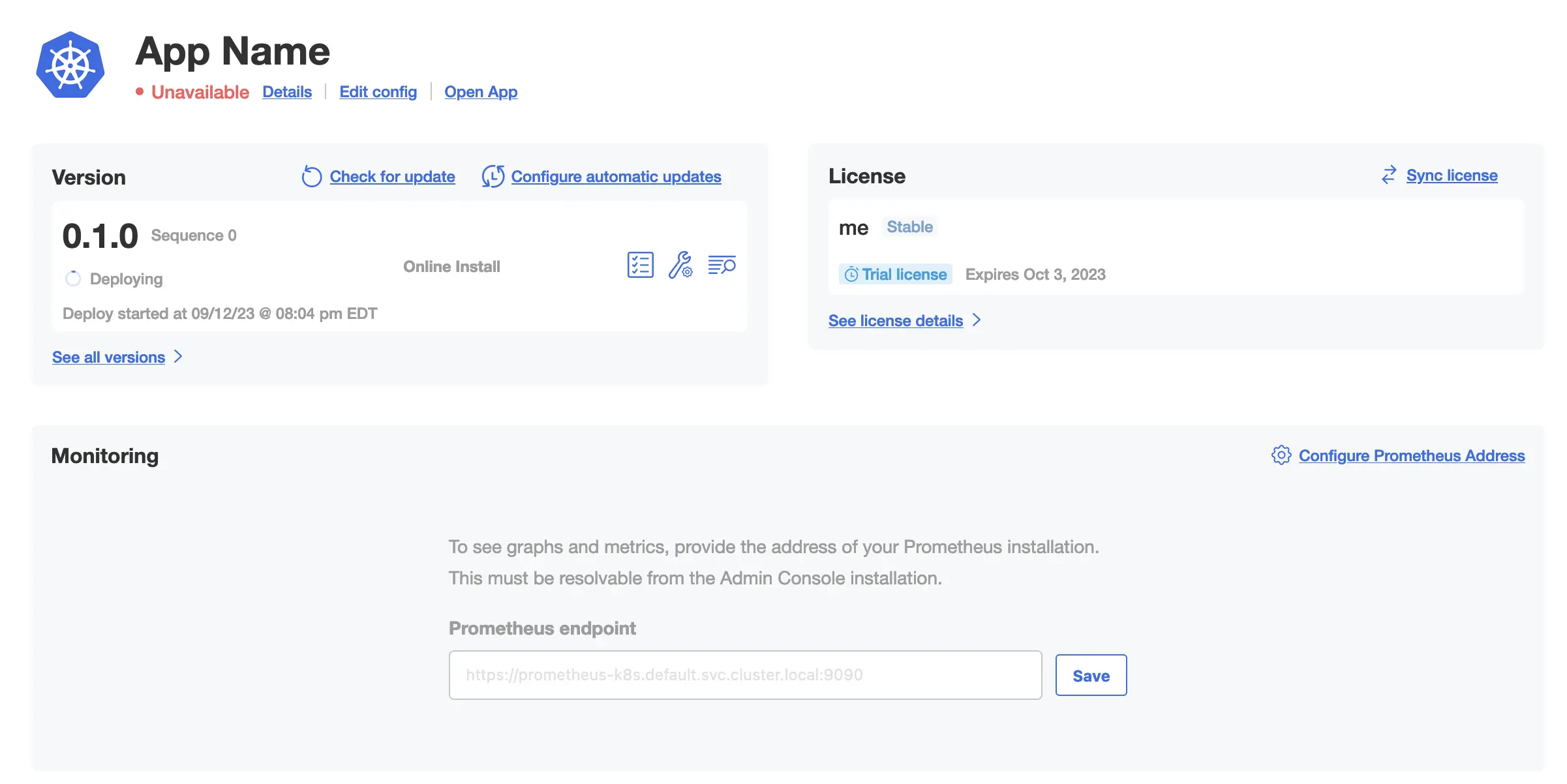

The UIs of Prometheus, Grafana and Alertmanager have been exposed on NodePorts 30900, 30902 and 30903 respectively.
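
To sanity-check that those three NodePorts are actually reachable from my workstation, a small script along these lines should do. This is only a sketch: the port numbers come from the installer message above, but the plain `http://` scheme, the function names, and whatever node IP you pass in are my own assumptions, so adjust them to your setup.

```python
import socket

# NodePorts taken from the installer message above.
MONITORING_NODEPORTS = {
    "prometheus": 30900,
    "grafana": 30902,
    "alertmanager": 30903,
}


def monitoring_urls(node_ip: str) -> dict:
    """Build a URL per UI. The http:// scheme is an assumption, not from the log."""
    return {
        name: f"http://{node_ip}:{port}"
        for name, port in MONITORING_NODEPORTS.items()
    }


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_monitoring(node_ip: str) -> dict:
    """Map each UI name to whether its NodePort accepts TCP connections."""
    return {
        name: port_is_open(node_ip, port)
        for name, port in MONITORING_NODEPORTS.items()
    }
```

Calling `check_monitoring("<your node IP>")` returns a dict of booleans. A `False` only tells you the TCP connection failed, which could just as easily be a firewall or security group rule as a problem with the add-on itself.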